Zero-Downtime TLS Certificate Rotation in Kubernetes with cert-manager

Your production deployment is humming along until 3 AM when your TLS certificate expires and your on-call engineer gets paged. The dashboard is red, customers are seeing security warnings, and everyone is scrambling to remember where that certificate came from in the first place. Was it Let’s Encrypt? Did someone generate it manually eighteen months ago? Where’s the private key?

This scenario plays out across organizations of every size, and it’s almost always preventable. The frustrating part isn’t that certificate management is inherently difficult—it’s that most teams treat it as a “set and forget” problem until it becomes a “drop everything and fix” emergency.

Manual certificate management creates a specific kind of operational debt. Unlike a slow database query or a memory leak, expired certificates fail catastrophically and instantly. There’s no graceful degradation. One second your application works; the next, browsers refuse to connect. And the blast radius extends beyond your application—service meshes, internal APIs, webhook endpoints, and ingress controllers all depend on valid certificates.

The tooling landscape has improved dramatically. cert-manager has emerged as the de facto standard for Kubernetes certificate automation, handling everything from ACME challenges to private CA integration. But installing cert-manager is just the starting point. The difference between “we have cert-manager” and “we have reliable certificate infrastructure” comes down to understanding the failure modes that tooling alone doesn’t solve: silent renewal failures that go unnoticed for weeks, clock skew between nodes causing premature validation errors, DNS propagation delays that break challenge completion, and the operational blind spots that only surface when something goes wrong.

Let’s start with the failure modes that documentation rarely mentions.

The Certificate Management Problem Nobody Talks About

At 3 AM on a Saturday, your monitoring alerts fire. Users report they cannot access your application. The incident channel floods with panicked messages. After twenty minutes of investigation, someone discovers the culprit: an expired TLS certificate on a critical ingress endpoint.

Certificate expiration remains one of the most preventable causes of production outages, yet it continues to catch teams off guard with surprising regularity.

The Operational Debt of Manual Certificate Management

Manual certificate management creates compounding technical debt that grows invisible until it causes an outage. Teams track expiration dates in spreadsheets, set calendar reminders, and rely on tribal knowledge about which certificates protect which services.

This approach fails for predictable reasons:

- Spreadsheets become stale the moment someone provisions a new service without updating the tracker

- Calendar reminders get dismissed during busy sprints or organizational transitions

- Personnel changes leave certificate knowledge siloed with departed team members

- Scaling beyond a handful of certificates makes manual tracking untenable

Failure Modes That Catch Production Systems

Beyond simple expiration, several failure modes plague certificate management in distributed systems:

Silent renewal failures occur when automated systems attempt renewal but encounter transient errors—rate limits, network partitions, or misconfigured credentials. Without proper alerting, these failures remain undetected until the certificate actually expires.

Clock skew issues cause certificates to appear invalid when they are not. A node whose clock runs ahead treats certificates as expired early, while a node whose clock runs behind rejects freshly issued certificates as not yet valid. Because only the skewed nodes are affected, these intermittent failures prove difficult to diagnose.
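The skew effect is easy to see in a few lines of Python. This is a minimal sketch rather than real X.509 validation: the helper validity_state is hypothetical and only compares a host's notion of "now" against a certificate's notBefore and notAfter timestamps.

```python
from datetime import datetime, timedelta, timezone


def validity_state(not_before: datetime, not_after: datetime,
                   true_now: datetime, clock_offset: timedelta) -> str:
    """Classify a certificate as a host with a skewed clock would see it.

    clock_offset is how far the host's clock is from real time:
    positive means the clock runs ahead, negative means it runs behind.
    """
    observed_now = true_now + clock_offset
    if observed_now < not_before:
        return "not yet valid"
    if observed_now > not_after:
        return "expired"
    return "valid"


# A certificate issued one minute ago with a 90-day lifetime.
now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
issued = now - timedelta(minutes=1)
expires = issued + timedelta(days=90)

print(validity_state(issued, expires, now, timedelta(0)))           # accurate clock
print(validity_state(issued, expires, now, timedelta(minutes=-5)))  # clock 5 min behind
print(validity_state(issued, expires, now, timedelta(days=91)))     # clock far ahead
```

The same certificate yields three different verdicts depending only on the observing host's clock, which is why skew tends to show up as failures on some nodes but not others.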

DNS propagation delays during ACME DNS-01 challenges lead to validation failures. The certificate authority looks up a TXT record that has not yet reached every nameserver for the zone, so validation fails even though the record was created correctly.
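The practical defense is to wait until every nameserver serves the record before asking the CA to validate; cert-manager performs a similar propagation self-check for DNS-01 challenges. The Python sketch below shows that wait loop with a timeout. wait_for_txt and the injected lookup callables are hypothetical stand-ins for a real DNS client, and the 120-second timeout and 10-second poll interval are illustrative values, not cert-manager settings.

```python
import time
from typing import Callable, Iterable

# Hypothetical lookup: given a record name, return the TXT values that one
# nameserver currently serves. A real version would wrap a DNS client library.
Lookup = Callable[[str], set[str]]


def wait_for_txt(name: str, expected: str, lookups: Iterable[Lookup],
                 timeout: float = 120.0, interval: float = 10.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> bool:
    """Return True once every nameserver serves `expected`, False on timeout."""
    servers = list(lookups)
    deadline = clock() + timeout
    while True:
        if all(expected in lookup(name) for lookup in servers):
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


# Simulated run: a fake clock and two nameservers, one lagging by 25 seconds.
fake_now = [0.0]


def fake_sleep(seconds: float) -> None:
    fake_now[0] += seconds


def fake_clock() -> float:
    return fake_now[0]


def lagging_server(ready_at: float) -> Lookup:
    def lookup(name: str) -> set[str]:
        return {"token-value"} if fake_now[0] >= ready_at else set()
    return lookup


print(wait_for_txt("_acme-challenge.example.com", "token-value",
                   [lagging_server(0), lagging_server(25)],
                   sleep=fake_sleep, clock=fake_clock))
```

Injecting the clock and sleep functions keeps the polling logic testable without real DNS or real waiting.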

The Real Cost of Certificate Outages

Certificate-related outages carry costs beyond immediate downtime. They erode customer trust, trigger SLA violations, and force engineers into reactive firefighting mode. A single expired certificate incident often consumes 4-8 engineer-hours when accounting for detection, diagnosis, remediation, and post-incident review.

What Automation Solves—And What It Does Not

cert-manager automates certificate issuance, renewal, and storage within Kubernetes. It eliminates manual tracking, handles renewal before expiration, and integrates with multiple certificate authorities through a unified interface.

However, cert-manager does not eliminate the need for monitoring, capacity planning for rate limits, or understanding your certificate topology. Automation shifts the burden from manual renewal to proper configuration and observability.

Understanding this distinction matters. cert-manager provides the machinery for reliable certificate lifecycle management—but you still need to operate that machinery correctly. The following sections walk through exactly how to do that, starting with cert-manager’s architecture and control loop.

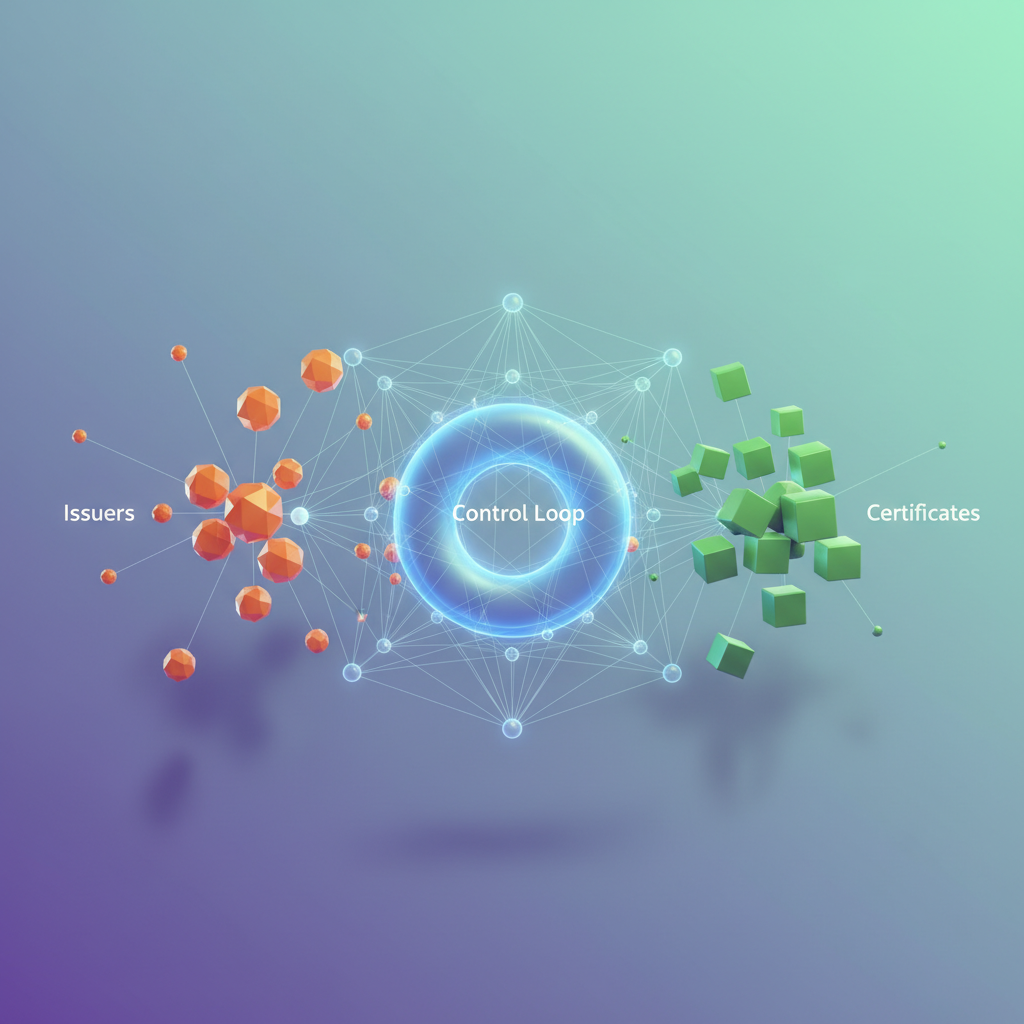

cert-manager Architecture: Issuers, Certificates, and the Control Loop

Understanding cert-manager’s internal architecture transforms you from a passive user into someone who can predict behavior, debug efficiently, and design robust configurations. At its core, cert-manager implements the Kubernetes controller pattern—a reconciliation loop that continuously drives actual state toward desired state.

The Controller Pattern and Eventual Consistency

cert-manager runs as a set of controllers watching the custom resources defined by its Custom Resource Definitions (CRDs). When you create a Certificate resource, the controller detects this change, compares current state against desired state, and takes action to reconcile the difference. This happens continuously, not just at creation time.

This model delivers eventual consistency: if a certificate expires, gets deleted, or fails validation, the controller automatically attempts recovery. Network blips, temporary DNS failures, or ACME rate limits don’t cause permanent failures; the controller keeps retrying failed issuance with exponential backoff, spacing attempts further apart after each consecutive failure.
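The retry schedule can be pictured as a capped exponential backoff. In this sketch we assume a 1-hour base delay that doubles after each consecutive failure and is capped at 32 hours; those numbers reflect our understanding of recent cert-manager releases, so confirm them against the documentation for your version. retry_delay is a hypothetical helper, not a cert-manager API.

```python
from datetime import timedelta

# Assumed parameters (check your cert-manager version's documentation).
BASE_DELAY = timedelta(hours=1)
MAX_DELAY = timedelta(hours=32)


def retry_delay(consecutive_failures: int) -> timedelta:
    """Delay before the next issuance attempt after n consecutive failures."""
    if consecutive_failures < 1:
        return timedelta(0)  # nothing has failed yet, so no backoff applies
    delay = BASE_DELAY * (2 ** (consecutive_failures - 1))
    return min(delay, MAX_DELAY)


for failures in range(1, 8):
    print(failures, retry_delay(failures))
```

Under these assumptions a persistently failing certificate is retried only about once every 32 hours after a handful of failures, which is one reason renewal failures need alerting rather than waiting for the next attempt to succeed on its own.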

The reconciliation loop tracks status conditions on Certificate resources, chiefly Ready and Issuing, while the CertificateRequest resources it creates carry their own conditions, such as Ready, Approved, Denied, and InvalidRequest. When troubleshooting, these conditions tell you exactly where in the lifecycle a certificate stalled. A certificate whose Issuing condition reports a failure points you directly to the underlying CertificateRequest resource for detailed error messages.
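Reading these conditions programmatically is straightforward once you have a Certificate's JSON, for example from kubectl get certificate NAME -o json. The sketch below is a hypothetical triage helper (diagnose and find_condition are our names), and the reason values in the example status are illustrative.

```python
from typing import Optional


def find_condition(resource: dict, cond_type: str) -> Optional[dict]:
    """Return the status condition of the given type, or None if absent."""
    for cond in resource.get("status", {}).get("conditions", []):
        if cond.get("type") == cond_type:
            return cond
    return None


def diagnose(certificate: dict) -> str:
    """Summarize a Certificate's lifecycle stage from its Ready/Issuing conditions."""
    ready = find_condition(certificate, "Ready")
    issuing = find_condition(certificate, "Issuing")
    if ready is not None and ready.get("status") == "True":
        # Ready wins: the Secret holds a valid certificate even if a renewal is running.
        return "ready"
    if issuing is not None and issuing.get("status") == "True":
        return "issuing: inspect the latest CertificateRequest for progress"
    if issuing is not None and issuing.get("status") == "False":
        return (f"issuance failed ({issuing.get('reason', 'unknown')}): "
                "inspect the latest CertificateRequest")
    reason = ready.get("reason", "unknown") if ready is not None else "no Ready condition yet"
    return f"not ready ({reason})"


# Illustrative status, shaped like `kubectl get certificate <name> -o json` output.
stuck = {"status": {"conditions": [
    {"type": "Ready", "status": "False", "reason": "DoesNotExist"},
    {"type": "Issuing", "status": "False", "reason": "Failed"},
]}}
print(diagnose(stuck))
```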

The Resource Hierarchy

cert-manager organizes certificate management through three primary resource types:

Issuers define how certificates are obtained—they specify the certificate authority, authentication credentials, and challenge configuration. An Issuer is namespace-scoped, meaning it can only issue certificates within its own namespace.

ClusterIssuers function identically to Issuers but operate cluster-wide. Any Certificate resource in any namespace can reference a ClusterIssuer, making them ideal for platform teams providing certificate services to multiple application teams.

Certificates declare the desired certificate properties: DNS names, validity duration, secret name for storage, and which Issuer to use. When you create a Certificate, cert-manager generates a CertificateRequest, which the appropriate Issuer controller processes to obtain the actual certificate.
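The scoping rules come down to how a Certificate's issuerRef is resolved. This is a minimal model, not cert-manager code: IssuerRef and resolve_issuer are hypothetical, external issuer groups are ignored, and an omitted kind is treated as Issuer, matching cert-manager's default.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IssuerRef:
    name: str
    kind: str = "Issuer"  # an omitted kind means a namespaced Issuer


def resolve_issuer(cert_namespace: str, ref: IssuerRef,
                   issuers: set[tuple[str, str]], cluster_issuers: set[str]) -> str:
    """Describe which issuer a Certificate would use, or raise if none matches.

    issuers holds (namespace, name) pairs; cluster_issuers holds bare names
    because ClusterIssuers are not namespaced.
    """
    if ref.kind == "ClusterIssuer":
        if ref.name in cluster_issuers:
            return f"ClusterIssuer/{ref.name}"
        raise LookupError(f"ClusterIssuer {ref.name!r} not found")
    if ref.kind == "Issuer":
        # A namespaced Issuer is only visible to Certificates in its own namespace.
        if (cert_namespace, ref.name) in issuers:
            return f"Issuer/{cert_namespace}/{ref.name}"
        raise LookupError(f"Issuer {ref.name!r} not found in namespace {cert_namespace!r}")
    raise ValueError(f"unsupported issuer kind {ref.kind!r}")


issuers = {("team-a", "internal-ca")}
cluster_issuers = {"letsencrypt-prod"}
print(resolve_issuer("team-a", IssuerRef("internal-ca"), issuers, cluster_issuers))
print(resolve_issuer("team-b", IssuerRef("letsencrypt-prod", "ClusterIssuer"),
                     issuers, cluster_issuers))
```

A Certificate in team-b that references the Issuer internal-ca fails to resolve, while any namespace can use the ClusterIssuer.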

💡 Pro Tip: Use ClusterIssuers for shared infrastructure concerns (wildcard certificates, internal CA) and namespace-scoped Issuers when teams need isolated control over their certificate sources or credentials.

Choosing Between ClusterIssuer and Issuer

The decision comes down to credential isolation and operational boundaries. ClusterIssuers read their credentials from a single cluster resource namespace (by default, the namespace cert-manager runs in), centralizing secret management but requiring cluster-admin involvement for changes. Namespace-scoped Issuers let teams manage their own ACME accounts or CA credentials independently.

For multi-tenant clusters, a common pattern combines both: a ClusterIssuer handles production certificates through a centrally managed ACME account, while development namespaces use local Issuers pointing to staging environments.

With this mental model of controllers, resources, and their relationships established, let’s move to the practical work of installing cert-manager with production-appropriate Helm configurations.

Production-Ready Installation with Helm

The default cert-manager installation works fine for development, but production clusters demand more. Resource limits prevent runaway memory consumption during certificate storms. Pod disruption budgets ensure upgrades don’t knock out your webhook during critical moments. Webhook timeout configuration determines whether certificate operations fail gracefully or leave your deployments hanging. Getting these settings right from the start saves you from 3 AM pages when your certificates fail to renew.

Helm Values for Production

Create a dedicated values file that configures cert-manager for reliability under load. Each component requires careful tuning based on your cluster’s workload patterns and failure tolerance requirements:

```yaml
# cert-manager-values.yaml
installCRDs: true

replicaCount: 3

podDisruptionBudget:
  enabled: true
  minAvailable: 2

resources:
  requests:
    cpu: 50m
    memory: 128Mi
  limits:
    cpu: 500m
    memory: 512Mi

webhook:
  replicaCount: 3
  timeoutSeconds: 30
  podDisruptionBudget:
    enabled: true
    minAvailable: 2
  resources:
    requests:
      cpu: 25m
      memory: 64Mi
    limits:
      cpu: 250m
      memory: 256Mi
  # Intent: fail open during webhook unavailability.
  # Verify that your chart version actually exposes this key.
  failurePolicy: Ignore

cainjector:
  replicaCount: 2
  resources:
    requests:
      cpu: 25m
      memory: 128Mi
    limits:
      cpu: 250m
      memory: 256Mi

prometheus:
  enabled: true
  servicemonitor:
    enabled: true
    namespace: monitoring

global:
  leaderElection:
    namespace: cert-manager
```

The webhook configuration deserves special attention. The timeoutSeconds: 30 setting gives the webhook adequate time to validate certificate resources during high-load scenarios; 30 seconds is also the maximum Kubernetes allows for an admission webhook. The failurePolicy: Ignore entry expresses the intent to fail open. Because the cert-manager webhook only intercepts cert-manager resources, a fail-closed outage blocks changes to Certificates and Issuers rather than unrelated workloads, and failing open keeps those changes flowing. Confirm that your chart version honors this key before relying on it, since Helm may silently ignore values a chart does not use. In clusters where certificate validation is security-critical, keep failurePolicy: Fail instead, but ensure you have robust monitoring to detect webhook outages immediately.

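The cainjector component handles CA bundle injection into webhooks and API services. While it tolerates fewer replicas than the main controller, resource constraints remain important: memory pressure during large-scale certificate rotations can cause cainjector to crash, leaving webhooks with stale CA bundles. Keep in mind that both the controller and cainjector use leader election, so only one replica of each is active at a time; the extra replicas buy fast failover, not extra throughput. The webhook, by contrast, serves requests from every replica.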

Install cert-manager with these production settings:

```bash
helm repo add jetstack https://charts.jetstack.io
helm repo update

kubectl create namespace cert-manager

helm install cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --version v1.14.4 \
  --values cert-manager-values.yaml \
  --wait
```

💡 Pro Tip: The --wait flag blocks until all pods are ready, preventing race conditions when you immediately apply Issuer resources.

RBAC for Multi-Tenant Clusters

In shared clusters, restrict who can create cluster-wide issuers versus namespace-scoped ones. Unrestricted ClusterIssuer access allows any tenant to potentially issue certificates for domains they don’t own, creating security vulnerabilities. This ClusterRole allows teams to manage their own certificates without touching cluster resources:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: tenant-certificate-manager
rules:
  - apiGroups: ["cert-manager.io"]
    resources: ["certificates", "certificaterequests"]
    verbs: ["create", "delete", "get", "list", "watch", "update", "patch"]
  - apiGroups: ["cert-manager.io"]
    resources: ["issuers"]
    verbs: ["create", "delete", "get", "list", "watch", "update", "patch"]
  # No rule grants access to clusterissuers. RBAC is additive, so omitting
  # the resource is what denies it; tenants use namespace Issuers only.
```

Bind this role to tenant service accounts or groups within their respective namespaces using RoleBindings; a RoleBinding that references a ClusterRole grants its permissions only inside the RoleBinding's namespace. Platform administrators should create pre-configured ClusterIssuers that reference centrally managed credentials, allowing tenants to issue certificates without accessing sensitive ACME account keys or DNS provider tokens. Note that a Certificate can reference any ClusterIssuer by name without its author having RBAC access to the ClusterIssuer object itself, because the cert-manager controller resolves the reference. Consider implementing admission policies that verify Certificate resources reference only approved ClusterIssuers, preventing tenants from circumventing namespace isolation.
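The decision such an admission policy makes is small enough to sketch. The Python below shows only the check itself; in a real cluster you would express it in an admission mechanism such as a ValidatingAdmissionPolicy, Kyverno, or OPA Gatekeeper. check_certificate and the allow-list contents are hypothetical.

```python
# Hypothetical allow-list maintained by the platform team.
APPROVED_CLUSTER_ISSUERS = {"letsencrypt-prod", "letsencrypt-staging"}


def check_certificate(cert: dict,
                      approved: set[str] = APPROVED_CLUSTER_ISSUERS) -> tuple[bool, str]:
    """Admit a Certificate unless it references an unapproved ClusterIssuer."""
    ref = cert.get("spec", {}).get("issuerRef", {})
    kind = ref.get("kind", "Issuer")  # an omitted kind means a namespaced Issuer
    name = ref.get("name", "")
    if kind == "ClusterIssuer" and name not in approved:
        return False, f"ClusterIssuer {name!r} is not on the approved list"
    # Namespaced Issuers are governed by the tenant RBAC shown above.
    return True, "allowed"


print(check_certificate({"spec": {"issuerRef": {"name": "rogue-ca", "kind": "ClusterIssuer"}}}))
```

Because ingress-shim creates Certificates through the Kubernetes API, a check at the Certificate level also covers certificates requested through Ingress annotations.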

Validating the Installation

Before deploying your first production certificate, verify that all components function correctly. A thorough validation catches misconfigurations before they impact real workloads:

```bash
# Check all pods are running
kubectl get pods -n cert-manager

# Verify the webhook is available (cert-manager v1.x registers it through
# admission webhook configurations, not an APIService)
kubectl rollout status deployment/cert-manager-webhook -n cert-manager
kubectl get validatingwebhookconfiguration cert-manager-webhook

# Test certificate creation with a self-signed issuer
kubectl apply -f - <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: selfsigned-test
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: test-certificate
  namespace: cert-manager
spec:
  secretName: test-tls
  issuerRef:
    name: selfsigned-test
    kind: ClusterIssuer
  commonName: test.local
  dnsNames:
    - test.local
EOF

# Verify the certificate was issued
kubectl wait --for=condition=Ready certificate/test-certificate -n cert-manager --timeout=60s
kubectl get certificate -n cert-manager test-certificate

# Clean up test resources (deleting a Certificate leaves its Secret behind by default)
kubectl delete certificate -n cert-manager test-certificate
kubectl delete secret -n cert-manager test-tls
kubectl delete clusterissuer selfsigned-test
```

The certificate should reach Ready: True within seconds. If it stays pending, check the cert-manager controller logs for webhook connectivity issues or RBAC problems. Common failure modes include network policies blocking webhook traffic, resource exhaustion preventing pod scheduling, and misconfigured leader election causing controller conflicts.

For production environments, extend this validation to include load testing. Create multiple certificates simultaneously to verify the controller handles concurrent requests without resource starvation. Monitor memory consumption during these tests—if limits are too aggressive, the controller restarts mid-operation, leaving certificates in inconsistent states.

With cert-manager installed and validated, you’re ready to configure real certificate issuers. ACME-based issuers from Let’s Encrypt remain the most common choice, supporting both HTTP-01 challenges for public endpoints and DNS-01 challenges for wildcard certificates and internal services.

Configuring ACME Issuers with DNS-01 and HTTP-01 Challenges

ACME (Automatic Certificate Management Environment) issuers form the backbone of automated certificate provisioning with Let’s Encrypt. The challenge type you choose determines how cert-manager proves domain ownership—and getting this right eliminates entire categories of production incidents.

Choosing Between DNS-01 and HTTP-01

HTTP-01 challenges require cert-manager to expose a temporary endpoint at /.well-known/acme-challenge/ on port 80. This works well for publicly accessible services but fails completely for wildcard certificates, internal clusters, or environments where port 80 is blocked by corporate firewalls. The challenge flow involves Let’s Encrypt making an inbound HTTP request to your cluster, which means your ingress controller must be reachable from the public internet during validation.

DNS-01 challenges prove ownership by creating a TXT record under _acme-challenge.yourdomain.com. This approach handles wildcard certificates, works behind firewalls, and doesn’t require any inbound connectivity. The tradeoff: you need API access to your DNS provider, and propagation delays can occasionally cause validation timeouts if your DNS provider is slow to update.

Use HTTP-01 when you have simple, publicly routable services and don’t need wildcards. Use DNS-01 for everything else—wildcards, private clusters, split-horizon DNS environments, or when you want consistent validation regardless of network topology. Many teams standardize on DNS-01 across all environments simply to avoid maintaining two different validation strategies.

Setting Up Staging and Production Issuers

Always configure both staging and production issuers. Let’s Encrypt’s production endpoint has strict rate limits (50 certificates per registered domain per week), while staging has far higher limits and issues untrusted certificates, which makes it the right place to test. Teams that skip staging often hit rate limits during development cycles and find themselves locked out of certificate issuance for days.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    # Placeholder address: replace with a monitored team alias
    email: platform-team@example.com
    privateKeySecretRef:
      name: letsencrypt-staging-account-key
    solvers:
      - http01:
          ingress:
            ingressClassName: nginx
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    # Placeholder address: replace with a monitored team alias
    email: platform-team@example.com
    privateKeySecretRef:
      name: letsencrypt-prod-account-key
    solvers:
      - http01:
          ingress:
            ingressClassName: nginx
```

💡 Pro Tip: Set the ACME email field to a distribution list or team alias. Individual emails create bus-factor problems when that engineer leaves the organization. Let’s Encrypt has historically sent expiration warnings to this address, so make sure it routes to a monitored inbox, but do not treat those emails as your only expiry signal.

Configuring DNS-01 with Cloud Providers

DNS-01 validation requires provider-specific credentials. Here’s a production-ready configuration for AWS Route53:

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod-dns
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-prod-dns-account-key
    solvers:
      - dns01:
          route53:
            region: us-east-1
            hostedZoneID: Z2ABCDEF123456
        selector:
          dnsZones:
            - "timderzhavets.com"
```

For EKS clusters, use IAM Roles for Service Accounts (IRSA) instead of static credentials. The cert-manager service account needs permissions for route53:GetChange, route53:ChangeResourceRecordSets, route53:ListResourceRecordSets, and route53:ListHostedZonesByName. Avoid granting broader Route53 permissions: the principle of least privilege matters especially for components with DNS write access, so scope the record-change permissions to the hosted zones cert-manager actually manages.

For Google Cloud DNS, reference a service account key stored as a Kubernetes secret. On GKE, prefer Workload Identity over secret-based authentication when possible:

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod-gcp
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-prod-gcp-account-key
    solvers:
      - dns01:
          cloudDNS:
            project: timderzhavets-prod
            serviceAccountSecretRef:
              name: clouddns-service-account
              key: credentials.json
```

The selector.dnsZones field used in the Route53 example enables multi-zone configurations: list several solvers in one issuer, each with its own selector, so that different domains validate against different DNS providers. This is essential for organizations managing multiple cloud accounts or hybrid infrastructure.
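When several solvers are listed, cert-manager uses the one whose selector matches a domain most specifically. The sketch below is our simplified reading of that behavior: it considers only dnsNames and dnsZones (not matchLabels), treats an exact dnsNames match as most specific, then prefers the longest matching zone, and falls back to a solver with no selector. The Solver class and pick_solver function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Solver:
    name: str  # label for illustration only
    dns_names: list[str] = field(default_factory=list)
    dns_zones: list[str] = field(default_factory=list)


def _in_zone(domain: str, zone: str) -> bool:
    return domain == zone or domain.endswith("." + zone)


def pick_solver(domain: str, solvers: list[Solver]) -> Optional[Solver]:
    # 1. An exact dnsNames match is treated as the most specific.
    for solver in solvers:
        if domain in solver.dns_names:
            return solver
    # 2. Otherwise the solver with the longest matching dnsZones entry wins.
    best, best_len = None, -1
    for solver in solvers:
        for zone in solver.dns_zones:
            if _in_zone(domain, zone) and len(zone) > best_len:
                best, best_len = solver, len(zone)
    if best is not None:
        return best
    # 3. Fall back to a solver without any selector.
    for solver in solvers:
        if not solver.dns_names and not solver.dns_zones:
            return solver
    return None


solvers = [
    Solver("route53", dns_zones=["example.com"]),
    Solver("clouddns", dns_zones=["internal.example.com"]),
    Solver("default-http01"),
]
print(pick_solver("api.internal.example.com", solvers).name)
```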

Handling Rate Limits with Issuer Fallback

Production environments should implement issuer redundancy. When Let’s Encrypt rate limits hit (which can happen during incident recovery or large-scale deployments), having a fallback issuer prevents certificate outages from cascading into application downtime.

Configure multiple issuers with different ACME providers—ZeroSSL and Buypass both offer free certificates with separate rate limit pools:

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: zerossl-prod
spec:
  acme:
    server: https://acme.zerossl.com/v2/DV90
    externalAccountBinding:
      keyID: a1b2c3d4e5f6g7h8
      keySecretRef:
        name: zerossl-eab-secret
        key: secret
    privateKeySecretRef:
      name: zerossl-prod-account-key
    solvers:
      - dns01:
          route53:
            region: us-east-1
            hostedZoneID: Z2ABCDEF123456
```

Note that ZeroSSL requires External Account Binding (EAB) credentials, which you obtain from their dashboard. Store these credentials securely and rotate them according to your organization’s secret management policies.

The fallback strategy works at the application level—annotate critical ingresses with your primary issuer and have runbooks ready to switch annotations when rate limits hit. Consider implementing automated monitoring that alerts when certificate orders fail, giving your team time to switch issuers before existing certificates expire.
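Before a bulk rollout or an incident-driven re-issue, a rough headroom estimate shows whether you are likely to need the fallback issuer at all. This is a minimal sketch assuming the 50-per-week figure quoted earlier, counted over a simple rolling seven-day window for one registered domain; Let’s Encrypt applies further limits (for example on failed validations and duplicate certificates), so treat the result as a planning aid, not a guarantee. remaining_budget and the issuance history are hypothetical.

```python
from datetime import datetime, timedelta

# Assumptions for illustration only.
WEEKLY_LIMIT = 50
WINDOW = timedelta(days=7)


def remaining_budget(issued_at: list[datetime], now: datetime,
                     limit: int = WEEKLY_LIMIT) -> int:
    """Certificates you could still issue now under a simple rolling-window model."""
    recent = [t for t in issued_at if now - WINDOW < t <= now]
    return max(limit - len(recent), 0)


now = datetime(2024, 3, 8, 12, 0)
# Hypothetical issuance history for one registered domain.
history = [now - timedelta(days=d) for d in (1, 2, 3, 8, 10)]
print(remaining_budget(history, now))
```

If the planned rollout exceeds the remaining budget, that is the moment to move some ingresses to the fallback issuer rather than waiting for 429 responses.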

With issuers configured, the next step is connecting them to your ingress resources through cert-manager’s annotation-driven provisioning system.

Ingress Integration and Annotation-Driven Certificate Provisioning

The ingress-shim controller transforms TLS certificate management from an operational burden into a declarative, self-service workflow. By adding a single annotation to your Ingress resources, cert-manager automatically creates Certificate objects, requests certificates from your configured issuer, and stores them in Kubernetes Secrets—all without manual intervention.

How the Ingress-Shim Controller Works

The ingress-shim is a component within cert-manager that watches all Ingress resources in your cluster. When it detects an Ingress with the cert-manager.io/cluster-issuer or cert-manager.io/issuer annotation, it automatically generates a corresponding Certificate resource. This Certificate inherits the TLS hosts and secret name directly from your Ingress spec.

The controller reconciles continuously. If someone deletes the Certificate or Secret, cert-manager recreates them. If the Ingress TLS configuration changes, the Certificate updates accordingly. This declarative approach ensures your TLS state always matches your Ingress definitions.

The distinction between the two annotations matters for multi-tenant clusters. Use cert-manager.io/issuer to reference namespace-scoped Issuers, which enforce isolation between teams. Use cert-manager.io/cluster-issuer when a central platform team manages certificate issuance cluster-wide through ClusterIssuers.

Annotation-Driven Certificate Creation

The simplest integration requires just one annotation:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-gateway
  namespace: production
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - api.example.com
      secretName: api-gateway-tls
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 8080
```

When you apply this manifest, cert-manager creates a Certificate named api-gateway-tls that requests a certificate for api.example.com from the letsencrypt-prod ClusterIssuer. The certificate gets stored in the Secret specified by secretName.

Beyond the issuer annotation, cert-manager supports additional annotations for fine-grained control. The cert-manager.io/duration annotation overrides the default certificate validity period, while cert-manager.io/renew-before controls how early before expiration renewal begins.
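The two annotations interact in a simple way: renewal is scheduled renewBefore ahead of the certificate's expiry, and when renewBefore is unset cert-manager defaults to one third of the certificate's lifetime. The annotation values use Go duration syntax such as 2160h. Here is a minimal Python sketch of the arithmetic; renewal_time is our own helper, not a cert-manager API.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def renewal_time(not_before: datetime, not_after: datetime,
                 renew_before: Optional[timedelta] = None) -> datetime:
    """When renewal is due: renew_before ahead of expiry, defaulting to 1/3 of the lifetime."""
    lifetime = not_after - not_before
    if renew_before is None:
        renew_before = lifetime / 3  # i.e. renew once two-thirds of the lifetime has elapsed
    if renew_before >= lifetime:
        raise ValueError("renew_before must be shorter than the certificate lifetime")
    return not_after - renew_before


issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(days=90)  # a 90-day Let's Encrypt certificate
print(renewal_time(issued, expires))                      # default: 30 days before expiry
print(renewal_time(issued, expires, timedelta(days=15)))  # explicit renew-before
```

Keep in mind that ACME CAs such as Let’s Encrypt may ignore a requested duration and issue their standard 90-day lifetime, so the effective renewal point is computed from the certificate actually issued.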

Handling Multiple TLS Hosts

Production Ingresses often serve multiple domains. cert-manager handles this by creating a single Certificate with multiple DNS names:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-frontend
  namespace: production
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - www.example.com
        - example.com
        - app.example.com
      secretName: web-frontend-tls
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 3000
```

All hostnames appear on a single certificate as Subject Alternative Names (SANs). Let’s Encrypt supports up to 100 SANs per certificate, making this approach practical for most deployments.

When your Ingress includes multiple tls blocks with different secretName values, cert-manager creates separate Certificate resources for each. This design lets you isolate certificate lifecycles—useful when different domains have different security requirements.
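The mapping from an Ingress to Certificates can be modeled in a few lines: one Certificate per tls entry, named after its secretName, with that entry's hosts as DNS names. This Python sketch is a simplified model of that behavior; certificates_for_ingress and the dictionary shape are ours, not cert-manager's types, and the sketch deliberately rejects duplicate secretNames instead of guessing how they would be merged.

```python
def certificates_for_ingress(ingress: dict, issuer_name: str,
                             issuer_kind: str = "ClusterIssuer") -> list[dict]:
    """Derive simplified Certificate specs from an Ingress's tls entries."""
    namespace = ingress["metadata"]["namespace"]
    seen: set[str] = set()
    certs = []
    for entry in ingress.get("spec", {}).get("tls", []):
        secret = entry["secretName"]
        if secret in seen:
            # Duplicate handling is out of scope for this sketch.
            raise ValueError(f"duplicate secretName {secret!r}")
        seen.add(secret)
        certs.append({
            "name": secret,  # the Certificate is named after its Secret
            "namespace": namespace,
            "secretName": secret,
            "dnsNames": list(entry.get("hosts", [])),
            "issuerRef": {"name": issuer_name, "kind": issuer_kind},
        })
    return certs


ingress = {
    "metadata": {"name": "web-frontend", "namespace": "production"},
    "spec": {"tls": [
        {"hosts": ["www.example.com", "example.com"], "secretName": "web-frontend-tls"},
        {"hosts": ["admin.example.com"], "secretName": "admin-tls"},
    ]},
}
for cert in certificates_for_ingress(ingress, "letsencrypt-prod"):
    print(cert["name"], cert["dnsNames"])
```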

Migrating Existing Ingresses Without Downtime

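For Ingresses already serving traffic with manually managed certificates, migration requires a careful sequence:

- Add the annotation without changing the secretName. Keep the Ingress TLS block pointing at the existing Secret. cert-manager creates a Certificate for it, typically notices that the Secret was not issued by the referenced issuer, and requests a replacement. The ingress controller keeps serving the old certificate in the meantime.

- Wait for certificate readiness. Monitor the Certificate resource cert-manager creates. Once its status shows Ready: True, cert-manager has written the new certificate into the same Secret in place, and the ingress controller picks it up without dropping connections.

- Force a re-issue only if needed. If you want a fresh certificate after the Certificate reports Ready, trigger renewal with cmctl rather than deleting the Secret. Deleting the Secret leaves a window in which the ingress controller has no certificate for the host (ingress-nginx, for example, falls back to its default certificate) until issuance completes, and ACME challenges can take minutes rather than seconds.

```bash
# Watch the Certificate that ingress-shim created (named after the secretName)
kubectl get certificate -n production web-frontend-tls
kubectl wait --for=condition=Ready certificate/web-frontend-tls -n production --timeout=10m

# Optional: force re-issuance without deleting the Secret (requires the cmctl CLI)
cmctl renew web-frontend-tls -n production
```

Rehearse this sequence in a staging namespace first, since how quickly the existing Secret gets replaced depends on your cert-manager version and issuer.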

For high-traffic services where even brief disruption is unacceptable, consider a parallel migration: create a new Ingress with a different name and secretName pointing to the same backend services, verify TLS works correctly, then cut over traffic and decommission the legacy Ingress.

Monitoring Certificate Health and Expiration

Certificate automation eliminates manual renewal tasks, but it introduces a different challenge: silent failures. A misconfigured DNS provider, expired API credentials, or rate limit exhaustion can prevent renewals without triggering obvious errors. By the time anyone notices, your certificates have expired and services are down. Proactive monitoring transforms certificate management from a hope-based system into an observable, alertable infrastructure component.

Essential Prometheus Metrics

cert-manager exposes metrics on port 9402 by default. The most critical metric for preventing outages is certmanager_certificate_expiration_timestamp_seconds, which provides the Unix timestamp when each certificate expires. Combined with Prometheus’s time() function, you can calculate exactly how much validity remains. This single metric forms the foundation of certificate observability—everything else builds on knowing when certificates will expire.

If you did not already enable the ServiceMonitor through the Helm values (prometheus.servicemonitor.enabled), you can create one manually for Prometheus Operator integration:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: cert-manager
  namespace: cert-manager
  labels:
    app: cert-manager
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: cert-manager
  endpoints:
    - port: tcp-prometheus-servicemonitor
      interval: 60s
      path: /metrics
```

Beyond expiration timestamps, monitor these additional metrics for complete visibility:

- certmanager_certificate_ready_status: Current ready condition, exported as one series per condition value (True, False, Unknown) with the active one set to 1. This metric immediately reflects certificate health and catches issues that expiration time alone cannot detect.

- certmanager_certificate_renewal_timestamp_seconds: When cert-manager will attempt renewal. Comparing this against the current time reveals whether renewals are scheduled appropriately.

- certmanager_controller_sync_call_count: Controller reconciliation activity. Spikes in error counts indicate systematic issues with your certificate infrastructure. Label sets vary between cert-manager releases, so confirm against your /metrics output which labels or error counters your version exposes before writing queries that filter on a result label.

- certmanager_http_acme_client_request_count: ACME request counts by status code. Track 429 responses to detect rate limiting before it causes renewal failures.

Alerting Rules for Proactive Response

Configure alerts at multiple thresholds to give your team escalating warnings before certificates expire. The goal is layered defense: catch issues early when you have time to investigate calmly, then escalate urgency as expiration approaches.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: cert-manager-alerts
  namespace: monitoring
spec:
  groups:
    - name: cert-manager
      rules:
        - alert: CertificateExpiringSoon
          expr: |
            (certmanager_certificate_expiration_timestamp_seconds - time()) < 604800
          for: 1h
          labels:
            severity: warning
          annotations:
            summary: "Certificate {{ $labels.name }} expires in less than 7 days"
            description: "Certificate {{ $labels.name }} in namespace {{ $labels.namespace }} expires in {{ $value | humanizeDuration }}"

        - alert: CertificateExpiryCritical
          expr: |
            (certmanager_certificate_expiration_timestamp_seconds - time()) < 172800
          for: 10m
          labels:
            severity: critical
          annotations:
            summary: "Certificate {{ $labels.name }} expires in less than 48 hours"

        - alert: CertificateNotReady
          expr: |
            certmanager_certificate_ready_status{condition="True"} == 0
          for: 15m
          labels:
            severity: warning
          annotations:
            summary: "Certificate {{ $labels.name }} is not ready"
            description: "Certificate has been in not-ready state for 15 minutes, indicating a possible renewal failure"

        - alert: HighCertificateRenewalFailureRate
          # Verify that your cert-manager version exposes a result label on this metric
          expr: |
            sum(rate(certmanager_controller_sync_call_count{result="error"}[10m])) > 0.1
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "Elevated certificate renewal error rate detected"
            description: "cert-manager is experiencing sustained reconciliation errors"
```

The CertificateNotReady alert catches renewal failures early. When renewBefore is unset, cert-manager renews once two-thirds of a certificate's lifetime has elapsed, which for Let’s Encrypt's 90-day certificates is about 30 days before expiration, so a certificate stuck in not-ready state still leaves you weeks to investigate and resolve the issue. The HighCertificateRenewalFailureRate alert complements this by detecting systemic problems that might affect multiple certificates simultaneously, such as ACME server issues or cluster-wide DNS problems.
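To sanity-check the alert tiers, it helps to compute which tier a given expiry lands in. This Python sketch mirrors the two expiry rules above with the same second-based thresholds but ignores their for: durations; expiry_severity and alerts_inside_renewal_window are hypothetical helpers. The second function checks that the earliest expiry alert only fires after renewal should already have happened.

```python
from typing import Optional

# Thresholds copied from the alert rules, in seconds.
WARNING_WINDOW = 7 * 24 * 3600   # 604800: CertificateExpiringSoon
CRITICAL_WINDOW = 2 * 24 * 3600  # 172800: CertificateExpiryCritical
DAY = 24 * 3600


def expiry_severity(expiration_ts: float, now_ts: float) -> Optional[str]:
    """Most severe expiry tier that would be firing (both fire below 48 hours)."""
    remaining = expiration_ts - now_ts
    if remaining < CRITICAL_WINDOW:
        return "critical"
    if remaining < WARNING_WINDOW:
        return "warning"
    return None


def alerts_inside_renewal_window(renew_before_seconds: float) -> bool:
    """True if the earliest expiry alert fires only after renewal was due."""
    return WARNING_WINDOW < renew_before_seconds


print(expiry_severity(expiration_ts=5 * DAY, now_ts=0))
print(alerts_inside_renewal_window(30 * DAY))
```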

💡 Pro Tip: Set the CertificateExpiringSoon threshold well inside your renewBefore window, that is, shorter than renewBefore. If certificates renew at 30 days and you alert at 7 days, an alert means renewal has already been failing for more than three weeks, and that is exactly when you want to know.

Dashboard Essentials

Build a Grafana dashboard that answers three questions at a glance: which certificates expire soonest, which certificates are failing renewal, and what’s the overall health of your certificate fleet. A well-designed dashboard enables quick triage during incidents and provides ongoing visibility for capacity planning.

Key panels to include:

```promql
# Certificates expiring within 30 days (table), soonest first
sort((certmanager_certificate_expiration_timestamp_seconds - time()) / 86400 < 30)

# Certificate fleet health (stat panel): ready certificates / all certificates
count(certmanager_certificate_ready_status{condition="True"} == 1)
  / count(certmanager_certificate_ready_status{condition="True"})

# Failed renewals over time (graph)
sum(rate(certmanager_controller_sync_call_count{result="error"}[5m])) by (controller)

# ACME rate limit status (graph)
sum(rate(certmanager_http_acme_client_request_count{status="429"}[1h])) by (host)
```

Display certificates as a sorted table with namespace, name, days until expiration, and ready status. Color-code rows based on urgency: green for healthy, yellow under 14 days, red under 7 days. Include a namespace filter to help teams focus on their own certificates in multi-tenant clusters. Adding a time-series graph showing certificate counts by ready status over time helps identify intermittent issues that point-in-time metrics might miss.
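The fleet-health ratio is easy to get wrong. We assume, as described for certmanager_certificate_ready_status, that each certificate exports one series per condition value with the active one set to 1, so dividing by every series instead of every certificate undercounts health by a factor of three. This Python sketch (fleet_health and naive_ratio are hypothetical) contrasts the two denominators on hand-written samples.

```python
from typing import Optional

# Each sample: (certificate name, condition label, gauge value).
Sample = tuple[str, str, float]


def fleet_health(samples: list[Sample]) -> Optional[float]:
    """Fraction of certificates whose Ready condition is True, or None if none exist."""
    certs = {name for name, cond, _ in samples if cond == "True"}
    ready = {name for name, cond, value in samples if cond == "True" and value == 1}
    return len(ready) / len(certs) if certs else None


def naive_ratio(samples: list[Sample]) -> float:
    """The mistake to avoid: dividing by every series instead of every certificate."""
    ready = sum(1 for _, cond, value in samples if cond == "True" and value == 1)
    return ready / len(samples)


samples = [
    ("api-tls", "True", 1), ("api-tls", "False", 0), ("api-tls", "Unknown", 0),
    ("web-tls", "True", 0), ("web-tls", "False", 1), ("web-tls", "Unknown", 0),
]
print(fleet_health(samples), naive_ratio(samples))
```

With one of two certificates ready, the correct ratio is 0.5 while the naive one reports roughly 0.17.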

With metrics flowing and alerts configured, you have visibility into certificate health across your entire cluster. When alerts fire or dashboards show issues, the next step is systematic troubleshooting to identify and resolve the root cause.

Troubleshooting Certificate Issues in Production

Certificate failures at 2 AM demand systematic debugging, not frantic kubectl commands. Understanding cert-manager’s status conditions and common failure patterns transforms incident response from guesswork into methodical diagnosis. This section equips you with the diagnostic tools and recovery procedures needed to resolve certificate issues quickly and confidently.

Reading Status Conditions

Every Certificate resource exposes conditions that reveal its current state. Start here before diving deeper:

```bash
# Get certificate status with conditions
kubectl get certificate api-tls -n production -o yaml | yq '.status'

# Quick health check across all certificates
kubectl get certificates -A -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,READY:.status.conditions[?(@.type=="Ready")].status,REASON:.status.conditions[?(@.type=="Ready")].reason'
```

The Ready condition tells you whether the certificate is up to date and its Secret holds a valid, unexpired certificate. When it shows False, the reason and message fields explain why; also check the separate Issuing condition, which reports whether an issuance attempt is in progress or has failed. Pay attention to the lastTransitionTime field: it shows how long the certificate has been in its current state, which helps distinguish transient issues from persistent failures.
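To apply the lastTransitionTime advice across a whole cluster, you can add that timestamp as a column and flag certificates that have been not-Ready for longer than a threshold. A minimal sketch, assuming GNU date (for date -d) and a hypothetical stuck_certs helper; the one-hour default threshold is arbitrary.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report certificates whose Ready condition is not True
# and has not changed for longer than THRESHOLD seconds.
# Requires GNU date to parse RFC 3339 timestamps with `date -d`.
#
# stuck_certs [THRESHOLD_SECONDS] [NOW_EPOCH] < lines of
#   "<namespace> <name> <ready_status> <lastTransitionTime>"
stuck_certs() {
  local threshold=${1:-3600}        # arbitrary default: one hour
  local now=${2:-$(date -u +%s)}    # injectable "now" for testing
  local ns name ready since ts
  while read -r ns name ready since; do
    [[ -z $ns || $ns == NAMESPACE ]] && continue   # skip blanks and a header row
    [[ $ready == True ]] && continue               # healthy, nothing to report
    if [[ -z $since ]] || ! ts=$(date -u -d "$since" +%s 2>/dev/null); then
      printf '%s/%s not ready (no usable transition time)\n' "$ns" "$name"
      continue
    fi
    if (( now - ts > threshold )); then
      printf '%s/%s not ready for %ds\n' "$ns" "$name" "$(( now - ts ))"
    fi
  done
}

# Example:
# kubectl get certificates -A --no-headers -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,READY:.status.conditions[?(@.type=="Ready")].status,SINCE:.status.conditions[?(@.type=="Ready")].lastTransitionTime' | stuck_certs 3600
```

A certificate that flips to False for a minute during renewal stays quiet, while one stuck for hours is reported with its age.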

CertificateRequest resources provide granular insight into what went wrong during issuance:

```bash
# List recent certificate requests
kubectl get certificaterequest -n production --sort-by=.metadata.creationTimestamp

# Examine the failed request
kubectl describe certificaterequest api-tls-2847d -n production

# Get detailed conditions from the request
kubectl get certificaterequest api-tls-2847d -n production -o jsonpath='{.status.conditions}' | jq
```

The Events section of the describe output contains the actual error messages from your issuer: ACME challenge failures, authorization errors, or webhook rejections. Cross-reference these events with the cert-manager controller logs for complete context.
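When a Certificate has accumulated several requests, picking the newest one by hand is error-prone. A minimal sketch of a hypothetical latest_request helper, assuming each CertificateRequest carries an ownerReference of kind Certificate pointing back at the Certificate that created it:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the name of the newest CertificateRequest owned by
# the given Certificate (prints nothing if none is found).
#
# latest_request NAMESPACE CERTIFICATE_NAME
latest_request() {
  local ns=$1 cert=$2
  # One "<request-name> <owning-certificate>" line per request, oldest first
  kubectl get certificaterequest -n "$ns" --sort-by=.metadata.creationTimestamp \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.ownerReferences[?(@.kind=="Certificate")].name}{"\n"}{end}' \
    | awk -v c="$cert" '$2 == c { latest = $1 } END { if (latest != "") print latest }'
}

# Example:
# kubectl describe certificaterequest "$(latest_request production api-tls)" -n production
```

Because the list is sorted oldest first, the last matching line wins, so awk only needs to remember one name.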

Common Failure Patterns

DNS propagation delays cause DNS-01 challenges to fail intermittently. The ACME server queries authoritative nameservers that may not have received the TXT record yet. This surfaces more often with DNS providers that use longer TTLs or global anycast networks:

```bash
# Check DNS propagation for DNS-01 challenges
dig +short TXT _acme-challenge.api.mycompany.io @8.8.8.8

# Verify the challenge record exists
kubectl get challenges -n production -o wide
```
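A spot check only shows what a single resolver returns at one moment. A minimal sketch that compares every resolver's answer against the value the challenge expects, using a hypothetical txt_propagated helper; it assumes dig is installed and that you read the expected TXT value from a DNS-01 Challenge's spec.key field.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every listed resolver returns EXPECTED
# among the TXT records for RECORD. Prints one status line per resolver.
#
# txt_propagated RECORD EXPECTED RESOLVER...
txt_propagated() {
  local record=$1 expected=$2
  shift 2
  local ns answer rc=0
  for ns in "$@"; do
    # dig +short prints each TXT value in double quotes; strip them
    answer=$(dig +short TXT "$record" "@$ns" | tr -d '"')
    if grep -qxF -- "$expected" <<< "$answer"; then
      echo "$ns: ok"
    else
      echo "$ns: missing"
      rc=1
    fi
  done
  return "$rc"
}

# Example: poll until the record is visible everywhere.
# Assumes a single DNS-01 challenge in the namespace.
# key=$(kubectl get challenges -n production -o jsonpath='{.items[0].spec.key}')
# until txt_propagated _acme-challenge.api.mycompany.io "$key" 8.8.8.8 1.1.1.1 9.9.9.9; do
#   sleep 30
# done
```

Checking the exact value rather than mere presence catches stale records left over from an earlier attempt.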

```bash
# Check propagation across multiple DNS servers
for ns in 8.8.8.8 1.1.1.1 9.9.9.9; do
  echo "Checking $ns:"
  dig +short TXT _acme-challenge.api.mycompany.io "@$ns"
done
```

ACME rate limits strike when you request too many certificates for the same domain. Let’s Encrypt enforces 50 certificates per registered domain per week, with additional limits on failed validations. Check your current standing:

```bash
# Review recent certificate orders
kubectl get orders -n production --sort-by=.metadata.creationTimestamp | tail -20

# Look for rate limit errors in events
kubectl get events -n production --field-selector reason=Failed | grep -i "rate"
```
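cert-manager cannot see Let’s Encrypt's counters directly, but counting the Orders created in the last seven days gives a rough sense of how much of the weekly budget this cluster is consuming. A minimal sketch with a hypothetical recent_orders helper, assuming GNU date. Let’s Encrypt counts issued certificates per registered domain across every client you run, failed Orders do not count toward that limit, and Orders that have already been cleaned up are missed, so treat the number as a local approximation only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count Orders whose creationTimestamp falls within the
# last 7 days. Requires GNU date.
#
# recent_orders [NOW_EPOCH] < lines of "<name> <creationTimestamp>"
recent_orders() {
  local now=${1:-$(date -u +%s)}
  local window=$(( 7 * 86400 ))
  local name created ts count=0
  while read -r name created; do
    [[ -z $name || -z $created ]] && continue
    ts=$(date -u -d "$created" +%s 2>/dev/null) || continue   # skip unparsable rows
    if (( now - ts < window )); then
      count=$(( count + 1 ))
    fi
  done
  echo "$count"
}

# Example:
# kubectl get orders -A -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.creationTimestamp}{"\n"}{end}' | recent_orders
```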

```bash
# Check order state and reason for rate limit indicators
kubectl get orders -n production -o jsonpath='{range .items[*]}{.metadata.name}: {.status.state} {.status.reason}{"\n"}{end}'
```

Webhook connectivity issues prevent the API server from validating cert-manager resources, so creating or updating Certificates and Issuers fails. The webhook must be reachable from the API server, and network policies or firewall rules can silently block this traffic:

```bash
# Verify webhook pod health
kubectl get pods -n cert-manager -l app.kubernetes.io/component=webhook

# Check webhook service endpoints
kubectl get endpoints cert-manager-webhook -n cert-manager
```
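The pod and endpoint checks can be folded into a single preflight that fails fast. A minimal sketch with a hypothetical webhook_preflight helper. It assumes the default Helm chart names (a cert-manager-webhook Service and ValidatingWebhookConfiguration). It also checks that cainjector has populated the webhook's caBundle, because without it the API server cannot verify the webhook's serving certificate.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that the webhook Service has ready endpoints and
# that its ValidatingWebhookConfiguration has a CA bundle. Returns 1 on failure.
webhook_preflight() {
  local ips ca rc=0
  ips=$(kubectl get endpoints cert-manager-webhook -n cert-manager \
    -o jsonpath='{.subsets[*].addresses[*].ip}')
  if [[ -n $ips ]]; then
    echo "endpoints: ok ($ips)"
  else
    echo "endpoints: none ready"
    rc=1
  fi
  ca=$(kubectl get validatingwebhookconfiguration cert-manager-webhook \
    -o jsonpath='{.webhooks[0].clientConfig.caBundle}')
  if [[ -n $ca ]]; then
    echo "caBundle: present"
  else
    echo "caBundle: empty"
    rc=1
  fi
  return "$rc"
}

# Example: webhook_preflight || kubectl logs -n cert-manager -l app.kubernetes.io/component=webhook --tail=50
```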

```bash
# Check webhook logs for TLS handshake or connection errors
kubectl logs -n cert-manager -l app.kubernetes.io/component=webhook --tail=50
```

Using cmctl for Debugging

The cert-manager CLI tool provides purpose-built debugging commands that simplify complex diagnostic workflows:

```bash
# Check that the cert-manager API (including the webhook) is ready
cmctl check api

# Inspect why a certificate isn't ready
cmctl status certificate api-tls -n production

# Manually trigger renewal for testing
cmctl renew api-tls -n production

# Approve a pending CertificateRequest (for manual approval workflows)
cmctl approve my-certificaterequest -n production
```

The cmctl status certificate command walks the entire resource chain (Certificate, CertificateRequest, Order, Challenge) and identifies exactly where the process stalled. This eliminates the need to trace relationships between resources by hand during incident response.
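When you trigger a renewal during testing, you also want to know when it has actually completed. The Ready condition usually stays True while the old certificate is still valid, so waiting on Ready alone can return immediately. A minimal sketch that instead polls the Certificate's status.revision field, assuming cert-manager increments it after each successful issuance; the renew_and_wait name, the polling interval, and the five-minute default timeout are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: trigger a renewal with cmctl and wait until
# status.revision increases, or give up after TIMEOUT seconds.
#
# renew_and_wait NAMESPACE NAME [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
renew_and_wait() {
  local ns=$1 name=$2 timeout=${3:-300} interval=${4:-5}
  local before after waited=0
  (( interval > 0 )) || interval=1   # avoid a busy loop that never advances
  before=$(kubectl get certificate "$name" -n "$ns" -o jsonpath='{.status.revision}')
  cmctl renew "$name" -n "$ns" || return 1
  while (( waited < timeout )); do
    after=$(kubectl get certificate "$name" -n "$ns" -o jsonpath='{.status.revision}')
    if (( ${after:-0} > ${before:-0} )); then
      echo "renewed: revision ${before:-0} -> $after"
      return 0
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  echo "timed out after ${timeout}s; run: cmctl status certificate $name -n $ns" >&2
  return 1
}
```

On timeout the helper points you at cmctl status certificate, which shows where in the chain the renewal stalled.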

Recovery Procedures

When certificates get stuck, systematic recovery beats deleting and recreating the Certificate resource. Follow this escalation path, starting with the least disruptive options:

```bash
# Delete failed challenges so cert-manager retries them
kubectl delete challenges -n production -l cert-manager.io/certificate-name=api-tls

# Clear stuck certificate requests
kubectl delete certificaterequest -n production -l cert-manager.io/certificate-name=api-tls

# Force re-issuance by deleting the secret (use with caution)
kubectl delete secret api-tls -n production
```

Pro Tip: Before deleting secrets, verify how your ingress controller handles a missing certificate. Until the new secret is issued, some controllers fail the TLS handshake while others fall back to a default certificate (ingress-nginx, for example, serves its self-signed default certificate), and clients will reject either outcome.
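Because each step is more disruptive than the last, it can be worth wrapping the escalation so that nothing is deleted by accident. A minimal sketch with hypothetical run and recover_cert helpers: it reuses the commands above unchanged (including their label selectors), prints what it would do by default, and executes only when APPLY=1 is set.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run wrapper: print commands unless APPLY=1 is set.
run() {
  if [[ ${APPLY:-0} == 1 ]]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

# recover_cert NAMESPACE NAME [LEVEL]
# LEVEL 1 deletes challenges, 2 also deletes CertificateRequests,
# 3 also deletes the Secret. Stops at the first failing command.
recover_cert() {
  local ns=$1 name=$2 level=${3:-1}
  if ! [[ $level =~ ^[1-3]$ ]]; then
    echo "usage: recover_cert NAMESPACE NAME [1|2|3]" >&2
    return 2
  fi
  # Level 1: delete failed challenges so cert-manager retries them
  run kubectl delete challenges -n "$ns" -l "cert-manager.io/certificate-name=$name" || return
  # Level 2: also clear stuck certificate requests
  if (( level >= 2 )); then
    run kubectl delete certificaterequest -n "$ns" -l "cert-manager.io/certificate-name=$name" || return
  fi
  # Level 3: also delete the Secret to force re-issuance (disruptive)
  if (( level >= 3 )); then
    run kubectl delete secret "$name" -n "$ns" || return
  fi
}

# Example: preview level 2, then apply it
# recover_cert production api-tls 2
# APPLY=1 recover_cert production api-tls 2
```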

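For persistent failures, temporarily increase the cert-manager controller's log verbosity. The controller accepts the standard --v verbosity flag, so one approach is to append a higher value to its arguments and roll the change back afterwards:

```bash
# Append --v=6 to the controller's arguments (assumes the controller is the
# first container, as in the default Helm chart; the last --v value wins)
kubectl patch deployment cert-manager -n cert-manager --type=json \
  -p='[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--v=6"}]'

# Watch logs during issuance
kubectl logs -n cert-manager -l app=cert-manager -f --tail=100

# Revert to the previous pod template once diagnosed
kubectl rollout undo deployment/cert-manager -n cert-manager
```

Watch the logs during the next issuance attempt to capture detailed error context, then revert the change as soon as you have a diagnosis to avoid excessive log volume in production.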

With these diagnostic techniques mastered, you’re equipped to handle certificate incidents confidently. The patterns covered throughout this guide—from architecture through monitoring and troubleshooting—form a complete operational framework for production TLS automation.

Key Takeaways

- Deploy cert-manager with Helm using production values including resource limits, PDBs, and proper webhook configuration before issuing any certificates

- Configure both staging and production Let’s Encrypt issuers, and always test new certificate configurations against staging first to avoid rate limit issues

- Set up Prometheus alerting on certificate expiration timestamps with a 14-day warning threshold to catch renewal failures before they impact production

- Use the cmctl CLI tool and Certificate status conditions as your primary debugging tools when certificate issuance fails