Production-Ready EKS: Multi-AZ Node Groups with Karpenter Autoscaling

Your EKS cluster works perfectly in staging, but production traffic is unpredictable. Some days you’re overprovisioned and burning money on nodes sitting idle at 20% utilization. Other days pods sit pending for minutes during traffic spikes while your managed node group’s ASG slowly spins up capacity. You’ve tuned the Cluster Autoscaler, adjusted scaling policies, and still find yourself choosing between cost efficiency and responsiveness.

The instinct is to pick a side: stick with managed node groups for their operational simplicity, or go all-in on Karpenter for its speed and flexibility. But this binary thinking misses the architecture that actually works in production—one where managed node groups provide predictable baseline capacity for your core services while Karpenter handles the burst traffic that would otherwise leave you overprovisioned or underserved.

This hybrid approach isn’t a compromise. It’s a recognition that different workloads have fundamentally different scaling characteristics. Your stateful services, monitoring stack, and cluster-critical components need the stability of pre-provisioned capacity. Your batch jobs, async workers, and traffic-driven services benefit from nodes that materialize in seconds and disappear when demand drops.

The difference between a cluster that burns 40% of its compute budget on slack capacity and one that maintains sub-minute pod scheduling during 10x traffic spikes comes down to understanding where each approach excels—and architecting your node strategy accordingly.

The Node Group Spectrum: From Managed to Fully Dynamic

Every production EKS cluster faces the same fundamental tension: provision enough capacity to handle peak load, or accept the risk of pods stuck in pending states during traffic spikes. Managed node groups push teams toward the first option, while Karpenter enables the second. Understanding where your workloads fall on this spectrum determines both your infrastructure costs and your on-call experience.

The Overprovisioning Tax

EKS managed node groups operate on a simple model: you define instance types, desired capacity, and scaling thresholds. The Auto Scaling Group handles the rest. This simplicity comes with a hidden cost.

Consider a cluster running steady-state workloads that occasionally burst to 3x capacity during batch processing. With managed node groups alone, you have two choices: maintain headroom for burst capacity (paying for idle nodes 80% of the time) or configure aggressive scaling policies that inevitably lag behind actual demand. Most teams choose headroom, accepting a 30-50% overprovisioning tax as the cost of reliability.

The math gets worse with diverse workload requirements. A single node group optimized for memory-intensive workloads wastes capacity when running CPU-bound jobs. Multiple node groups solve this but multiply operational complexity and increase the minimum viable cluster size.

Just-in-Time Economics

Karpenter inverts this model. Instead of pre-provisioning capacity and hoping pods fit, Karpenter watches for unschedulable pods and provisions nodes specifically for them. A pod requesting 4 vCPUs and 16GB of memory triggers a launch request for an appropriately sized instance within seconds, with the node typically ready in under a minute rather than the several minutes typical of ASG scaling events.

This approach eliminates the overprovisioning tax for variable workloads. Karpenter’s bin-packing algorithms consolidate pods onto fewer, larger instances during steady state, then provision burst capacity on-demand. The consolidation feature actively terminates underutilized nodes, moving their pods elsewhere—something managed node groups never do.

💡 Pro Tip: Karpenter’s consolidation respects pod disruption budgets. Ensure your workloads define appropriate PDBs before enabling aggressive consolidation policies.

The Hybrid Architecture

Production clusters benefit from combining both approaches. Managed node groups provide baseline capacity: predictable, always-available nodes for core platform services, system workloads, and minimum viable application replicas. Karpenter handles everything above that baseline—burst traffic, batch jobs, development workloads, and any pod that doesn’t fit the baseline profile.

This hybrid model answers the workload placement question directly:

- Managed node groups: Control plane add-ons, monitoring stacks, service meshes, databases with persistent volumes, and workloads requiring specific AMIs or launch templates

- Karpenter: Stateless application replicas beyond minimum count, batch processing, CI/CD runners, development namespaces, and any workload tolerant of node churn

The decision framework reduces to a single question: does this workload require guaranteed capacity, or can it wait seconds for a node? Most production traffic patterns include both types.

With this mental model established, the next step is designing the managed node group foundation that provides your cluster’s baseline resilience.

Designing Multi-AZ Node Groups for Baseline Workloads

Before configuring dynamic autoscaling with Karpenter, you need a stable foundation: managed node groups sized for your predictable baseline load. This baseline absorbs steady-state workloads while Karpenter handles burst capacity, reducing churn and improving cluster stability. Getting this foundation right prevents the costly cycle of over-provisioning during quiet periods and under-provisioning during predictable peaks.

Calculating Baseline Capacity

Start by analyzing historical pod resource requests across a representative time window—two weeks captures most patterns while excluding anomalies. Query your monitoring system for the P50 (median) CPU and memory requests:

    # Point-in-time usage snapshot, summed across all containers.
    # Columns with -A --containers: NAMESPACE POD NAME CPU MEMORY
    kubectl top pods -A --containers --no-headers | \
      awk '{cpu += $4; mem += $5} END {print "CPU (m):", cpu, "Memory (Mi):", mem}'

Note that kubectl top reports current usage rather than requests, and only for a single moment. For production clusters, pull metrics from Prometheus or CloudWatch Container Insights rather than relying on point-in-time snapshots. Target your baseline node groups at 60-70% of peak utilization; this leaves headroom for pod scheduling while Karpenter provisions additional capacity. Going below 60% wastes resources on idle capacity; exceeding 70% risks scheduling failures during traffic spikes before Karpenter can respond.

A cluster running 50 pods averaging 500m CPU and 1Gi memory each requires roughly 25 vCPUs (50 × 0.5) and 50Gi memory (50 × 1Gi) at baseline. Account for system pods (CoreDNS, kube-proxy, CSI drivers) consuming approximately 10-15% overhead. Don’t forget DaemonSets: these run on every node and can significantly impact available capacity, especially monitoring agents and log collectors that often request 100-200m CPU per node.

Multi-AZ Node Group Configuration

Distribute baseline capacity evenly across availability zones to survive zone failures without capacity loss. This distribution strategy ensures that losing any single AZ leaves sufficient capacity to handle critical workloads while replacement nodes spin up. The following eksctl configuration creates a production-ready node group spanning three AZs:

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig

    metadata:
      name: my-cluster
      region: us-east-1
      version: "1.29"

    managedNodeGroups:
      - name: baseline-workloads
        instanceType: m6i.xlarge
        minSize: 3
        maxSize: 9
        desiredCapacity: 6
        volumeSize: 100
        volumeType: gp3
        availabilityZones:
          - us-east-1a
          - us-east-1b
          - us-east-1c
        labels:
          workload-type: baseline
          provisioner: managed
        taints:
          - key: baseline-only
            value: "true"
            effect: NoSchedule
        iam:
          withAddonPolicies:
            autoScaler: true
            cloudWatch: true

Setting minSize: 3 with three AZs lets the Auto Scaling Group keep at least one node per zone. The maxSize: 9 allows the Cluster Autoscaler to add capacity within managed groups before Karpenter intervenes, which is useful for gradual scaling during predictable daily patterns. EKS managed node groups balance nodes across the specified AZs automatically, but you should verify this distribution periodically using kubectl get nodes --label-columns=topology.kubernetes.io/zone.

💡 Pro Tip: Use m6i or m7i instances for general-purpose baseline workloads. Their balanced CPU-to-memory ratio (1:4) matches typical containerized application profiles, and Intel-based instances offer better Spot availability than Graviton in most regions.

Instance Type Selection

For baseline workloads, prioritize consistency over cost optimization. Select instance types based on:

- vCPU-to-memory ratio: Match your workload profile (compute-heavy: c-series, memory-heavy: r-series, balanced: m-series)

- EBS bandwidth: Ensure sufficient throughput for your storage patterns. m6i.xlarge bursts up to 10 Gbps of EBS bandwidth with a lower sustained baseline, so check the EC2 documentation for current figures

- Network performance: Up to 12.5 Gbps on m6i.xlarge handles most inter-pod traffic

- Generation consistency: Stick with current-generation instances (6th or 7th gen) for predictable performance characteristics and longer availability windows

Avoid mixing instance types within baseline node groups. Homogeneous pools simplify capacity planning and prevent scheduling imbalances where larger nodes accumulate pods. Mixed instance types also complicate cost attribution and make it harder to predict scaling behavior. Reserve instance diversity for Karpenter-managed pools where flexibility enables cost optimization.

Workload Isolation with Taints

The baseline-only taint in the configuration above prevents Karpenter-managed workloads from landing on baseline nodes. Pods targeting these nodes require a matching toleration:

    spec:
      tolerations:
        - key: baseline-only
          operator: Equal
          value: "true"
          effect: NoSchedule
      nodeSelector:
        workload-type: baseline

This separation ensures baseline nodes serve only predictable workloads while burst traffic routes to Karpenter-provisioned capacity. The combination of taints and node selectors creates clear boundaries between infrastructure tiers. Consider using PreferNoSchedule instead of NoSchedule during initial rollout. This softer constraint allows workloads to land on baseline nodes if Karpenter capacity is unavailable, preventing outages while you tune the system.
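As a rough sketch of the softer rollout setting, the node group's taint could be declared as follows. This assumes the same baseline-workloads node group and baseline-only key from the eksctl configuration above; only the effect changes.

```yaml
managedNodeGroups:
  - name: baseline-workloads
    taints:
      - key: baseline-only
        value: "true"
        # Soft constraint: the scheduler avoids these nodes for pods without a
        # matching toleration, but still uses them when nothing else fits.
        effect: PreferNoSchedule
```

Once Karpenter capacity has proven reliable, switching the effect back to NoSchedule restores the hard boundary.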

For workloads requiring guaranteed baseline placement, add pod anti-affinity rules to spread replicas across AZs, ensuring zone failures don’t take down all instances of a critical service simultaneously.
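A minimal sketch of such an anti-affinity rule is shown below. The app: critical-service label is a hypothetical placeholder for your own pod label; the toleration and node selector are the ones introduced above.

```yaml
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: critical-service   # hypothetical pod label
          # At most one matching pod per availability zone
          topologyKey: topology.kubernetes.io/zone
  tolerations:
    - key: baseline-only
      operator: Equal
      value: "true"
      effect: NoSchedule
  nodeSelector:
    workload-type: baseline
```

Because the rule is required, it caps the workload at one replica per zone. If you run more replicas than zones, preferredDuringSchedulingIgnoredDuringExecution or a topology spread constraint is likely the better fit.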

With baseline capacity established, Karpenter handles everything beyond—scaling from zero to hundreds of nodes for unpredictable demand without pre-provisioning expensive reserved capacity.

Karpenter NodePools: Configuring Dynamic Scaling

While managed node groups provide stability for baseline workloads, Karpenter transforms how your cluster responds to demand spikes. Karpenter provisions right-sized nodes in under 60 seconds by working directly with EC2’s fleet API, bypassing the ASG abstraction entirely. This direct integration enables intelligent instance selection across hundreds of instance types, optimizing for both cost and workload requirements.

Installing Karpenter

Karpenter requires specific IAM permissions to provision EC2 instances and interact with your EKS cluster. The Helm installation handles the controller deployment, but you must first establish the IAM infrastructure. This includes creating an IAM role for the Karpenter controller with permissions to create, tag, and terminate EC2 instances, as well as a separate node role that provisioned instances will assume.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: karpenter
      namespace: karpenter
      annotations:
        # AWS account IDs are 12 digits; replace with your own
        eks.amazonaws.com/role-arn: arn:aws:iam::123456789012:role/KarpenterControllerRole-my-cluster
    ---
    # Install via Helm with IRSA configured:
    #
    # helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter \
    #   --version 0.37.0 \
    #   --namespace karpenter --create-namespace \
    #   --set settings.clusterName=my-cluster \
    #   --set settings.interruptionQueue=my-cluster-interruption \
    #   --set controller.resources.requests.cpu=1 \
    #   --set controller.resources.requests.memory=1Gi

The interruption queue enables Karpenter to handle Spot instance termination notices gracefully, draining nodes before EC2 reclaims them. Create this SQS queue and configure EventBridge rules to forward EC2 Spot interruption warnings, instance state changes, and health events. Without this queue, Spot interruptions result in abrupt pod terminations rather than graceful migrations.
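One way to create the queue and its first rule is a small CloudFormation template. The sketch below assumes you manage this infrastructure with CloudFormation and that the queue name matches the settings.interruptionQueue value passed to Helm; the resource names are illustrative.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Resources:
  InterruptionQueue:
    Type: AWS::SQS::Queue
    Properties:
      QueueName: my-cluster-interruption   # must match settings.interruptionQueue
      MessageRetentionPeriod: 300          # seconds; stale notices have no value

  InterruptionQueuePolicy:
    Type: AWS::SQS::QueuePolicy
    Properties:
      Queues:
        - !Ref InterruptionQueue           # Ref on a queue returns its URL
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - events.amazonaws.com
                - sqs.amazonaws.com
            Action: sqs:SendMessage
            Resource: !GetAtt InterruptionQueue.Arn

  SpotInterruptionRule:
    Type: AWS::Events::Rule
    Properties:
      EventPattern:
        source:
          - aws.ec2
        detail-type:
          - EC2 Spot Instance Interruption Warning
      Targets:
        - Id: KarpenterInterruptionQueue
          Arn: !GetAtt InterruptionQueue.Arn
```

The same rule shape can be repeated for the other event types mentioned above, such as EC2 Instance State-change Notification (source aws.ec2) and AWS Health Event (source aws.health). The Karpenter controller role also needs permission to read and delete messages on this queue.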

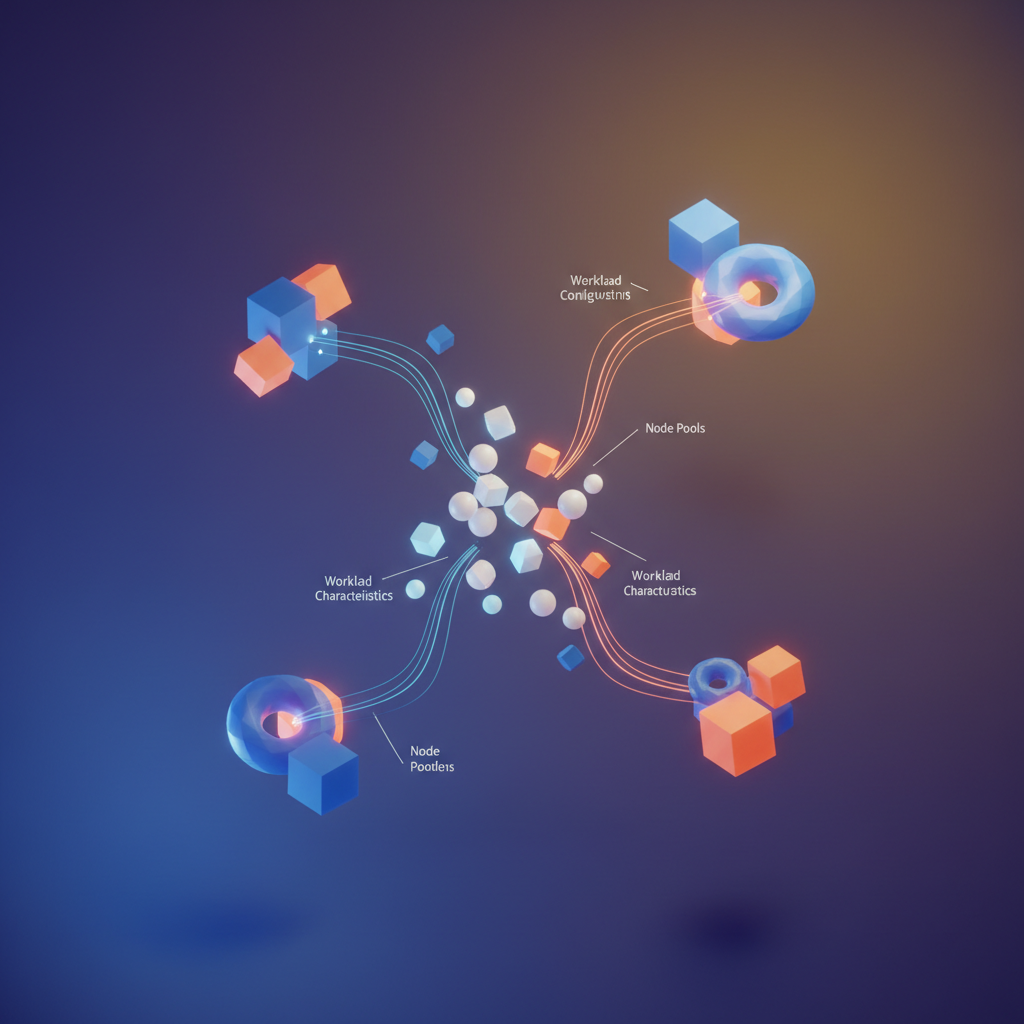

NodePool and EC2NodeClass Configuration

Karpenter uses two custom resources: NodePool defines scheduling constraints and limits, while EC2NodeClass specifies AWS-specific provisioning details. This separation allows you to create multiple NodePools sharing common EC2 configurations, reducing duplication while enabling distinct scaling behaviors for different workload types.

    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: general-workloads
    spec:
      template:
        spec:
          requirements:
            - key: kubernetes.io/arch
              operator: In
              values: ["amd64"]
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot", "on-demand"]
            - key: karpenter.k8s.aws/instance-category
              operator: In
              values: ["c", "m", "r"]
            - key: karpenter.k8s.aws/instance-generation
              operator: Gt
              values: ["5"]
          nodeClassRef:
            apiVersion: karpenter.k8s.aws/v1beta1
            kind: EC2NodeClass
            name: default
      limits:
        cpu: 1000
        memory: 2000Gi
      disruption:
        consolidationPolicy: WhenUnderutilized
        # v1beta1 accepts consolidateAfter only with WhenEmpty. On the v1 API,
        # pair it with WhenEmptyOrUnderutilized instead:
        # consolidateAfter: 30s
    ---
    apiVersion: karpenter.k8s.aws/v1beta1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      amiFamily: AL2023
      role: KarpenterNodeRole-my-cluster
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: my-cluster
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: my-cluster
      blockDeviceMappings:
        - deviceName: /dev/xvda
          ebs:
            volumeSize: 100Gi
            volumeType: gp3
            iops: 3000
            throughput: 125

The instance category and generation requirements tell Karpenter to consider any compute-optimized, general-purpose, or memory-optimized instance from generation 6 or newer. This flexibility allows Karpenter to find capacity across 50+ instance types, dramatically improving Spot availability. The limits section acts as a guardrail, preventing runaway scaling from consuming excessive resources during demand spikes or misconfigurations.

💡 Pro Tip: Requiring an instance generation greater than 5 excludes fifth-generation and older instance types, many of which have slower networking or predate Nitro entirely. This single constraint eliminates dozens of suboptimal choices while maintaining broad instance selection.

Spot Integration and Consolidation

The capacity-type requirement enables Spot instances as the preferred option with on-demand fallback. Karpenter’s allocation strategy uses price-capacity-optimized selection, choosing instances with both low interruption rates and competitive pricing. When Spot capacity becomes unavailable, Karpenter automatically falls back to on-demand instances, ensuring workloads continue running without manual intervention.

Consolidation prevents cluster sprawl by continuously evaluating whether workloads can be packed onto fewer or smaller nodes. The WhenUnderutilized policy identifies nodes running below capacity, taints them so no new pods land there, and drains their pods before terminating the node. A short consolidateAfter window such as 30 seconds dampens thrashing during brief demand fluctuations while still responding quickly to sustained changes in resource utilization. Note that the v1beta1 API accepts consolidateAfter only with WhenEmpty, so combining it with underutilization-based consolidation requires the v1 API's WhenEmptyOrUnderutilized policy.

    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: batch-processing
    spec:
      template:
        spec:
          requirements:
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot"]
          taints:
            - key: workload-type
              value: batch
              effect: NoSchedule
          nodeClassRef:
            apiVersion: karpenter.k8s.aws/v1beta1
            kind: EC2NodeClass
            name: default
      disruption:
        consolidationPolicy: WhenEmpty
        consolidateAfter: 5m

For batch workloads tolerant of interruption, a dedicated Spot-only NodePool with taints ensures cost-sensitive jobs don’t compete with production services for on-demand capacity. The WhenEmpty consolidation policy paired with a longer consolidation window suits batch patterns where nodes may sit idle between job submissions. This configuration can reduce compute costs by 60-90% compared to on-demand pricing for appropriate workloads.

Consider creating separate NodePools for distinct workload profiles: latency-sensitive services might require on-demand instances with specific instance types, while machine learning training jobs benefit from GPU instances provisioned exclusively through Spot. Each NodePool can enforce resource limits independently, providing cost controls at the workload category level rather than cluster-wide.
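As an illustration, a NodePool for latency-sensitive services might look like the sketch below. The pool name, instance types, and limit values are assumptions chosen for the example, not recommendations; it reuses the default EC2NodeClass defined earlier.

```yaml
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: latency-sensitive          # hypothetical pool name
spec:
  template:
    spec:
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]    # no Spot for latency-critical services
        - key: node.kubernetes.io/instance-type
          operator: In
          values: ["c6i.2xlarge", "c6i.4xlarge"]   # illustrative choices
      nodeClassRef:
        apiVersion: karpenter.k8s.aws/v1beta1
        kind: EC2NodeClass
        name: default
  limits:
    cpu: 200                       # illustrative per-pool ceiling
    memory: 400Gi
  disruption:
    consolidationPolicy: WhenEmpty
    consolidateAfter: 10m
```

Because limits are set per NodePool, a runaway deployment in this category stops at 200 vCPUs without affecting the budget of the batch or general pools.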

With nodes provisioning dynamically based on pending pod requirements, the next challenge becomes directing those pods to the appropriate nodes. Workload scheduling through node selectors, affinities, and topology spread constraints ensures your applications land on nodes matching their reliability and performance requirements.

Workload Scheduling: Directing Pods to the Right Nodes

With baseline node groups and Karpenter NodePools in place, the next challenge is ensuring pods land on the right infrastructure. Effective scheduling configuration prevents critical workloads from competing with burst traffic and maximizes the investment in your multi-tier node strategy. Kubernetes provides several complementary mechanisms for controlling pod placement, each suited to different scheduling requirements.

Node Selectors and Affinity for Workload Tiers

The simplest approach uses node selectors to pin workloads to specific node types. For baseline workloads that need predictable performance, target your managed node groups directly:

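    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: payment-processor
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: payment-processor
      template:
        metadata:
          labels:
            app: payment-processor
        spec:
          # Must match the labels set on the managed node group
          nodeSelector:
            workload-type: baseline
          # Required because baseline nodes carry the baseline-only taint
          tolerations:
            - key: baseline-only
              operator: Equal
              value: "true"
              effect: NoSchedule
          containers:
            - name: processor
              image: 123456789012.dkr.ecr.us-east-1.amazonaws.com/payment-processor:v2.1.0
              resources:
                requests:
                  cpu: "500m"
                  memory: "1Gi"
                limits:
                  cpu: "1000m"
                  memory: "2Gi"

Node selectors enforce hard requirements: pods will remain pending indefinitely if no matching node exists. This behavior makes them ideal for workloads that absolutely must run on specific infrastructure, but requires careful capacity planning to avoid scheduling deadlocks. The selector must use the labels actually present on the node group (workload-type: baseline here), and the pod needs a toleration for the baseline-only taint, or it will never schedule.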

For burst workloads that Karpenter provisions, use node affinity with preferences rather than requirements. This allows pods to schedule on baseline nodes during low utilization (provided they tolerate the baseline taint) while preferring Karpenter-provisioned capacity during scale events:

    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 80
              preference:
                matchExpressions:
                  - key: karpenter.sh/nodepool
                    operator: In
                    values:
                      - general-workloads
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64

The weight parameter ranges from 1 to 100, allowing you to express relative preferences across multiple affinity rules. For each candidate node, the scheduler adds up the weights of the preferences that node satisfies and factors the total into its score, so higher weights pull harder.

Pod Topology Spread for High Availability

Multi-AZ deployments require topology spread constraints to prevent pods from clustering in a single availability zone. Without explicit constraints, the scheduler applies only its soft default spreading, which can still leave replicas unevenly distributed and compromise fault tolerance:

    spec:
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: topology.kubernetes.io/zone
          whenUnsatisfiable: DoNotSchedule
          labelSelector:
            matchLabels:
              app: api-gateway
        - maxSkew: 2
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: ScheduleAnyway
          labelSelector:
            matchLabels:
              app: api-gateway

The zone constraint uses DoNotSchedule to enforce strict distribution, while the hostname constraint uses ScheduleAnyway to prefer spreading across nodes without blocking scheduling entirely. This layered approach balances availability guarantees with scheduling flexibility.

💡 Pro Tip: Set maxSkew: 1 for stateless services that need even distribution. For stateful workloads with zone-aware storage, use maxSkew: 2 to allow temporary imbalance during rolling updates.

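Consider the interaction between topology spread and cluster autoscaling. The scheduler evaluates spread constraints only when placing a pod and never rebalances running pods, so skew that builds up while Karpenter is still adding nodes persists until pods are rescheduled. Setting minDomains on a DoNotSchedule constraint tells the scheduler how many zones the workload should span: while fewer eligible zones have nodes, the global minimum is treated as zero, so additional replicas stay pending until Karpenter provisions capacity in a missing zone instead of piling into the zones that already exist.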
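A minimal sketch of minDomains on the zone constraint, assuming a three-zone cluster and the api-gateway label used above. The field is only honored together with whenUnsatisfiable: DoNotSchedule.

```yaml
spec:
  topologySpreadConstraints:
    - maxSkew: 1
      minDomains: 3                        # assumed: the cluster spans three zones
      topologyKey: topology.kubernetes.io/zone
      whenUnsatisfiable: DoNotSchedule     # minDomains requires DoNotSchedule
      labelSelector:
        matchLabels:
          app: api-gateway
```

With this in place, a second replica cannot land in a zone that already hosts one while another of the three zones has no node yet. It stays pending, which gives Karpenter the signal to launch a node in the missing zone.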

Priority Classes for Resource Contention

When cluster resources become constrained, priority classes determine which pods survive and which get preempted. This mechanism becomes critical during sudden traffic spikes when Karpenter hasn’t yet provisioned additional capacity:

    apiVersion: scheduling.k8s.io/v1
    kind: PriorityClass
    metadata:
      name: critical-baseline
    value: 1000000
    globalDefault: false
    description: "Critical workloads that must run on baseline capacity"
    preemptionPolicy: PreemptLowerPriority
    ---
    apiVersion: scheduling.k8s.io/v1
    kind: PriorityClass
    metadata:
      name: burst-standard
    value: 100000
    globalDefault: true
    description: "Standard burst workloads eligible for spot capacity"
    preemptionPolicy: PreemptLowerPriority

Assign the critical-baseline class to payment processing, authentication services, and other workloads that cannot tolerate interruption. Keep custom priority values at or below 1000000000; Kubernetes reserves the range above that for system-critical components.

The preemptionPolicy field controls whether higher-priority pods can evict lower-priority ones. Setting Never creates priority without preemption, useful for batch jobs that should queue rather than disrupt running workloads.
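A non-preempting class could be sketched as follows. The class name and value are illustrative assumptions; the value only needs to sit below the burst-standard class defined above.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: batch-queued               # hypothetical class name
value: 50000                       # illustrative; lower than burst-standard
globalDefault: false
# Pods wait in the scheduling queue instead of evicting running pods
preemptionPolicy: Never
description: "Batch jobs that queue for capacity rather than preempt"
```

Pods in this class are still ordered ahead of lower-priority pending pods in the queue, and they can themselves be preempted by higher-priority pods.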

GPU and Specialized Workloads

For ML inference or video transcoding workloads, combine node selectors with resource requests to target GPU-enabled nodes provisioned by Karpenter:

    spec:
      nodeSelector:
        karpenter.k8s.aws/instance-gpu-manufacturer: nvidia
      containers:
        - name: inference
          resources:
            limits:
              nvidia.com/gpu: 1

Karpenter automatically provisions GPU instances when pending pods request these resources, provided your NodePool includes GPU instance types in the allowed list. GPU resources are non-compressible: unlike CPU, they cannot be overcommitted, so requests and limits should always match.

For workloads requiring specific GPU architectures, add selectors for instance generation or GPU memory. Karpenter exposes labels like karpenter.k8s.aws/instance-gpu-memory and karpenter.k8s.aws/instance-gpu-count that enable precise targeting of instance capabilities.
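Because a plain nodeSelector only supports exact matches, range-style targeting needs node affinity. The sketch below assumes the GPU memory label is expressed in MiB and uses an illustrative threshold; check the label values on your own nodes before relying on it.

```yaml
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: karpenter.k8s.aws/instance-gpu-manufacturer
                operator: In
                values: ["nvidia"]
              - key: karpenter.k8s.aws/instance-gpu-count
                operator: In
                values: ["1"]
              # Gt compares integers; value assumed to be MiB of GPU memory
              - key: karpenter.k8s.aws/instance-gpu-memory
                operator: Gt
                values: ["16000"]
  containers:
    - name: inference
      resources:
        limits:
          nvidia.com/gpu: 1
```

All expressions inside a single matchExpressions list must hold at once, so this selects single-GPU NVIDIA instances with more than roughly 16 GiB of GPU memory.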

With scheduling policies distributing workloads across your node tiers, the next step is building observability to track provisioning decisions and their cost implications.

Observability: Monitoring Node Provisioning and Costs

Production Karpenter deployments demand visibility into provisioning decisions, node lifecycle events, and their cost implications. Without proper observability, you’re flying blind when capacity issues arise during peak traffic. A comprehensive monitoring strategy combines Karpenter’s native metrics, AWS CloudWatch integration, and disciplined cost allocation practices.

Karpenter Metrics That Matter

Karpenter exposes Prometheus metrics that reveal provisioning behavior. The most critical metrics for production monitoring:

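- karpenter_provisioner_scheduling_duration_seconds: Time from pod pending to node launch decision

- karpenter_nodes_created_total: Node creation rate by NodePool and instance type

- karpenter_nodes_terminated_total: Consolidation and expiration activity

- karpenter_deprovisioning_actions_performed_total: Tracks consolidation effectiveness

- karpenter_cloudprovider_errors_total: Surfaces API failures that block provisioning

Metric names and labels have changed between Karpenter releases (for example, deprovisioning metrics were renamed to disruption metrics), so confirm them against your controller's /metrics endpoint.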

Beyond these core metrics, monitor karpenter_pods_state to track pending pod counts and karpenter_nodeclaims_disrupted to understand why nodes are being replaced. These secondary metrics often reveal subtle issues before they become user-facing problems.

Deploy a ServiceMonitor to scrape these metrics:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: karpenter
      namespace: karpenter
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: karpenter
      endpoints:
        - port: http-metrics
          interval: 30s
          path: /metrics

CloudWatch Dashboard for Node Lifecycle

Combine Karpenter metrics with CloudWatch Container Insights for a unified view. This dashboard configuration tracks node provisioning latency alongside cluster capacity, giving operators a single pane of glass for capacity management:

    {
      "widgets": [
        {
          "type": "metric",
          "properties": {
            "title": "Node Provisioning Latency (p99)",
            "region": "us-east-1",
            "metrics": [
              ["Karpenter", "scheduling_duration_seconds", "quantile", "0.99"]
            ],
            "period": 300,
            "stat": "Average"
          }
        },
        {
          "type": "metric",
          "properties": {
            "title": "Nodes by Lifecycle",
            "region": "us-east-1",
            "metrics": [
              ["AWS/AutoScaling", "GroupInServiceInstances", "AutoScalingGroupName", "eks-baseline-ng"],
              ["Karpenter", "nodes_created_total", {"label": "Karpenter Nodes"}]
            ]
          }
        }
      ]
    }

The Karpenter namespace assumes you export the Prometheus metrics to CloudWatch under that name, and GroupInServiceInstances requires group metrics collection to be enabled on the Auto Scaling group. Consider adding widgets for Spot interruption rates and instance type distribution. These visualizations help identify whether your instance diversification strategy is working or if you’re over-relying on specific instance families.

Cost Allocation Through Tagging

Accurate cost attribution requires consistent tagging at both the Kubernetes and AWS levels. Node labels belong on the NodePool template, while AWS resource tags are set on the EC2NodeClass it references:

    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: workloads
    spec:
      template:
        metadata:
          labels:
            cost-center: platform
            environment: production
        spec:
          requirements:
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot", "on-demand"]
          nodeClassRef:
            apiVersion: karpenter.k8s.aws/v1beta1
            kind: EC2NodeClass
            name: default
    ---
    apiVersion: karpenter.k8s.aws/v1beta1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      # Other fields (amiFamily, role, selectors) stay as defined earlier
      tags:
        Team: platform-engineering
        Environment: production
        ManagedBy: karpenter
        CostCenter: "CC-1234"

Activate these tags as cost allocation tags in the AWS Billing console so Cost Explorer can correlate them with actual spend. The combination of cost-center labels and AWS tags provides both cluster-level and billing-level visibility. For granular analysis, integrate with tools like Kubecost or OpenCost, which can attribute costs down to individual workloads by correlating node costs with pod resource consumption.

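💡 Pro Tip: Instances launched by EKS managed node groups carry an eks:cluster-name tag, and Karpenter applies its own cluster and NodePool tags to the instances it launches. Once activated for cost allocation, these let you filter costs by cluster in Cost Explorer without defining extra tags.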

Alerting on Capacity Constraints

Proactive alerts prevent capacity emergencies. This PrometheusRule triggers when provisioning consistently fails or latency degrades:

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: karpenter-alerts
    spec:
      groups:
        - name: karpenter
          rules:
            - alert: KarpenterProvisioningLatencyHigh
              # Aggregate bucket rates by le before taking the quantile
              expr: histogram_quantile(0.99, sum by (le) (rate(karpenter_provisioner_scheduling_duration_seconds_bucket[5m]))) > 120
              for: 5m
              labels:
                severity: warning
              annotations:
                summary: "Karpenter provisioning latency exceeds 2 minutes"
            - alert: KarpenterNodeLaunchFailures
              expr: increase(karpenter_cloudprovider_errors_total{method="Create"}[10m]) > 5
              labels:
                severity: critical
              annotations:
                summary: "Multiple node launch failures detected"

Supplement these alerts with capacity-focused rules that trigger when pending pods remain unscheduled for extended periods. An alert on sum(karpenter_pods_state{phase="Pending"}) > 0 sustained for more than ten minutes catches scenarios where Karpenter cannot find suitable capacity due to constraints or quota exhaustion. The label carrying the pod phase may differ by release, so confirm it against your metrics.
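A pending-pod rule along those lines might look like the following sketch. It assumes karpenter_pods_state exposes a phase label with the value Pending; the rule and alert names are hypothetical.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: karpenter-capacity-alerts      # hypothetical name
  namespace: karpenter
spec:
  groups:
    - name: karpenter-capacity
      rules:
        - alert: PodsPendingTooLong
          # Assumes a phase label; adjust to the labels your version emits
          expr: sum(karpenter_pods_state{phase="Pending"}) > 0
          for: 10m
          labels:
            severity: warning
          annotations:
            summary: "Pods have been pending for more than 10 minutes"
```

The for: 10m clause keeps the alert quiet during normal scale-up, when pods are pending only for the time it takes a node to join.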

These alerts give your on-call team early warning before users experience pod scheduling delays. With observability in place, you’re prepared to diagnose the failure modes we’ll examine next.

Failure Modes and Recovery Patterns

Production EKS clusters face inevitable infrastructure failures—AZ outages, spot interruptions, and node health issues. The difference between a minor blip and a major incident lies in your preparation. This section covers the resilience patterns that keep applications available when infrastructure fails.

Handling AZ Failures with Pod Disruption Budgets

When an entire availability zone becomes unavailable, Kubernetes must reschedule pods without overwhelming remaining capacity. Pod Disruption Budgets (PDBs) prevent cascading failures by controlling how many pods can be unavailable simultaneously during voluntary disruptions like node drains, cluster upgrades, or rebalancing operations.

The key distinction lies in choosing between minAvailable and maxUnavailable. Use minAvailable when you have a hard requirement for minimum capacity—such as maintaining quorum in a distributed system. Use maxUnavailable when you want to control the rate of disruption while allowing the scheduler more flexibility.

    apiVersion: policy/v1
    kind: PodDisruptionBudget
    metadata:
      name: api-gateway-pdb
      namespace: production
    spec:
      minAvailable: 2
      selector:
        matchLabels:
          app: api-gateway
    ---
    apiVersion: policy/v1
    kind: PodDisruptionBudget
    metadata:
      name: payment-service-pdb
      namespace: production
    spec:
      maxUnavailable: 1
      selector:
        matchLabels:
          app: payment-service

For multi-AZ resilience, combine PDBs with topology spread constraints. This ensures pods distribute across zones, so an AZ failure affects only a fraction of your replicas. The maxSkew value of 1 enforces even distribution, while DoNotSchedule prevents the scheduler from creating imbalanced deployments:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: api-gateway
      namespace: production
    spec:
      replicas: 6
      selector:
        matchLabels:
          app: api-gateway
      template:
        metadata:
          labels:
            app: api-gateway
        spec:
          topologySpreadConstraints:
            - maxSkew: 1
              topologyKey: topology.kubernetes.io/zone
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchLabels:
                  app: api-gateway
          # containers omitted for brevity

With six replicas spread across three AZs (two per zone), losing one zone leaves four healthy pods, well above the PDB minimum of two. This layered approach ensures both planned maintenance and unplanned failures respect your availability requirements.

Spot Instance Interruption Handling

Karpenter handles spot interruptions automatically through its interruption controller, which watches for AWS interruption warnings and cordons nodes before termination. The two-minute warning window provides enough time to gracefully drain pods and schedule replacements. Configure your NodePool to maximize recovery options:

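    apiVersion: karpenter.sh/v1
    kind: NodePool
    metadata:
      name: spot-workloads
    spec:
      disruption:
        consolidationPolicy: WhenEmptyOrUnderutilized
        consolidateAfter: 1m
      template:
        spec:
          nodeClassRef:
            group: karpenter.k8s.aws
            kind: EC2NodeClass
            name: default
          requirements:
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot"]
            - key: node.kubernetes.io/instance-type
              operator: In
              # Shortened for readability; list more types in production
              values: ["m5.large", "m5a.large", "m6i.large", "m6a.large", "m5.xlarge"]

This manifest uses the karpenter.sh/v1 API available from Karpenter 1.0, where WhenEmptyOrUnderutilized replaces the v1beta1 WhenUnderutilized policy and nodeClassRef takes a group instead of an apiVersion.

💡 Pro Tip: Specify at least 10-15 instance types across multiple families. This dramatically increases spot capacity availability and reduces interruption frequency by letting Karpenter choose from deeper capacity pools.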

Applications should also set appropriate terminationGracePeriodSeconds values that allow in-flight requests to complete. For long-running batch jobs, consider implementing checkpoint mechanisms that save progress before termination.
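A sketch of a pod spec tuned for graceful shutdown is shown below. The container name, image, and timings are placeholders, and the preStop command assumes the image ships a sleep binary.

```yaml
spec:
  # Keep this well inside the two-minute Spot warning so draining can finish
  terminationGracePeriodSeconds: 60
  containers:
    - name: api                                  # hypothetical container
      image: registry.example.com/api:1.0.0      # placeholder image
      lifecycle:
        preStop:
          exec:
            # Pause before SIGTERM so load balancers stop routing new requests
            command: ["sleep", "10"]
```

The preStop delay counts against the grace period, so the application has roughly 50 seconds after SIGTERM to finish in-flight work before it is killed.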

Node Draining During Updates

Controlled node updates require graceful draining. Karpenter’s do-not-disrupt annotation protects nodes during critical operations such as end-of-month batch processing or active incident response:

    apiVersion: v1
    kind: Node
    metadata:
      name: ip-10-0-42-187.us-east-1.compute.internal
      annotations:
        karpenter.sh/do-not-disrupt: "true"

For rolling updates across your fleet, configure expiration policies that force gradual node replacement. The disruption budget limits concurrent replacements to prevent capacity shortages:

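    spec:
      disruption:
        expireAfter: 168h  # 7 days
        budgets:
          - nodes: "10%"

This configuration ensures no more than 10% of nodes drain simultaneously while guaranteeing every node gets replaced weekly with fresh AMIs and updated configurations. The layout shown is for the v1beta1 API. The v1 API moves expireAfter to spec.template.spec and treats expiration as a forceful disruption that budgets do not throttle, so rely on drift and consolidation for paced rollouts there.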

Capacity Reservation for Critical Workloads

For workloads that cannot tolerate provisioning delays, reserve on-demand capacity using EC2 Capacity Reservations integrated with Karpenter. This guarantees instance availability regardless of regional demand:

    apiVersion: karpenter.k8s.aws/v1
    kind: EC2NodeClass
    metadata:
      name: reserved-capacity
    spec:
      amiSelectorTerms:
        - alias: al2023@latest
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: my-cluster
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: my-cluster
      capacityReservationSelectorTerms:
        - tags:
            purpose: critical-workloads

This ensures critical pods always have guaranteed capacity, even during major spot reclamation events or regional capacity constraints. Reserve capacity in each AZ where you run critical workloads to maintain multi-AZ resilience.
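An EC2NodeClass only takes effect through a NodePool that references it. The sketch below assumes a Karpenter release with reserved-capacity support, where `reserved` is an accepted value for karpenter.sh/capacity-type; verify this against the version you run. The NodePool name is hypothetical.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: critical-reserved   # hypothetical name
spec:
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: reserved-capacity
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          # Assumption: "reserved" requires reserved-capacity support in
          # your Karpenter version. "on-demand" is listed as a fallback
          # for when the reservation is fully used.
          values: ["reserved", "on-demand"]
```

Dropping `on-demand` from the list would confine this pool to the reservation, at the cost of pending pods once it is exhausted.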

The patterns covered here form the foundation of cluster reliability. However, resilience configurations require ongoing maintenance—AMI updates, policy tuning, and incident response procedures. The next section provides an operational runbook for day-2 operations that keeps these patterns effective over time.

Operational Runbook: Day-2 Operations

A well-architected EKS cluster requires ongoing maintenance to remain secure, cost-effective, and performant. This runbook provides concrete procedures for the most common day-2 operations you’ll encounter.

Rolling AMI Updates

Amazon releases updated EKS-optimized AMIs regularly, including security patches and Kubernetes version updates. For managed node groups, use a rolling update strategy that respects Pod Disruption Budgets:

- Update the launch template with the new AMI ID

- Trigger a node group update through the EKS console or eksctl upgrade nodegroup

- Monitor the rollout: EKS cordons nodes, drains pods, and replaces instances in batches, up to the node group’s configured maximum unavailable count

For Karpenter-managed nodes, updates happen automatically when you update the EC2NodeClass AMI selector. Karpenter’s drift detection identifies nodes running outdated AMIs and gracefully replaces them, subject to the NodePool’s disruption budgets.
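If you would rather roll out AMIs deliberately than track the newest release, one option is to pin the alias to a specific version and bump it when you are ready. This is a sketch only: the version string is a placeholder and the EC2NodeClass name is assumed. Only the AMI selector is shown.

```yaml
apiVersion: karpenter.k8s.aws/v1
kind: EC2NodeClass
metadata:
  name: default   # assumed name
spec:
  amiSelectorTerms:
    # Placeholder version: substitute a real EKS-optimized AL2023 release.
    # Changing this value marks existing nodes as drifted, and Karpenter
    # then replaces them.
    - alias: al2023@v20250101
```

With `al2023@latest`, as used earlier, drift is triggered whenever a new AMI is published rather than when you edit the manifest.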

💡 Pro Tip: Schedule AMI updates during low-traffic periods. Karpenter’s expireAfter setting forces node rotation within a defined window, ensuring you never run nodes older than your compliance requirements allow.

Pre-Scaling for Known Events

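When you anticipate traffic spikes (product launches, marketing campaigns, seasonal peaks), prepare Karpenter NodePools by temporarily adjusting limits. Increase the limits.cpu and limits.memory values 30 minutes before the event, then restore the original values afterward. Raising limits only lifts the ceiling on what Karpenter may provision, so a surge is not blocked by a cap. Karpenter still launches nodes in response to pending pods, so scale up replicas ahead of the event if you need warm capacity and want to avoid cold-start latency.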
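For illustration, the limits stanza might look like this during an event window. The numbers are assumptions rather than recommendations, and only the relevant part of the NodePool is shown.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: spot-workloads
spec:
  limits:
    # Assumed event-window ceiling; restore your normal values afterward.
    cpu: "2000"
    memory: 8000Gi
```

Keeping the normal and event values in version control makes the restore step a simple revert.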

For predictable patterns, create separate NodePools with aggressive scaling parameters that activate only during event windows using weighted node selection.
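A dedicated event pool could be sketched as follows. The weight field makes Karpenter prefer this pool over lower-weighted ones. How the pool is switched on and off is left open by the text; creating it before the event and deleting it afterward is one assumed approach. All names and values here are hypothetical.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: event-burst   # hypothetical name
spec:
  # Higher weight means this pool is considered before others.
  weight: 100
  limits:
    cpu: "500"        # assumed cap for the event
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default # assumed EC2NodeClass
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
```

Deleting a NodePool also removes the nodes it owns, so remove it only after event traffic has drained.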

Debugging Provisioning Failures

When pods remain pending, follow this diagnostic sequence:

- Check kubectl describe pod for scheduling constraints that no node satisfies

- Review Karpenter controller logs for capacity errors: kubectl logs -n karpenter -l app.kubernetes.io/name=karpenter

- Verify EC2 capacity in your target availability zones; spot interruptions or instance shortages surface here

- Confirm IAM permissions haven’t drifted, particularly for the Karpenter node role

Common culprits include overly restrictive node selectors, insufficient NodePool limits, and availability zone capacity constraints during regional demand spikes.

Monthly Cost Optimization Review

Establish a recurring review cadence using the observability stack from the previous section. Examine Karpenter’s consolidation efficiency, identify workloads consistently landing on expensive instance types, and validate that spot versus on-demand ratios match your risk tolerance.

These operational procedures form the foundation for maintaining cluster health. However, understanding what happens when components fail—and how to recover—requires examining specific failure modes and their recovery patterns.

Key Takeaways

- Use managed node groups for baseline capacity that covers 60-70% of typical load, letting Karpenter handle the variable remainder

- Configure Karpenter NodePools with consolidation enabled and appropriate disruption budgets to prevent both over-provisioning and unnecessary pod disruption

- Implement pod topology spread constraints and priority classes before you need them—retrofitting during an incident is painful

- Set up cost allocation tags from day one; per-workload cost attribution is very hard to reconstruct after the fact

- Test AZ failure scenarios quarterly by cordoning and draining nodes in a single zone and validating that your pod disruption budgets actually work