Implementing Hexagonal Architecture in Microservices: A Practical Guide to Ports and Adapters

Your microservice started clean. Six months later, your HTTP handlers contain business logic, your database queries are scattered across services, and changing your message broker requires touching fifty files. The coupling crept in gradually, and now every change feels like surgery.

This pattern repeats across teams and codebases. You begin with clear intentions—a service that handles orders, another for inventory, a third for notifications. The boundaries seem obvious. Then reality sets in. A deadline pushes you to add “just one” database call directly in your controller. A new requirement means your Kafka consumer now validates business rules inline. Your repository layer starts making HTTP calls to other services because “it’s just easier.”

The architecture diagrams still look clean. The code tells a different story.

What makes this particularly insidious is how reasonable each individual decision seems at the time. No single commit introduces catastrophic coupling. Instead, infrastructure concerns seep into your domain logic through a thousand small conveniences. Your unit tests now require a running database. Swapping PostgreSQL for DynamoDB means rewriting your entire service layer. That message broker migration you estimated at two weeks? You’re now in month three.

The cost compounds. New team members take longer to understand where business logic actually lives. Bug fixes create unexpected side effects. Feature development slows as developers spend more time navigating the maze than solving problems.

Traditional layered architecture promised to prevent this. Controllers call services, services call repositories—clean and simple. Yet here you are, with layers that leak and boundaries that blur. The problem isn’t the layers themselves. It’s what happens when infrastructure decisions become inseparable from the business rules they’re supposed to support.

The Coupling Problem in Microservices

Every microservice starts with good intentions. Clean separation of concerns. Clear boundaries. A focused domain. Then the shortcuts begin.

A database query sneaks into a controller. An HTTP client call appears in a service method. AWS SDK imports scatter across your business logic. Six months later, you’re staring at a service where PostgreSQL assumptions live three layers deep, and swapping to DynamoDB means rewriting half your codebase.

The Slow Creep of Infrastructure

Infrastructure coupling rarely arrives through a single bad decision. It accumulates through hundreds of small conveniences:

- Repository methods that return database-specific types instead of domain objects

- Business logic that catches SQLException instead of domain exceptions

- Service methods that accept HttpRequest objects directly

- Validation rules that depend on ORM annotations

Each shortcut saves five minutes today. Each one adds friction to every change tomorrow.
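Two of these shortcuts can be made concrete. The sketch below shows the alternative at an adapter boundary: a driver row is translated into a domain object, and a driver error into a domain exception, before either reaches business logic. DriverError, OrderRow, and the status_code encoding are hypothetical stand-ins for whatever your database client actually returns.

```typescript
// Hypothetical stand-ins for a database driver's error and row types.
class DriverError extends Error {
  constructor(message: string, public readonly code: string) {
    super(message);
  }
}
interface OrderRow {
  order_id: string;
  customer_id: string;
  status_code: number; // assumed encoding: 0 = draft, 1 = placed
}

// Domain vocabulary: nothing here mentions rows, drivers, or SQL.
type OrderStatus = 'draft' | 'placed';
interface Order {
  id: string;
  customerId: string;
  status: OrderStatus;
}
class OrderNotFoundError extends Error {}
class OrderStoreUnavailableError extends Error {}

// Adapter-side translation: the row shape stops here.
function toDomain(row: OrderRow): Order {
  return {
    id: row.order_id,
    customerId: row.customer_id,
    status: row.status_code === 1 ? 'placed' : 'draft',
  };
}

async function findOrder(
  id: string,
  query: (id: string) => Promise<OrderRow | undefined>,
): Promise<Order> {
  let row: OrderRow | undefined;
  try {
    row = await query(id);
  } catch (err) {
    // Callers see a domain exception, never the driver's error type.
    if (err instanceof DriverError) {
      throw new OrderStoreUnavailableError(`Order store unavailable (${err.code})`);
    }
    throw err;
  }
  if (!row) throw new OrderNotFoundError(`Order ${id} not found`);
  return toDomain(row);
}
```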

The Real Cost

Tight coupling between business logic and infrastructure creates three compounding problems.

Development velocity degrades over time. Simple feature additions require changes across multiple layers. A new field in your domain model triggers modifications in controllers, services, repositories, and database migrations—not because the business logic demands it, but because infrastructure concerns have bled everywhere.

Tests become brittle and slow. When your order processing logic directly calls a payment gateway client, every unit test needs to mock HTTP responses. When your inventory checks hit Redis directly, tests require running containers. Teams respond by writing fewer tests or slower integration tests. Both paths lead to regression bugs.

Migrations become rewrites. Switching message brokers from RabbitMQ to Kafka should be a contained infrastructure change. In a tightly coupled system, it touches every service that publishes or consumes messages. What should take a week takes a quarter.

Why Layered Architecture Falls Short

Traditional three-tier architecture—controllers, services, repositories—promises separation but delivers a false sense of security. The layers create a dependency direction (controllers depend on services, services depend on repositories), but nothing prevents infrastructure concerns from flowing upward through return types, exceptions, and implicit assumptions.

You end up with services that are technically separate from repositories but practically inseparable from the database technology behind them.

The solution isn’t more layers. It’s inverting the dependency direction entirely—making infrastructure depend on your business logic instead of the reverse.
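A minimal sketch of that inversion, using hypothetical names: the domain module declares the OrderStore interface it needs, and the infrastructure class implements it. A Map stands in for a real database client so the example runs on its own, and the discount rule is invented purely for illustration.

```typescript
// ---- Domain module: owns the abstraction it depends on ----
interface Order {
  id: string;
  totalCents: number;
}

// Declared by the domain, in domain terms; no database types appear here.
interface OrderStore {
  load(id: string): Promise<Order | null>;
}

class DiscountPolicy {
  constructor(private readonly store: OrderStore) {}

  // Illustrative rule: orders of 10,000 cents or more get 10% off.
  async discountedTotal(orderId: string): Promise<number> {
    const order = await this.store.load(orderId);
    if (!order) throw new Error(`Order ${orderId} not found`);
    return order.totalCents >= 10_000
      ? Math.round(order.totalCents * 0.9)
      : order.totalCents;
  }
}

// ---- Infrastructure module: depends on the domain's interface ----
// A Map stands in for a real database client.
class InMemoryOrderStore implements OrderStore {
  private readonly orders = new Map<string, Order>();

  add(order: Order): void {
    this.orders.set(order.id, order);
  }

  async load(id: string): Promise<Order | null> {
    return this.orders.get(id) ?? null;
  }
}

// ---- Composition: the only code that knows both sides ----
const store = new InMemoryOrderStore();
store.add({ id: 'o-1', totalCents: 12_000 });
const policy = new DiscountPolicy(store);
```

DiscountPolicy never imports InMemoryOrderStore; replacing the store with a database-backed class changes only the composition lines at the bottom.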

This inversion sits at the heart of hexagonal architecture, a pattern that treats your domain as the center of the universe and everything else as replaceable plugins.

Hexagonal Architecture: The Core Mental Model

Before diving into implementation details, you need a solid mental model of hexagonal architecture. This section establishes the vocabulary and concepts that will guide every design decision throughout your microservice.

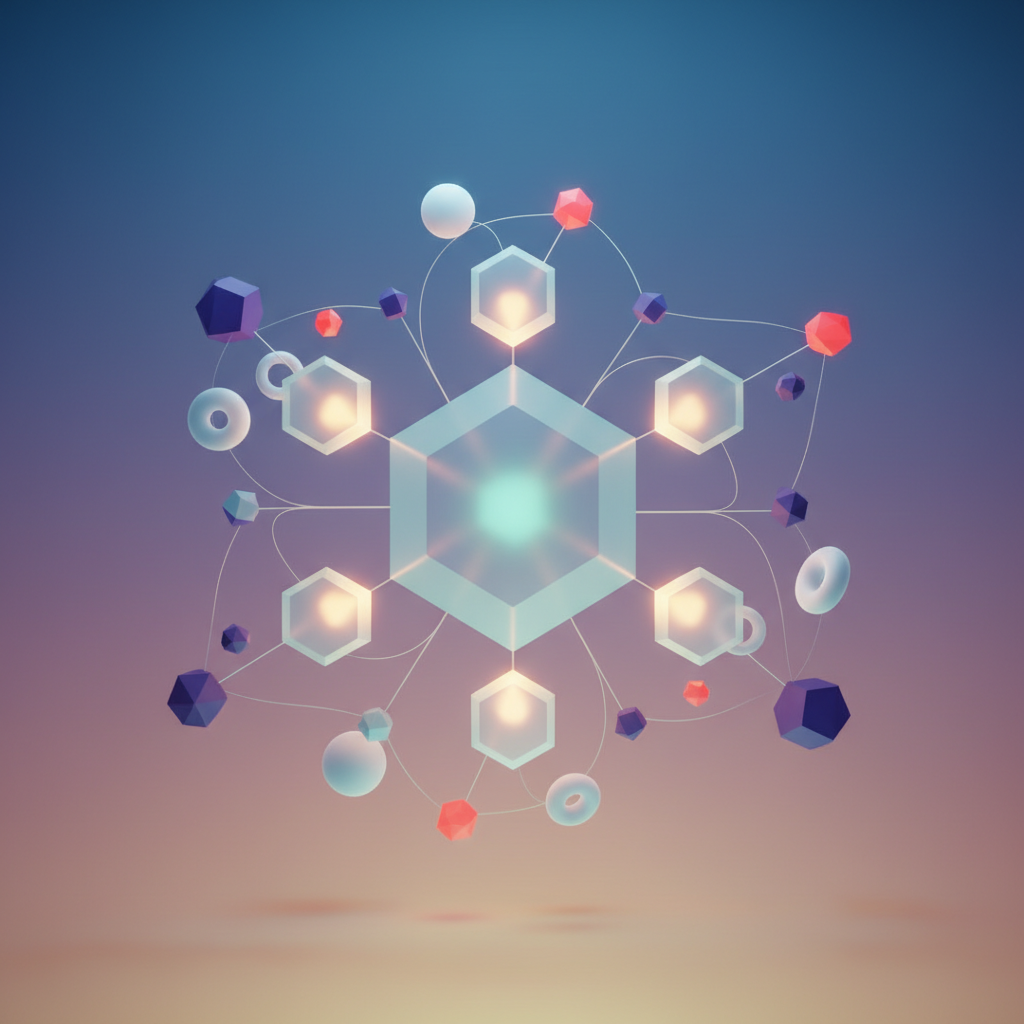

Hexagonal architecture, also known as Ports and Adapters, organizes code into three distinct zones: the domain core at the center, ports defining boundaries, and adapters connecting to the outside world. Picture a hexagon—the shape is arbitrary, but the geometry emphasizes that your application has multiple equivalent faces to the external world, none more privileged than another.

The Domain at the Center

The innermost layer contains your business logic in its purest form. This code knows nothing about HTTP, databases, message queues, or any other infrastructure concern. It speaks only in the language of your problem domain.

A payment processing service’s domain core understands concepts like transactions, refunds, and settlement rules. It does not understand PostgreSQL row locks, Kafka partitions, or REST status codes. This separation keeps business rules testable, portable, and immune to infrastructure changes.

The domain core depends on nothing external. Dependencies flow inward: outer layers know about inner layers, never the reverse. This dependency inversion is the architectural foundation that makes hexagonal systems maintainable.

Ports as Contracts

Ports are interfaces that define what your domain needs without specifying how those needs get fulfilled. They represent the boundary between your business logic and the outside world.

Think of a port as a job description. It declares capabilities required—“I need something that can store customer records”—without caring whether a PostgreSQL database, MongoDB cluster, or in-memory map fulfills that role.

Ports come in two varieties based on who initiates the interaction:

Primary ports (also called driving ports) define how external actors interact with your domain. A REST controller or gRPC handler uses a primary port to invoke business operations. The outside world drives your application through these interfaces.

Secondary ports (also called driven ports) define what your domain needs from external systems. Database repositories, payment gateways, and notification services implement secondary ports. Your application drives these external systems through these interfaces.

💡 Pro Tip: Name your ports based on domain concepts, not technology. OrderRepository communicates intent better than PostgresOrderStore. The port doesn't care about the storage mechanism.
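Both kinds of port can be seen in one small sketch. RegisterCustomer is a primary port the application implements, CustomerStore is a secondary port the application calls, and one adapter sits on each side. All names and the duplicate-email rule are illustrative assumptions, not a prescribed API.

```typescript
// Primary (driving) port: what the outside world can ask the application to do.
interface RegisterCustomer {
  register(email: string): Promise<string>;
}

// Secondary (driven) port: what the application needs from the outside world.
interface CustomerStore {
  existsByEmail(email: string): Promise<boolean>;
  insert(id: string, email: string): Promise<void>;
}

class DuplicateEmailError extends Error {}

// Application core: implements the primary port, calls the secondary port.
class CustomerRegistration implements RegisterCustomer {
  private nextId = 1;

  constructor(private readonly store: CustomerStore) {}

  async register(email: string): Promise<string> {
    const normalized = email.trim().toLowerCase();
    if (await this.store.existsByEmail(normalized)) {
      throw new DuplicateEmailError(`Email already registered: ${normalized}`);
    }
    const id = `cust-${this.nextId++}`;
    await this.store.insert(id, normalized);
    return id;
  }
}

// Driven adapter: implements the secondary port (a Map stands in for a database).
class MapCustomerStore implements CustomerStore {
  readonly idsByEmail = new Map<string, string>();

  async existsByEmail(email: string): Promise<boolean> {
    return this.idsByEmail.has(email);
  }

  async insert(id: string, email: string): Promise<void> {
    this.idsByEmail.set(email, id);
  }
}

// Driving adapter: turns an external request into a primary-port call.
async function handleSignup(
  body: { email?: string },
  app: RegisterCustomer,
): Promise<{ status: number; body: unknown }> {
  if (!body.email) return { status: 400, body: { error: 'email is required' } };
  try {
    return { status: 201, body: { id: await app.register(body.email) } };
  } catch (err) {
    if (err instanceof DuplicateEmailError) return { status: 409, body: { error: err.message } };
    throw err;
  }
}
```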

Adapters as Implementations

Adapters translate between the domain’s language and the external world’s protocols. Each adapter implements a port, handling all technology-specific concerns.

A REST adapter translates HTTP requests into domain method calls and domain responses back into JSON. A PostgreSQL adapter translates repository method calls into SQL queries and result sets back into domain objects. The domain remains oblivious to these translations.

Multiple adapters can implement the same port. Your test suite uses an in-memory adapter for the repository port while production uses PostgreSQL. Swapping implementations requires no changes to business logic—the port contract guarantees compatibility.

This adapter interchangeability delivers immediate practical benefits: faster test execution, simplified local development, and the flexibility to swap infrastructure without touching your core.
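Interchangeability also means adapters can be stacked. In this sketch, with hypothetical names, an in-memory adapter and a caching decorator both implement the same ProductCatalog port; the decorator wraps any other adapter, and the calling function cannot tell which one it received.

```typescript
interface Product {
  sku: string;
  priceCents: number;
}

// The port: the only thing calling code knows about.
interface ProductCatalog {
  findBySku(sku: string): Promise<Product | null>;
}

// Adapter 1: in-memory, suitable for tests and local development.
class InMemoryProductCatalog implements ProductCatalog {
  lookups = 0; // exposed so the example can show how often it is hit

  constructor(private readonly products: Product[]) {}

  async findBySku(sku: string): Promise<Product | null> {
    this.lookups++;
    return this.products.find(p => p.sku === sku) ?? null;
  }
}

// Adapter 2: a decorator implementing the same port around any other adapter.
// It also caches misses (null results).
class CachingProductCatalog implements ProductCatalog {
  private readonly cache = new Map<string, Product | null>();

  constructor(private readonly inner: ProductCatalog) {}

  async findBySku(sku: string): Promise<Product | null> {
    if (this.cache.has(sku)) return this.cache.get(sku) ?? null;
    const product = await this.inner.findBySku(sku);
    this.cache.set(sku, product);
    return product;
  }
}

// Domain-side code depends only on the port.
async function priceOf(catalog: ProductCatalog, sku: string, qty: number): Promise<number> {
  const product = await catalog.findBySku(sku);
  if (!product) throw new Error(`Unknown SKU ${sku}`);
  return product.priceCents * qty;
}
```

Because CachingProductCatalog only knows the port, the same decorator could wrap a database-backed adapter in production without changes.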

With this mental model established, the next section examines how to design ports that genuinely protect your domain boundaries rather than leaking infrastructure concerns inward.

Designing Ports: Contracts That Protect Your Domain

Ports are the formal contracts that define how your domain interacts with the outside world. They’re interfaces—nothing more, nothing less—but their design determines whether your architecture delivers on its promise of clean separation. Getting ports right means your domain remains testable, your adapters stay interchangeable, and your codebase communicates intent clearly to every developer who reads it.

Inbound Ports: What Your Application Does

Inbound ports define the use cases your application exposes. Each port represents a specific capability that external actors (HTTP controllers, CLI commands, message consumers) can invoke. Think of them as the menu of operations your domain offers to the outside world.

```typescript
export interface CreateOrderCommand {
  customerId: string;
  items: Array<{ productId: string; quantity: number }>;
  shippingAddress: Address;
}

export interface OrderServicePort {
  createOrder(command: CreateOrderCommand): Promise<Order>;
  cancelOrder(orderId: string, reason: string): Promise<void>;
  getOrderStatus(orderId: string): Promise<OrderStatus>;
}
```

Notice the naming: CreateOrderCommand uses domain language, not HTTP terminology. The port knows nothing about request bodies, headers, or status codes. This separation means you can invoke the same use case from a REST endpoint, a GraphQL resolver, or a Kafka consumer without changing a single line of domain code. The inbound port becomes a stable contract that shields your domain from the volatility of transport mechanisms and presentation concerns.
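To illustrate that transport independence, here is a sketch of two driving adapters, one for an HTTP-style request body and one for a queue message, that both build a CreateOrderCommand and call the same OrderServicePort. The port is trimmed to createOrder, Address and Order are simplified, and both wire formats are assumptions; no particular web framework or broker client is involved.

```typescript
// Simplified versions of the domain types the port references (assumed shapes).
interface Address { line1: string; city: string; country: string; }
interface Order { id: string; customerId: string; }
interface CreateOrderCommand {
  customerId: string;
  items: Array<{ productId: string; quantity: number }>;
  shippingAddress: Address;
}
interface OrderServicePort {
  createOrder(command: CreateOrderCommand): Promise<Order>;
}

// Driving adapter 1: an HTTP body in snake_case (hypothetical wire format).
interface HttpCreateOrderBody {
  customer_id: string;
  items: Array<{ product_id: string; quantity: number }>;
  shipping_address: Address;
}

async function handleHttp(body: HttpCreateOrderBody, service: OrderServicePort) {
  const order = await service.createOrder({
    customerId: body.customer_id,
    items: body.items.map(i => ({ productId: i.product_id, quantity: i.quantity })),
    shippingAddress: body.shipping_address,
  });
  return { status: 201, body: { order_id: order.id } };
}

// Driving adapter 2: a JSON queue message (hypothetical payload shape).
async function handleMessage(raw: string, service: OrderServicePort): Promise<void> {
  const msg = JSON.parse(raw) as {
    customer: string;
    lines: Array<[string, number]>;
    ship_to: Address;
  };
  await service.createOrder({
    customerId: msg.customer,
    items: msg.lines.map(([productId, quantity]) => ({ productId, quantity })),
    shippingAddress: msg.ship_to,
  });
}
```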

Outbound Ports: What Your Application Needs

Outbound ports declare dependencies on external systems—databases, APIs, message queues—without specifying how those dependencies work. They represent the domain’s requirements expressed as abstractions rather than concrete implementations.

```typescript
export interface OrderRepositoryPort {
  save(order: Order): Promise<void>;
  findById(orderId: string): Promise<Order | null>;
  findByCustomer(customerId: string, options?: PaginationOptions): Promise<Order[]>;
}

export interface PaymentGatewayPort {
  charge(payment: PaymentRequest): Promise<PaymentResult>;
  refund(transactionId: string, amount: Money): Promise<RefundResult>;
}
```

The domain declares what it needs through these interfaces. Whether orders live in PostgreSQL, MongoDB, or an in-memory store becomes an implementation detail handled by adapters. This dependency inversion keeps your domain pure and makes testing straightforward: you can replace real databases with simple in-memory implementations during tests.
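An in-memory implementation of OrderRepositoryPort might look like the following sketch. Order is reduced to the fields the repository needs, and PaginationOptions, which the port references but which is not defined above, is assumed here to be a limit/offset pair.

```typescript
interface Order {
  id: string;
  customerId: string;
  createdAt: Date;
}
interface PaginationOptions {
  limit: number;
  offset: number;
} // assumed shape

interface OrderRepositoryPort {
  save(order: Order): Promise<void>;
  findById(orderId: string): Promise<Order | null>;
  findByCustomer(customerId: string, options?: PaginationOptions): Promise<Order[]>;
}

class InMemoryOrderRepository implements OrderRepositoryPort {
  private readonly orders = new Map<string, Order>();

  async save(order: Order): Promise<void> {
    // Store a shallow copy so later caller mutations don't alter "persisted" state.
    this.orders.set(order.id, { ...order });
  }

  async findById(orderId: string): Promise<Order | null> {
    const order = this.orders.get(orderId);
    return order ? { ...order } : null;
  }

  async findByCustomer(customerId: string, options?: PaginationOptions): Promise<Order[]> {
    const matches = Array.from(this.orders.values())
      .filter(o => o.customerId === customerId)
      .sort((a, b) => a.createdAt.getTime() - b.createdAt.getTime()); // oldest first
    const offset = options?.offset ?? 0;
    const limit = options?.limit ?? matches.length;
    return matches.slice(offset, offset + limit).map(o => ({ ...o }));
  }
}
```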

Port Granularity: Finding the Right Balance

A common mistake is creating ports that are either too granular or too coarse. Split ports when:

- Different adapters need different subsets of operations

- Operations have fundamentally different failure modes

- You want to enforce the Interface Segregation Principle

```typescript
// Too coarse - forces adapters to implement everything
export interface NotificationPort {
  sendEmail(to: string, template: EmailTemplate): Promise<void>;
  sendSMS(phone: string, message: string): Promise<void>;
  sendPush(deviceToken: string, notification: PushNotification): Promise<void>;
}

// Better - separate concerns
export interface EmailPort {
  send(to: string, template: EmailTemplate): Promise<void>;
}

export interface SMSPort {
  send(phone: string, message: string): Promise<void>;
}
```

Combine ports when operations are always used together and share the same adapter lifecycle. A CustomerRepositoryPort makes more sense than separate CustomerReadPort and CustomerWritePort interfaces unless you’re implementing CQRS. The goal is cohesion: methods that change together should live together.

💡 Pro Tip: Start with coarser ports and split them when you feel friction—when an adapter implements methods it doesn’t need, or when tests require excessive mocking. Let real pain drive the design, not theoretical purity.
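One practical payoff of splitting, sketched with hypothetical names: a use case that only sends email depends only on EmailPort, so its test double implements a single method and needs no SMS or push stubs. EmailTemplate is assumed to be a template id plus variables, since its shape isn't defined above.

```typescript
interface EmailTemplate {
  id: string;
  variables: Record<string, string>;
} // assumed shape

interface EmailPort {
  send(to: string, template: EmailTemplate): Promise<void>;
}

// The use case declares exactly the capability it needs, nothing more.
class PasswordResetNotifier {
  constructor(private readonly email: EmailPort) {}

  async notify(userEmail: string, resetLink: string): Promise<void> {
    await this.email.send(userEmail, { id: 'password-reset', variables: { resetLink } });
  }
}

// Test double: one method to implement, no SMS or push stubs required.
class RecordingEmailAdapter implements EmailPort {
  readonly sent: Array<{ to: string; template: EmailTemplate }> = [];

  async send(to: string, template: EmailTemplate): Promise<void> {
    this.sent.push({ to, template });
  }
}
```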

Type Safety at the Boundary

Ports benefit from strict typing that eliminates entire categories of bugs. TypeScript’s type system lets you encode constraints that would otherwise live only in documentation or runtime checks.

```typescript
export type ReservationId = string & { readonly brand: unique symbol };

export interface InventoryServicePort {
  reserve(
    productId: string,
    quantity: number,
    expiresAt: Date
  ): Promise<ReservationId>;

  confirm(reservationId: ReservationId): Promise<void>;
  release(reservationId: ReservationId): Promise<void>;
}
```

Branded types like ReservationId prevent you from accidentally passing an order ID where a reservation ID belongs. The compiler catches these errors before they reach production. This pattern is particularly valuable at port boundaries where values flow between different parts of your system. Combined with discriminated unions for result types, you can model success and failure cases explicitly, making impossible states unrepresentable in your type definitions.
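A sketch of both ideas together, under stated assumptions. Since a plain string cannot be assigned to a branded type without an assertion, one common approach (assumed here, not prescribed above) is a factory that validates input and performs the cast in one place. A discriminated union then models each outcome of a reservation attempt. The id format and the result variants are illustrative.

```typescript
// Branded types: structurally strings, but not interchangeable at compile time.
type ReservationId = string & { readonly brand: unique symbol };
type OrderId = string & { readonly brand: unique symbol };

// The single place where an unchecked string becomes a ReservationId.
// Assumed format: "res-" followed by alphanumerics.
function toReservationId(raw: string): ReservationId {
  if (!/^res-[A-Za-z0-9]+$/.test(raw)) {
    throw new Error(`Invalid reservation id: ${raw}`);
  }
  return raw as ReservationId;
}

// Discriminated union: every outcome is explicit.
type ReserveResult =
  | { kind: 'reserved'; reservationId: ReservationId; expiresAt: Date }
  | { kind: 'insufficient_stock'; available: number }
  | { kind: 'unknown_product'; productId: string };

function summarize(result: ReserveResult): string {
  switch (result.kind) {
    case 'reserved':
      return `reserved ${result.reservationId} until ${result.expiresAt.toISOString()}`;
    case 'insufficient_stock':
      return `only ${result.available} available`;
    case 'unknown_product':
      return `no such product ${result.productId}`;
    default: {
      // Adding a variant without handling it makes this assignment fail to compile.
      const unreachable: never = result;
      return unreachable;
    }
  }
}

function confirmReservation(id: ReservationId): string {
  return `confirmed ${id}`;
}

// const orderId = 'ord-1' as OrderId;
// confirmReservation(orderId); // compile error: OrderId is not assignable to ReservationId
```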

Ports form the skeleton of your hexagonal architecture. They define the shape of your domain’s interactions without dictating implementation. With well-designed ports in place, you’re ready to build the adapters that bring these contracts to life.

Building Adapters: Implementing Infrastructure Concerns

Adapters are where your architecture meets reality. They translate between your domain’s language and the protocols, databases, and messaging systems that exist in the outside world. A well-designed adapter isolates all infrastructure complexity, allowing you to swap implementations without touching a single line of business logic. The adapter layer serves as a protective boundary—infrastructure details stay contained, and your domain remains blissfully unaware of how data arrives or where it persists.

HTTP Adapter: Translating REST to Domain Operations

The HTTP adapter receives incoming requests and translates them into domain operations. It handles HTTP-specific concerns like status codes, headers, content negotiation, and request validation—none of which should leak into your domain. This adapter acts as an anti-corruption layer, protecting your domain model from the conventions and constraints of REST APIs.

```typescript
import { Request, Response } from 'express';
import { OrderService } from '../../domain/ports/order.service.port';
import { CreateOrderDto } from './dto/create-order.dto';

export class OrderController {
  constructor(private readonly orderService: OrderService) {}

  async createOrder(req: Request, res: Response): Promise<void> {
    const dto = req.body as CreateOrderDto;

    // Inbound translation: snake_case wire format -> camelCase domain command.
    const command = {
      customerId: dto.customer_id,
      items: dto.items.map(item => ({
        productId: item.product_id,
        quantity: item.quantity
      }))
    };

    const result = await this.orderService.createOrder(command);

    // HTTP status codes are decided here; the domain never sees them.
    if (result.isFailure) {
      res.status(400).json({ error: result.error });
      return;
    }

    // Outbound translation: domain object -> API response format.
    res.status(201).json({
      order_id: result.value.id,
      status: result.value.status,
      created_at: result.value.createdAt.toISOString()
    });
  }
}
```

Notice the adapter performs two translations: snake_case from the HTTP request becomes camelCase for the domain, and domain objects transform back to the API’s response format. The controller knows nothing about databases or message queues: it only speaks HTTP and domain commands. This separation means your API contract can evolve independently from your domain model. You might add new fields to the response, change date formats, or version your API without modifying domain logic.
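The two translations can also be pulled out of the controller into pure functions so they can be tested without Express. This is a sketch: the DTO field names mirror the controller above, while CreateOrderCommand and OrderView are simplified, assumed shapes.

```typescript
// Wire format (snake_case), as received from and sent to HTTP clients.
interface CreateOrderDto {
  customer_id: string;
  items: Array<{ product_id: string; quantity: number }>;
}
interface OrderResponseDto {
  order_id: string;
  status: string;
  created_at: string;
}

// Domain-facing shapes (simplified for the sketch).
interface CreateOrderCommand {
  customerId: string;
  items: Array<{ productId: string; quantity: number }>;
}
interface OrderView {
  id: string;
  status: string;
  createdAt: Date;
}

// Inbound translation: HTTP DTO -> domain command.
function toCommand(dto: CreateOrderDto): CreateOrderCommand {
  return {
    customerId: dto.customer_id,
    items: dto.items.map(item => ({ productId: item.product_id, quantity: item.quantity })),
  };
}

// Outbound translation: domain object -> HTTP response body.
function toResponse(order: OrderView): OrderResponseDto {
  return {
    order_id: order.id,
    status: order.status,
    created_at: order.createdAt.toISOString(),
  };
}
```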

Repository Adapter: PostgreSQL Implementation

The repository adapter implements your persistence port using a specific database technology. All SQL, connection pooling, transaction management, and query optimization lives here. This concentration of database concerns makes performance tuning straightforward—you know exactly where to look when queries need optimization.

```typescript
import { Pool } from 'pg';
import { Order } from '../../domain/entities/order';
import { OrderRepository } from '../../domain/ports/order.repository.port';

export class PostgresOrderRepository implements OrderRepository {
  constructor(private readonly pool: Pool) {}

  async save(order: Order): Promise<void> {
    // A dedicated client keeps every statement inside the same transaction.
    const client = await this.pool.connect();
    try {
      await client.query('BEGIN');

      await client.query(
        `INSERT INTO orders (id, customer_id, status, created_at)
         VALUES ($1, $2, $3, $4)
         ON CONFLICT (id) DO UPDATE SET status = $3`,
        [order.id, order.customerId, order.status, order.createdAt]
      );

      // Upserts the current items; rows for items removed from the order are not deleted here.
      for (const item of order.items) {
        await client.query(
          `INSERT INTO order_items (order_id, product_id, quantity, price)
           VALUES ($1, $2, $3, $4)
           ON CONFLICT (order_id, product_id) DO UPDATE SET quantity = $3`,
          [order.id, item.productId, item.quantity, item.price]
        );
      }

      await client.query('COMMIT');
    } catch (error) {
      await client.query('ROLLBACK');
      throw error;
    } finally {
      client.release();
    }
  }

  async findById(id: string): Promise<Order | null> {
    // FILTER + COALESCE: an order with no items yields [] instead of [null].
    const result = await this.pool.query(
      `SELECT o.*,
              COALESCE(json_agg(i.*) FILTER (WHERE i.order_id IS NOT NULL), '[]') AS items
       FROM orders o
       LEFT JOIN order_items i ON i.order_id = o.id
       WHERE o.id = $1
       GROUP BY o.id`,
      [id]
    );

    return result.rows[0] ? this.toDomain(result.rows[0]) : null;
  }

  private toDomain(row: any): Order {
    return Order.reconstitute({
      id: row.id,
      customerId: row.customer_id,
      status: row.status,
      // json_agg keeps column names, so item fields arrive in snake_case.
      items: row.items.map((item: any) => ({
        productId: item.product_id,
        quantity: item.quantity,
        price: item.price
      })),
      createdAt: row.created_at
    });
  }
}
```

The toDomain method handles the impedance mismatch between relational data and domain objects. Your domain never sees database rows or SQL, only rich domain entities. This mapping layer absorbs the differences between how data is stored (normalized tables with foreign keys) and how your domain thinks about it (aggregate roots with embedded collections).
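As adapters grow, the BEGIN/COMMIT/ROLLBACK handling in save can be factored into a helper. The sketch below declares minimal structural types, TxPool and TxClient (hypothetical names), covering only the connect, query, and release calls that save makes. They are intended to mirror that subset of node-postgres, and keeping them structural lets the helper be exercised with a fake client.

```typescript
// Minimal structural types for the calls save() makes (intended to mirror node-postgres).
interface TxClient {
  query(text: string, values?: unknown[]): Promise<unknown>;
  release(): void;
}
interface TxPool {
  connect(): Promise<TxClient>;
}

// Runs work inside BEGIN/COMMIT, rolls back on any error, and always releases the client.
async function withTransaction<T>(
  pool: TxPool,
  work: (client: TxClient) => Promise<T>,
): Promise<T> {
  const client = await pool.connect();
  try {
    await client.query('BEGIN');
    const result = await work(client);
    await client.query('COMMIT');
    return result;
  } catch (error) {
    await client.query('ROLLBACK');
    throw error;
  } finally {
    client.release();
  }
}
```

Assuming pg's Pool satisfies these structural types, save could become withTransaction(this.pool, async client => { ... }) with only the INSERT statements inside the callback.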

Message Queue Adapter: Decoupled Event Publishing

The event publisher adapter implements your messaging port, encapsulating broker-specific details like connection management, serialization, routing strategies, and delivery guarantees. By isolating these concerns, you can switch from RabbitMQ to Kafka or even to an in-memory implementation for testing without changing how your domain raises events.

```typescript
import { Channel, Connection } from 'amqplib';
import { DomainEvent } from '../../domain/events/domain-event';
import { EventPublisher } from '../../domain/ports/event.publisher.port';

export class RabbitMQEventPublisher implements EventPublisher {
  private channel?: Channel;

  constructor(private readonly connection: Connection) {}

  // Call once before publish(); asserting the exchange is idempotent.
  async initialize(): Promise<void> {
    this.channel = await this.connection.createChannel();
    await this.channel.assertExchange('domain.events', 'topic', { durable: true });
  }

  async publish(event: DomainEvent): Promise<void> {
    if (!this.channel) {
      throw new Error('RabbitMQEventPublisher.initialize() must be called before publish()');
    }

    // Serialize the domain event into the wire format consumers expect.
    const routingKey = `orders.${event.type}`;
    const message = Buffer.from(JSON.stringify({
      event_id: event.id,
      event_type: event.type,
      occurred_at: event.occurredAt.toISOString(),
      payload: event.payload
    }));

    // publish() returns false when the channel's write buffer is full;
    // production code may want to wait for the 'drain' event or use a confirm channel.
    this.channel.publish('domain.events', routingKey, message, {
      persistent: true,
      contentType: 'application/json'
    });
  }
}
```

💡 Pro Tip: Keep event serialization in the adapter. Your domain events use rich types; the adapter transforms them to the wire format your consumers expect. This allows consumers to be written in different languages without imposing constraints on your domain model.
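The in-memory implementation mentioned at the start of this subsection could be as small as the following sketch. The DomainEvent shape (id, type, occurredAt, payload) is inferred from the fields the RabbitMQ adapter serializes, the EventPublisher port is assumed to consist of publish alone, and the subscribe helper is a hypothetical test convenience.

```typescript
interface DomainEvent {
  id: string;
  type: string;
  occurredAt: Date;
  payload: Record<string, unknown>;
}

interface EventPublisher {
  publish(event: DomainEvent): Promise<void>;
}

// Test adapter: records every event and notifies subscribers by routing key.
class InMemoryEventPublisher implements EventPublisher {
  readonly published: Array<{ routingKey: string; event: DomainEvent }> = [];
  private readonly handlers = new Map<string, Array<(event: DomainEvent) => void>>();

  subscribe(routingKey: string, handler: (event: DomainEvent) => void): void {
    const list = this.handlers.get(routingKey) ?? [];
    list.push(handler);
    this.handlers.set(routingKey, list);
  }

  async publish(event: DomainEvent): Promise<void> {
    // Same routing-key convention as the RabbitMQ adapter.
    const routingKey = `orders.${event.type}`;
    this.published.push({ routingKey, event });
    for (const handler of this.handlers.get(routingKey) ?? []) handler(event);
  }
}
```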

Adapter Composition with Dependency Injection

Wire your adapters together at the application’s composition root. This is the only place that knows about concrete implementations—the single location where you make binding decisions between abstractions and their implementations.

```typescript
import { Pool } from 'pg';
import { connect } from 'amqplib';
// PostgresOrderRepository, RabbitMQEventPublisher, OrderServiceImpl, and
// OrderController come from their own modules (imports omitted).

export async function bootstrap() {
  const rabbitUrl = process.env.RABBITMQ_URL;
  if (!rabbitUrl) {
    throw new Error('RABBITMQ_URL must be set');
  }

  // Infrastructure clients: constructed here and nowhere else.
  const pgPool = new Pool({ connectionString: process.env.DATABASE_URL });
  const rabbitConnection = await connect(rabbitUrl);

  // Adapters, bound to the ports they implement.
  const orderRepository = new PostgresOrderRepository(pgPool);
  const eventPublisher = new RabbitMQEventPublisher(rabbitConnection);
  await eventPublisher.initialize();

  // The application service receives ports; the controller receives the service.
  const orderService = new OrderServiceImpl(orderRepository, eventPublisher);
  const orderController = new OrderController(orderService);

  return { orderController };
}
```

Every dependency flows inward. Adapters depend on ports; the domain depends on nothing external. Swapping PostgreSQL for MongoDB means writing a new adapter and changing one line in the composition root. This dependency inversion delivers testability as a side effect: inject mock adapters during testing to verify domain behavior without spinning up databases or message brokers.

With your adapters handling infrastructure concerns, the domain core remains pristine. The boundary is clear: adapters translate, ports define contracts, and the domain enforces business rules. Let’s examine how to structure that core to maximize the benefits of this isolation.

The Domain Core: Pure Business Logic

The domain core represents the heart of hexagonal architecture—the place where business rules live in their purest form, untouched by databases, HTTP frameworks, or message queues. This isolation isn’t academic perfectionism; it’s the foundation that makes your microservices genuinely testable, portable, and resilient to infrastructure changes. When infrastructure concerns infiltrate domain logic, you end up with code that’s difficult to reason about, painful to test, and tightly coupled to technology choices that may change.

Entities and Value Objects: Framework-Free by Design

Your domain models should compile and run without importing a single infrastructure dependency. Entities carry identity and mutable state, while value objects represent immutable concepts defined entirely by their attributes. The distinction matters: two entities with identical attributes remain different if their identities differ, while two value objects with identical attributes are interchangeable.

```typescript
import { OrderId } from '../value-objects/order-id.vo';
import { Money } from '../value-objects/money.vo';
import { OrderItem } from '../value-objects/order-item.vo';
import { OrderPlacedEvent } from '../events/order-placed.event';
// DomainEvent, OrderStatus, OrderModificationError, and EmptyOrderError are
// domain types defined elsewhere in the domain layer (imports omitted).

export class Order {
  private readonly items: OrderItem[] = [];
  private readonly domainEvents: DomainEvent[] = [];

  constructor(
    public readonly id: OrderId,
    public readonly customerId: string,
    private status: OrderStatus = OrderStatus.Draft
  ) {}

  // Invariant: items can only change while the order is a draft.
  addItem(item: OrderItem): void {
    if (this.status !== OrderStatus.Draft) {
      throw new OrderModificationError('Cannot modify a placed order');
    }
    this.items.push(item);
  }

  // State transition: records an event rather than publishing it directly.
  place(): void {
    if (this.items.length === 0) {
      throw new EmptyOrderError('Cannot place an order with no items');
    }
    this.status = OrderStatus.Placed;
    this.domainEvents.push(new OrderPlacedEvent(this.id, this.total()));
  }

  total(): Money {
    return this.items.reduce(
      (sum, item) => sum.add(item.subtotal()),
      Money.zero('USD')
    );
  }

  // Hands recorded events to the caller and clears them, so each is published once.
  pullDomainEvents(): DomainEvent[] {
    const events = [...this.domainEvents];
    this.domainEvents.length = 0;
    return events;
  }
}
```

Notice that Order contains business logic (validation rules, state transitions, calculations) without any decorators, ORM annotations, or framework imports. This entity works identically whether persisted to PostgreSQL, MongoDB, or an in-memory store. The absence of @Entity() decorators or Mongoose schemas isn’t a limitation; it’s a deliberate choice that keeps your business rules independent of persistence technology.
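Order relies on a Money value object (Money.zero and add) that isn't shown. A minimal sketch, assuming amounts are stored as integer minor units (cents) to avoid floating-point rounding and that mixing currencies is a domain error; the of, multiply, and equals methods are illustrative additions:

```typescript
class Money {
  private constructor(
    public readonly amountInCents: number,
    public readonly currency: string,
  ) {
    Object.freeze(this); // value objects are immutable
  }

  static of(amountInCents: number, currency: string): Money {
    if (!Number.isInteger(amountInCents)) {
      throw new Error('Money must be an integer number of minor units');
    }
    return new Money(amountInCents, currency);
  }

  static zero(currency: string): Money {
    return new Money(0, currency);
  }

  // Returns a new instance; never mutates either operand.
  add(other: Money): Money {
    if (other.currency !== this.currency) {
      throw new Error(`Cannot add ${other.currency} to ${this.currency}`);
    }
    return new Money(this.amountInCents + other.amountInCents, this.currency);
  }

  // factor is expected to be a whole quantity; fractional results are rejected by of().
  multiply(factor: number): Money {
    return Money.of(this.amountInCents * factor, this.currency);
  }

  // Equality by value, not identity.
  equals(other: Money): boolean {
    return this.amountInCents === other.amountInCents && this.currency === other.currency;
  }
}
```

Two Money instances with the same amount and currency are interchangeable, which is exactly the value-object property described above; an Order, by contrast, is compared by its id.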

Application Services: Orchestrating Through Ports

Application services coordinate use cases by calling ports, never concrete implementations. They translate between the outside world and domain operations, handling the workflow of a business process without containing the business rules themselves. Think of them as conductors: they know when each instrument should play, but they don’t produce the music.

```typescript
// OrderRepository, PaymentGateway, and DomainEventPublisher are ports (interfaces)
// from the domain layer; commands, ids, and errors are domain types (imports omitted).
export class PlaceOrderService {
  constructor(
    private readonly orderRepository: OrderRepository,
    private readonly paymentGateway: PaymentGateway,
    private readonly eventPublisher: DomainEventPublisher
  ) {}

  async execute(command: PlaceOrderCommand): Promise<OrderId> {
    const order = await this.orderRepository.findById(
      new OrderId(command.orderId)
    );

    if (!order) {
      throw new OrderNotFoundError(command.orderId);
    }

    const paymentResult = await this.paymentGateway.authorize(
      order.total(),
      command.paymentMethodId
    );

    if (!paymentResult.success) {
      throw new PaymentFailedError(paymentResult.reason);
    }

    // Persist first, then publish, so events never describe unsaved state.
    order.place();
    await this.orderRepository.save(order);
    await this.eventPublisher.publishAll(order.pullDomainEvents());

    return order.id;
  }
}
```

The service depends on OrderRepository, PaymentGateway, and DomainEventPublisher, all ports defined as interfaces in the domain layer. The actual Stripe integration or PostgreSQL queries live in adapters, completely invisible to this code. This separation means you can test the entire order placement workflow with in-memory fakes, achieving fast and deterministic tests without Docker containers or network calls.

Domain Events as First-Class Citizens

Domain events capture meaningful business occurrences. They enable loose coupling between bounded contexts and provide a natural integration point for event-driven architectures. Unlike technical events (database triggers, message queue acknowledgments), domain events speak the language of the business: “order placed,” “payment authorized,” “shipment dispatched.”

```typescript
export class OrderPlacedEvent implements DomainEvent {
  public readonly occurredAt: Date = new Date();

  constructor(
    public readonly orderId: OrderId,
    public readonly totalAmount: Money
  ) {}

  get eventName(): string {
    return 'order.placed';
  }
}
```

By modeling events as immutable objects with typed payloads, you create a clear contract for event consumers. Other services can react to OrderPlacedEvent without knowing anything about the Order entity’s internal structure.

💡 Pro Tip: Collect domain events within entities during state changes, then publish them after persistence succeeds. This pattern prevents publishing events for operations that fail to save, avoiding inconsistencies between your data store and event stream.

Maintaining Domain Ignorance

The domain layer imports nothing from infrastructure. Dependencies flow inward: adapters depend on ports, ports are defined in the domain, and the domain depends only on itself and language primitives. This inversion of dependencies—the “D” in SOLID—is what makes hexagonal architecture work.

Enforce this through your module system—separate packages or strict import rules that fail CI when violated. Tools like ESLint’s no-restricted-imports or dedicated architecture testing libraries can automatically catch violations. When a developer accidentally imports an ORM decorator into a domain entity, the build should break immediately.
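The check such tools perform is simple enough to sketch. The function below is a dependency-free illustration, not how ESLint or any architecture-testing library is implemented: it scans a domain file's source text for import, export-from, and require specifiers and reports any that match a forbidden list. The forbidden package names and the /adapters/ path convention are assumptions about project layout, and a regex is only an approximation of real module parsing.

```typescript
// Packages and paths the domain layer must never import (project-specific assumption).
const FORBIDDEN = [/^pg$/, /^express$/, /^amqplib$/, /^typeorm$/, /\/adapters\//];

// Matches: import ... from 'x'; import 'x'; export ... from 'x'; require('x').
// A rough approximation; a real tool parses the syntax tree instead.
const SPECIFIER =
  /(?:import|export)\s+(?:[^'"]*?\sfrom\s+)?['"]([^'"]+)['"]|require\(\s*['"]([^'"]+)['"]\s*\)/g;

interface Violation {
  file: string;
  specifier: string;
}

function findViolations(file: string, source: string): Violation[] {
  const violations: Violation[] = [];
  for (const match of source.matchAll(SPECIFIER)) {
    const specifier = match[1] ?? match[2];
    if (specifier && FORBIDDEN.some(rule => rule.test(specifier))) {
      violations.push({ file, specifier });
    }
  }
  return violations;
}
```

A CI test would read every file under the domain directory, call findViolations on each, and fail if the combined list is non-empty.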

With a well-protected domain core in place, hexagonal architecture delivers its most compelling benefit: a testing strategy that makes infrastructure concerns optional. Your domain logic becomes a stable foundation that outlives any framework or database choice.

Testing Strategy: The Hexagonal Advantage

Hexagonal architecture transforms testing from a painful necessity into a genuine competitive advantage. When business logic has no knowledge of databases, HTTP frameworks, or message queues, you can verify it with pure function calls. When adapters are isolated behind ports, you can test them independently against real infrastructure. This separation creates a testing strategy that’s both faster and more reliable than traditional approaches.

Unit Testing Domain Logic

Domain services in hexagonal architecture accept port interfaces through dependency injection. During testing, you provide simple in-memory implementations rather than reaching for complex mocking frameworks:

```typescript
describe('OrderService', () => {
  let orderService: OrderService;
  let orderRepository: InMemoryOrderRepository;
  let inventoryPort: StubInventoryPort;

  beforeEach(() => {
    // Fresh in-memory adapters for every test: no shared state, no I/O.
    orderRepository = new InMemoryOrderRepository();
    inventoryPort = new StubInventoryPort();
    orderService = new OrderService(orderRepository, inventoryPort);
  });

  it('rejects orders when inventory is insufficient', async () => {
    inventoryPort.setAvailableQuantity('SKU-7842', 5);

    const result = await orderService.placeOrder({
      customerId: 'cust-291',
      items: [{ sku: 'SKU-7842', quantity: 10 }]
    });

    expect(result.isErr()).toBe(true);
    // The error is an instance of the domain error class, not the class itself.
    expect(result.error).toBeInstanceOf(InsufficientInventoryError);
  });

  it('reserves inventory on successful order', async () => {
    inventoryPort.setAvailableQuantity('SKU-7842', 20);

    await orderService.placeOrder({
      customerId: 'cust-291',
      items: [{ sku: 'SKU-7842', quantity: 10 }]
    });

    expect(inventoryPort.getReservedQuantity('SKU-7842')).toBe(10);
  });
});
```

These tests execute in milliseconds. No database connections, no network calls, no flaky timeouts. The InMemoryOrderRepository and StubInventoryPort are trivial implementations, often under 50 lines each, that store data in plain objects or arrays. Because these test doubles implement the same port interfaces as production adapters, they require no mocking framework overhead and remain stable across refactors.

The key insight here is that your domain tests become documentation. A new team member can read through the test suite and understand every business rule without tracing through database schemas or HTTP handlers. The tests express intent directly because they operate at the same level of abstraction as the business requirements themselves.
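For reference, a test double of the kind described above might look like this sketch. The InventoryPort interface is an assumption (the tests only imply that the domain checks and reserves stock by SKU); setAvailableQuantity and getReservedQuantity are the helpers the unit tests call, and the optional constructor map matches how the integration test later in this section seeds stock.

```typescript
// Assumed port shape: the domain asks how much stock exists and reserves it.
interface InventoryPort {
  availableQuantity(sku: string): Promise<number>;
  reserve(sku: string, quantity: number): Promise<void>;
}

class StubInventoryPort implements InventoryPort {
  private readonly available = new Map<string, number>();
  private readonly reserved = new Map<string, number>();

  constructor(initial: Record<string, number> = {}) {
    for (const [sku, qty] of Object.entries(initial)) this.available.set(sku, qty);
  }

  // Test helpers: arrange stock levels and inspect what the domain reserved.
  setAvailableQuantity(sku: string, quantity: number): void {
    this.available.set(sku, quantity);
  }

  getReservedQuantity(sku: string): number {
    return this.reserved.get(sku) ?? 0;
  }

  // Port methods: plain map operations, no network or database.
  async availableQuantity(sku: string): Promise<number> {
    return this.available.get(sku) ?? 0;
  }

  async reserve(sku: string, quantity: number): Promise<void> {
    const stock = this.available.get(sku) ?? 0;
    if (quantity > stock) throw new Error(`Insufficient stock for ${sku}`);
    this.available.set(sku, stock - quantity);
    this.reserved.set(sku, this.getReservedQuantity(sku) + quantity);
  }
}
```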

Testing Adapters with Real Infrastructure

Adapters deserve their own test suite running against actual infrastructure. Testcontainers spins up real databases and message brokers in Docker, eliminating the gap between test and production environments:

```typescript
import { PostgreSqlContainer, StartedPostgreSqlContainer } from '@testcontainers/postgresql';
import { Pool } from 'pg';

describe('PostgresOrderRepository', () => {
  let container: StartedPostgreSqlContainer;
  let pool: Pool;
  let repository: PostgresOrderRepository;

  beforeAll(async () => {
    // Start a disposable PostgreSQL 15 instance in Docker for this suite.
    container = await new PostgreSqlContainer('postgres:15')
      .withDatabase('orders_test')
      .start();

    pool = new Pool({ connectionString: container.getConnectionUri() });
    await runMigrations(pool);
    repository = new PostgresOrderRepository(pool);
  }, 60_000); // pulling the image can exceed the default hook timeout

  afterAll(async () => {
    // Close the pool first so open connections don't keep the test runner alive.
    await pool.end();
    await container.stop();
  });

  it('persists and retrieves orders with all fields intact', async () => {
    const order = Order.create({
      customerId: 'cust-291',
      items: [{ sku: 'SKU-7842', quantity: 10, priceInCents: 2499 }]
    });

    await repository.save(order);
    const retrieved = await repository.findById(order.id);

    expect(retrieved).toEqual(order);
  });
});
```

These tests run slower (seconds rather than milliseconds), but they catch real issues: SQL syntax errors, migration problems, connection pool exhaustion, and serialization bugs. Critically, adapter tests verify the translation layer works correctly without involving business logic. When an adapter test fails, you know exactly where the problem lies.

Integration Testing Through Ports

Integration tests wire the whole application together, substituting fake adapters for external systems, to verify that complete use cases flow correctly through the system. These tests exercise the application’s orchestration logic while avoiding external dependencies:

```typescript
// Application code and test fakes (paths are illustrative).
import { createApplication } from '../src/application';
import { PlaceOrderCommand } from '../src/application/commands';
import { InMemoryEventBus, InMemoryOrderRepository, StubInventoryPort } from './fakes';

describe('Order Placement Flow', () => {
  it('publishes OrderPlaced event after successful order', async () => {
    const eventBus = new InMemoryEventBus();
    const app = createApplication({
      orderRepository: new InMemoryOrderRepository(),
      inventoryPort: new StubInventoryPort({ 'SKU-7842': 100 }),
      eventBus,
    });

    await app.executeCommand(
      new PlaceOrderCommand({ customerId: 'cust-291', items: [{ sku: 'SKU-7842', quantity: 5 }] }),
    );

    expect(eventBus.publishedEvents).toContainEqual(
      expect.objectContaining({ type: 'OrderPlaced', customerId: 'cust-291' }),
    );
  });
});
```

💡 Pro Tip: Create a test application factory that accepts port implementations as parameters. This lets you mix real and fake adapters freely: use a real database with a fake payment gateway, or a fake database with a real email service. This flexibility proves invaluable when debugging production issues that only manifest with specific adapter combinations.
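A minimal sketch of such a factory, assuming simplified, hypothetical ports (`OrderRepository`, `InventoryPort`, `EventBus`) and a single illustrative `placeOrder` use case. `createTestApplication` is a made-up name, not the `createApplication` function from the test above. Each port defaults to an in-memory fake, and a test passes only the overrides it needs.

```typescript
// Hypothetical, simplified ports for this sketch.
interface PlacedOrder {
  id: string;
  customerId: string;
}
interface OrderRepository {
  save(order: PlacedOrder): Promise<void>;
}
interface InventoryPort {
  available(sku: string): Promise<number>;
}
interface DomainEvent {
  type: string;
  [key: string]: unknown;
}
interface EventBus {
  publish(event: DomainEvent): Promise<void>;
}
interface Ports {
  orderRepository: OrderRepository;
  inventoryPort: InventoryPort;
  eventBus: EventBus;
}

// In-memory fakes that serve as defaults.
class InMemoryOrderRepository implements OrderRepository {
  readonly saved: PlacedOrder[] = [];
  async save(order: PlacedOrder): Promise<void> {
    this.saved.push({ ...order });
  }
}
class StubInventoryPort implements InventoryPort {
  constructor(private readonly stock: Record<string, number> = {}) {}
  async available(sku: string): Promise<number> {
    return this.stock[sku] ?? 0;
  }
}
class InMemoryEventBus implements EventBus {
  readonly publishedEvents: DomainEvent[] = [];
  async publish(event: DomainEvent): Promise<void> {
    this.publishedEvents.push(event);
  }
}

// Every port gets an in-memory default; a test overrides only the ports it
// cares about, and any override may be a real adapter instead of a fake.
function createTestApplication(overrides: Partial<Ports> = {}) {
  const ports: Ports = {
    orderRepository: new InMemoryOrderRepository(),
    inventoryPort: new StubInventoryPort(),
    eventBus: new InMemoryEventBus(),
    ...overrides,
  };

  return {
    ports,
    // One illustrative use case, wired to whichever adapters were chosen.
    async placeOrder(customerId: string, sku: string, quantity: number): Promise<void> {
      if ((await ports.inventoryPort.available(sku)) < quantity) {
        throw new Error(`insufficient stock for ${sku}`);
      }
      const order: PlacedOrder = { id: `order-${customerId}-${sku}`, customerId };
      await ports.orderRepository.save(order);
      await ports.eventBus.publish({ type: 'OrderPlaced', orderId: order.id, customerId });
    },
  };
}

// Usage: override inventory and keep a handle on the event bus to inspect it.
const eventBus = new InMemoryEventBus();
const app = createTestApplication({
  inventoryPort: new StubInventoryPort({ 'SKU-7842': 100 }),
  eventBus,
});
const placement = app.placeOrder('cust-291', 'SKU-7842', 5);
```

Because the factory spreads `overrides` over the defaults, a test that needs one real adapter, such as a Postgres-backed repository, passes just that adapter and keeps fakes for everything else.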

The Hexagonal Testing Pyramid

The resulting test distribution looks different from traditional architectures. Domain unit tests form a broad base—hundreds of fast tests covering business rules. Adapter tests form a narrow middle band—dozens of slower tests per adapter. Integration tests sit at the top—a handful of tests verifying critical paths through the system.
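For a sense of what fills that broad base, here is a minimal sketch of a domain unit test. It assumes a simplified, hypothetical `Order` aggregate with a `totalInCents` method and two validation rules; the article's own `Order` may differ. What matters is what is absent: no ports, no fakes, no `await`. Plain `throw`-based checks and a small hypothetical `expectThrows` helper stand in for Jest's `expect` so the block is self-contained.

```typescript
// Hypothetical, simplified Order aggregate: pure TypeScript, no ports, no I/O.
interface OrderLine {
  sku: string;
  quantity: number;
  priceInCents: number;
}

class Order {
  private constructor(
    readonly customerId: string,
    readonly items: readonly OrderLine[],
  ) {}

  static create(input: { customerId: string; items: OrderLine[] }): Order {
    if (input.items.length === 0) throw new Error('an order needs at least one item');
    for (const item of input.items) {
      if (!Number.isInteger(item.quantity) || item.quantity <= 0) {
        throw new Error(`invalid quantity for ${item.sku}`);
      }
    }
    // Copy the lines so later changes to the input cannot alter the order.
    return new Order(input.customerId, input.items.map((item) => ({ ...item })));
  }

  totalInCents(): number {
    return this.items.reduce((sum, item) => sum + item.quantity * item.priceInCents, 0);
  }
}

// Tiny assertion helper: passes only if fn throws an Error mentioning `fragment`.
function expectThrows(fn: () => unknown, fragment: string): void {
  try {
    fn();
  } catch (err) {
    if (err instanceof Error && err.message.includes(fragment)) return;
    throw err;
  }
  throw new Error(`expected an error containing "${fragment}"`);
}

// Domain tests: synchronous and in-process, with nothing to construct but the domain.
const order = Order.create({
  customerId: 'cust-291',
  items: [{ sku: 'SKU-7842', quantity: 10, priceInCents: 2499 }],
});
if (order.totalInCents() !== 24990) throw new Error('total should be 24990');

expectThrows(() => Order.create({ customerId: 'cust-291', items: [] }), 'at least one item');
expectThrows(
  () =>
    Order.create({
      customerId: 'cust-291',
      items: [{ sku: 'SKU-7842', quantity: 0, priceInCents: 2499 }],
    }),
  'invalid quantity',
);
```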

This pyramid gives you confidence where it matters most: in the business logic that defines your system’s behavior. When a domain test fails, you know the business rule is broken. When an adapter test fails, you know the infrastructure integration is broken. When an integration test fails, you know the wiring between components is broken. Each failure points directly to its cause.

The practical benefit compounds over time. Because domain tests perform no I/O, a full domain suite can often run in about a second, fast enough for a genuine test-driven development loop. Meanwhile, adapter tests provide safety nets for infrastructure upgrades, so you can swap Postgres versions or Redis configurations with confidence.

With a solid testing strategy in place, the question becomes practical: how do you get an existing codebase to this architecture without a risky rewrite?

Migration Path: Refactoring to Hexagonal

Adopting hexagonal architecture doesn’t require a ground-up rewrite. The most successful migrations happen incrementally, preserving working functionality while gradually introducing cleaner boundaries.

Identifying Boundaries in Existing Code

Before extracting any code, map your current dependencies. Look for classes or modules that mix business logic with infrastructure concerns—a service that validates orders while also making HTTP calls to payment providers is a prime candidate for separation.

Start by answering three questions:

- Where does business logic live? Trace the code paths that contain your core rules and calculations.

- What external systems does your service communicate with? Databases, message queues, third-party APIs, and file systems are infrastructure concerns.

- Which parts change together? Code that frequently changes for business reasons should be separated from code that changes for technical reasons.

Draw a dependency graph. Arrows pointing from your domain logic outward to infrastructure indicate coupling that hexagonal architecture will invert.
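To make the graph checkable rather than just drawn, a few lines can flag the outward arrows. This is a minimal sketch under stated assumptions: import edges have already been collected (by hand or from a dependency-analysis tool) and resolved to repository-relative paths; domain code lives under `src/domain/` and adapters under `src/infrastructure/`; and `INFRA_PACKAGES` is an illustrative list to adapt. `findOutwardDependencies` is a hypothetical name.

```typescript
// Assumed folder convention; adjust the prefixes to your repository layout.
type Layer = 'domain' | 'application' | 'infrastructure' | 'external';

function layerOf(path: string): Layer {
  if (path.startsWith('src/domain/')) return 'domain';
  if (path.startsWith('src/application/')) return 'application';
  if (path.startsWith('src/infrastructure/')) return 'infrastructure';
  return 'external';
}

// Illustrative list of packages that count as infrastructure.
const INFRA_PACKAGES = new Set(['pg', 'kafkajs', 'ioredis', 'axios', '@aws-sdk/client-dynamodb']);

// 'pg/lib/client' -> 'pg'; '@aws-sdk/client-dynamodb/dist' -> '@aws-sdk/client-dynamodb'
function packageName(specifier: string): string {
  const parts = specifier.split('/');
  return specifier.startsWith('@') ? parts.slice(0, 2).join('/') : parts[0];
}

interface ImportEdge {
  from: string; // importing module (repository-relative path)
  to: string; // imported module path or package specifier
}

interface Violation extends ImportEdge {
  reason: string;
}

// Flags arrows that point from domain code outward to infrastructure.
function findOutwardDependencies(edges: ImportEdge[]): Violation[] {
  const violations: Violation[] = [];
  for (const edge of edges) {
    if (layerOf(edge.from) !== 'domain') continue;
    const target = layerOf(edge.to);
    if (target === 'infrastructure') {
      violations.push({ ...edge, reason: 'domain imports an infrastructure module' });
    } else if (target === 'external' && INFRA_PACKAGES.has(packageName(edge.to))) {
      violations.push({ ...edge, reason: 'domain imports an infrastructure package' });
    }
  }
  return violations;
}

// Usage with a hand-written edge list.
const edges: ImportEdge[] = [
  { from: 'src/domain/order.ts', to: 'src/domain/money.ts' },
  { from: 'src/domain/order-service.ts', to: 'pg' },
  { from: 'src/domain/order-service.ts', to: 'src/infrastructure/payment-client.ts' },
  { from: 'src/infrastructure/postgres-order-repository.ts', to: 'src/domain/order.ts' },
];
const violations = findOutwardDependencies(edges);
```

Edges from infrastructure into the domain, like the last one above, are deliberately allowed: that is the inverted direction the architecture aims for.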

Incremental Extraction: The Strangler Fig Approach

The strangler fig pattern—gradually replacing legacy code by growing new implementations alongside it—works exceptionally well for architectural migrations.

Pick one infrastructure dependency and extract it behind a port. A database repository is often the safest starting point: define an interface representing the operations your domain needs, implement an adapter wrapping your existing data access code, and inject the adapter into your services.
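The three steps can be sketched as follows. Everything here is hypothetical: `LegacyOrderDao` stands in for whatever data-access code you already have (it is backed by a `Map` only so the example runs), and `LegacyDaoOrderRepository` and `OrderService.applyDiscount` are illustrative names. The adapter translates between legacy row shapes and domain objects, while the service depends only on the port.

```typescript
// Hypothetical legacy data-access code, left unchanged by the migration.
// Backed by a Map only so this sketch runs; the real DAO would issue SQL.
interface OrderRow {
  order_id: string;
  customer_id: string;
  total_cents: number;
}

class LegacyOrderDao {
  private readonly table = new Map<string, OrderRow>();

  upsertRow(row: OrderRow): void {
    this.table.set(row.order_id, { ...row });
  }

  selectById(orderId: string): OrderRow | undefined {
    const row = this.table.get(orderId);
    return row ? { ...row } : undefined;
  }
}

// Domain model, phrased in domain terms rather than column names.
interface Order {
  id: string;
  customerId: string;
  totalCents: number;
}

// Step 1: the port, listing only the operations the domain needs.
interface OrderRepository {
  save(order: Order): Promise<void>;
  findById(id: string): Promise<Order | null>;
}

// Step 2: an adapter that wraps the existing DAO and translates rows <-> domain objects.
class LegacyDaoOrderRepository implements OrderRepository {
  constructor(private readonly dao: LegacyOrderDao) {}

  async save(order: Order): Promise<void> {
    this.dao.upsertRow({
      order_id: order.id,
      customer_id: order.customerId,
      total_cents: order.totalCents,
    });
  }

  async findById(id: string): Promise<Order | null> {
    const row = this.dao.selectById(id);
    return row
      ? { id: row.order_id, customerId: row.customer_id, totalCents: row.total_cents }
      : null;
  }
}

// Step 3: the service receives the port through its constructor and never sees the DAO.
class OrderService {
  constructor(private readonly orders: OrderRepository) {}

  async applyDiscount(orderId: string, percent: number): Promise<Order> {
    if (percent < 0 || percent > 100) throw new Error('discount must be between 0 and 100');
    const order = await this.orders.findById(orderId);
    if (!order) throw new Error(`order ${orderId} not found`);
    const discounted: Order = {
      ...order,
      totalCents: Math.round((order.totalCents * (100 - percent)) / 100),
    };
    await this.orders.save(discounted);
    return discounted;
  }
}

// Usage: existing data stays where it is; only the wiring changes.
const dao = new LegacyOrderDao();
dao.upsertRow({ order_id: 'order-1', customer_id: 'cust-291', total_cents: 10000 });
const service = new OrderService(new LegacyDaoOrderRepository(dao));
const result = service.applyDiscount('order-1', 10);
```

The legacy DAO itself stays untouched. Once the service talks to the port, replacing the DAO later, or testing the service against an in-memory fake, no longer touches business logic.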

Your first extraction will feel over-engineered. That’s expected. The value compounds as you extract additional concerns and your domain core emerges, free from infrastructure noise.

💡 Pro Tip: Extract ports at the pace of your testing needs. If you’re struggling to test a particular service, that’s where hexagonal boundaries will deliver the most immediate value.

Common Pitfalls

Over-abstraction kills more migrations than technical challenges. Not every database query needs its own repository method. Not every external call needs a dedicated port. Extract boundaries where they reduce cognitive load and improve testability—nowhere else.

Premature generalization shows up as ports for systems you don’t yet interact with, or adapters designed for flexibility you don’t need. Build for today’s requirements; refactor when tomorrow’s arrive.

When Hexagonal Is Overkill

Simple CRUD services with minimal business logic gain little from hexagonal architecture. If your service primarily shuffles data between an API and a database with straightforward validation, the overhead of ports and adapters adds complexity without proportional benefit.

Similarly, prototypes and short-lived projects rarely justify the investment. Hexagonal architecture pays dividends over time through maintainability and testability—benefits that matter less when the code has a limited lifespan.

With migration strategies established, you now have a complete toolkit for implementing hexagonal architecture in your microservices—from mental models through testing approaches to incremental adoption paths.

Key Takeaways

- Define ports as interfaces in your domain layer before writing any adapter code—this forces you to think about what your domain actually needs

- Start with two adapters per port (real implementation + in-memory fake) to validate your port design is implementation-agnostic

- Use dependency injection at the composition root to wire adapters to ports, keeping your domain free of container dependencies

- Test your domain logic with fake adapters first; add integration tests with real infrastructure only for adapter implementations