Building Fitness Program Adaptation Algorithms

Your users have been following the same workout plan for six weeks. Some are crushing their lifts and ready for more. Others are grinding through plateaus, accumulating fatigue without progress. A static program treats both the same way, and that’s the problem.

Adaptive fitness programming solves this by treating each user’s training as a feedback loop. Track performance, analyze trends, and adjust variables automatically. The goal isn’t to replace human coaching judgment entirely, but to handle the repetitive pattern recognition that computers do well: detecting when someone is ready for more weight, needs a deload week, or should shift their rep ranges.

In this article, we’ll build an adaptive fitness system from the ground up. We’ll start with a data model that captures the right information, implement rule-based adaptation logic, then extend to machine learning for smarter progression predictions. By the end, you’ll have working code that automatically adjusts training variables based on real performance data.

The data model for tracking progress

Before algorithms can adapt anything, they need data. A fitness tracking schema must capture workout structure, planned versus actual performance, and user feedback signals that indicate fatigue or readiness.

    CREATE TABLE users (
        id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
        created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW(),
        training_age_months INTEGER DEFAULT 0,
        bodyweight_kg DECIMAL(5, 2)
    );

    CREATE TABLE exercises (
        id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
        name VARCHAR(100) NOT NULL UNIQUE,
        movement_pattern VARCHAR(50),  -- 'push', 'pull', 'squat', 'hinge', 'carry'
        primary_muscle_group VARCHAR(50),
        is_compound BOOLEAN DEFAULT true
    );

    CREATE TABLE workout_sessions (
        id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
        user_id UUID REFERENCES users(id),
        scheduled_date DATE NOT NULL,
        completed_at TIMESTAMP WITH TIME ZONE,
        session_rpe DECIMAL(3, 1),  -- Rate of perceived exertion (1-10)
        notes TEXT,
        fatigue_rating INTEGER CHECK (fatigue_rating BETWEEN 1 AND 5),
        sleep_quality INTEGER CHECK (sleep_quality BETWEEN 1 AND 5)
    );

    CREATE TABLE workout_sets (
        id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
        session_id UUID REFERENCES workout_sessions(id),
        exercise_id UUID REFERENCES exercises(id),
        set_number INTEGER NOT NULL,
        -- Planned values (from program)
        planned_reps INTEGER,
        planned_weight_kg DECIMAL(6, 2),
        planned_rpe DECIMAL(3, 1),
        -- Actual performance
        actual_reps INTEGER,
        actual_weight_kg DECIMAL(6, 2),
        actual_rpe DECIMAL(3, 1),
        -- Derived metrics
        completed BOOLEAN DEFAULT false,
        created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
    );

    -- Indexes for performance queries
    -- (workout_sets has no user column, so the index is named for what it covers)
    CREATE INDEX idx_workout_sets_exercise_session ON workout_sets(exercise_id, session_id);
    CREATE INDEX idx_sessions_user_date ON workout_sessions(user_id, scheduled_date DESC);

The key insight here is tracking both planned and actual values. The delta between what was prescribed and what was achieved tells us whether the program is too easy, too hard, or just right.

    from dataclasses import dataclass
    from decimal import Decimal
    from typing import Optional


    @dataclass
    class WorkoutSet:
        planned_reps: int
        planned_weight_kg: Decimal
        planned_rpe: Decimal
        actual_reps: Optional[int] = None
        actual_weight_kg: Optional[Decimal] = None
        actual_rpe: Optional[Decimal] = None

        @property
        def volume(self) -> Decimal:
            """Completed volume load (weight x reps); zero if nothing was logged."""
            if self.actual_reps and self.actual_weight_kg:
                return self.actual_weight_kg * self.actual_reps
            return Decimal(0)

        @property
        def performance_ratio(self) -> Optional[float]:
            """Ratio of actual to planned volume. >1 means exceeded plan."""
            # None means "not logged"; a logged set with 0 reps is a real 0.0 ratio.
            if self.actual_reps is None or not self.planned_reps:
                return None
            actual = float(self.actual_weight_kg or 0) * self.actual_reps
            planned = float(self.planned_weight_kg) * self.planned_reps
            return actual / planned if planned > 0 else None

📝 Note: RPE (Rate of Perceived Exertion) uses a 1-10 scale where 10 is maximum effort. RPE 8 means “could have done 2 more reps.” This subjective measure helps detect fatigue before performance drops.
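The rule-based adapter later in this article reads per-session aggregates (`completion_rate`, `avg_rpe`, `avg_reps`) rather than raw sets. One way to derive them from planned and actual values is sketched below. The `summarize_session` helper and the plain-dict row shape are assumptions for illustration, not part of the schema above.

```python
from typing import Optional


def summarize_session(sets: list[dict]) -> Optional[dict]:
    """Collapse one exercise's sets in a session into adaptation aggregates.

    Each row is assumed to carry planned_reps, actual_reps, and actual_rpe,
    mirroring the workout_sets columns. Returns None when nothing was logged.
    """
    logged = [s for s in sets if s.get("actual_reps") is not None]
    if not logged:
        return None

    planned_total = sum(s["planned_reps"] for s in sets)
    actual_total = sum(s["actual_reps"] for s in logged)
    rpes = [float(s["actual_rpe"]) for s in logged if s.get("actual_rpe") is not None]

    return {
        # Share of prescribed reps completed; extra reps push it above 1.0.
        "completion_rate": actual_total / planned_total if planned_total else 0.0,
        "avg_reps": actual_total / len(logged),
        "avg_rpe": sum(rpes) / len(rpes) if rpes else None,
    }


example_sets = [
    {"planned_reps": 5, "actual_reps": 5, "actual_rpe": 7.5},
    {"planned_reps": 5, "actual_reps": 5, "actual_rpe": 8.0},
    {"planned_reps": 5, "actual_reps": 3, "actual_rpe": 9.5},
]
summary = summarize_session(example_sets)
```

Here the lifter completed 13 of 15 prescribed reps, so `completion_rate` lands just under 0.87. Sessions where no RPE was recorded come back with `avg_rpe` set to `None` and would need to be filtered out before averaging.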

Rule-based adaptation logic

Before reaching for machine learning, rule-based systems handle most adaptation scenarios well. They’re interpretable, debuggable, and don’t require training data. The core principle is progressive overload: gradually increase training stress to drive adaptation.

    from dataclasses import dataclass
    from decimal import Decimal
    from enum import Enum


    class AdaptationAction(Enum):
        INCREASE_WEIGHT = "increase_weight"
        INCREASE_REPS = "increase_reps"
        DECREASE_WEIGHT = "decrease_weight"
        MAINTAIN = "maintain"
        DELOAD = "deload"


    @dataclass
    class ProgressionRule:
        """Defines conditions for training variable adjustments."""
        min_completion_rate: float
        min_sessions_at_weight: int
        rpe_threshold_low: float   # Below this = too easy
        rpe_threshold_high: float  # Above this = too hard
        weight_increment_kg: Decimal
        rep_increment: int


    class RuleBasedAdapter:
        """Adapts training variables based on performance rules."""

        def __init__(self, rule: ProgressionRule):
            self.rule = rule

        def evaluate_exercise_progress(
            self,
            recent_sessions: list[dict],
            current_weight: Decimal,
            target_reps: int,
        ) -> tuple[AdaptationAction, dict]:
            """
            Analyze recent performance and recommend adaptation.

            Args:
                recent_sessions: Last N sessions with this exercise, oldest first
                current_weight: Current working weight
                target_reps: Target reps per set

            Returns:
                Tuple of (action, parameters for the action)
            """
            if not recent_sessions or len(recent_sessions) < self.rule.min_sessions_at_weight:
                return AdaptationAction.MAINTAIN, {"reason": "insufficient_data"}

            # Calculate aggregate metrics
            n = len(recent_sessions)
            avg_completion = sum(s["completion_rate"] for s in recent_sessions) / n
            avg_rpe = sum(s["avg_rpe"] for s in recent_sessions) / n
            avg_reps_achieved = sum(s["avg_reps"] for s in recent_sessions) / n

            # Check for overreaching (needs deload)
            consecutive_failures = self._count_consecutive_failures(recent_sessions)
            if consecutive_failures >= 2 or avg_rpe > 9.5:
                return AdaptationAction.DELOAD, {
                    "reason": "overreaching_detected",
                    "new_weight": current_weight * Decimal("0.9"),
                    "deload_duration_weeks": 1,
                }

            # Check if too easy
            if avg_rpe < self.rule.rpe_threshold_low and avg_completion >= 1.0:
                if avg_reps_achieved >= target_reps + 2:
                    # Consistently exceeding reps = increase weight
                    return AdaptationAction.INCREASE_WEIGHT, {
                        "reason": "exceeded_target_reps",
                        "new_weight": current_weight + self.rule.weight_increment_kg,
                    }
                # Meeting targets easily = increase reps first
                return AdaptationAction.INCREASE_REPS, {
                    "reason": "low_rpe_at_target",
                    "new_reps": target_reps + self.rule.rep_increment,
                }

            # Check if too hard
            if avg_rpe > self.rule.rpe_threshold_high or avg_completion < self.rule.min_completion_rate:
                return AdaptationAction.DECREASE_WEIGHT, {
                    "reason": "excessive_difficulty",
                    # Never prescribe a negative load
                    "new_weight": max(Decimal("0"), current_weight - self.rule.weight_increment_kg),
                }

            # Goldilocks zone: every prescribed rep completed at a manageable RPE.
            # The 1.0 bar is an assumed threshold. Re-testing min_completion_rate and
            # rpe_threshold_high here would always pass after the check above,
            # which would make MAINTAIN unreachable.
            if avg_completion >= 1.0:
                return AdaptationAction.INCREASE_WEIGHT, {
                    "reason": "progression_criteria_met",
                    "new_weight": current_weight + self.rule.weight_increment_kg,
                }

            return AdaptationAction.MAINTAIN, {"reason": "within_adaptation_window"}

        def _count_consecutive_failures(self, sessions: list[dict]) -> int:
            """Count the most recent consecutive sessions below the completion threshold."""
            count = 0
            for session in reversed(sessions):
                if session["completion_rate"] < self.rule.min_completion_rate:
                    count += 1
                else:
                    break
            return count


    # Standard progression rules for different training goals
    STRENGTH_RULES = ProgressionRule(
        min_completion_rate=0.9,
        min_sessions_at_weight=2,
        rpe_threshold_low=6.0,
        rpe_threshold_high=9.0,
        weight_increment_kg=Decimal("2.5"),
        rep_increment=1,
    )

    HYPERTROPHY_RULES = ProgressionRule(
        min_completion_rate=0.85,
        min_sessions_at_weight=3,
        rpe_threshold_low=6.5,
        rpe_threshold_high=8.5,
        weight_increment_kg=Decimal("2.0"),
        rep_increment=2,
    )

The rule system follows a simple decision tree: check for overreaching first (safety), then check if training is too easy or too hard, and finally determine if the user is ready to progress. Each decision returns both an action and the reasoning, making the system auditable.

💡 Pro Tip: Log every adaptation decision with its reasoning. When users ask “why did my weight go down?”, you can show them the data that triggered the deload.
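To make the tip above concrete, here is a minimal sketch of a decision log entry. The `log_decision` helper and its field names are hypothetical. The only assumption about the adapter is that it yields an action name plus a parameter dict containing a `reason`, as shown earlier.

```python
import json
from datetime import datetime, timezone
from decimal import Decimal


def log_decision(user_id: str, exercise: str, action: str, params: dict, inputs: dict) -> str:
    """Serialize one adaptation decision as a JSON line.

    `inputs` holds the aggregates the decision was based on, so the
    reasoning can be replayed when a user asks why a weight changed.
    """
    record = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "exercise": exercise,
        "action": action,
        "params": params,
        "inputs": inputs,
    }
    # Decimal is not JSON-serializable by default; storing it as a string
    # keeps the exact value.
    return json.dumps(record, default=str, sort_keys=True)


line = log_decision(
    user_id="user-123",
    exercise="back_squat",
    action="deload",
    params={"reason": "overreaching_detected", "new_weight": Decimal("90.0")},
    inputs={"avg_rpe": 9.6, "avg_completion": 0.8, "consecutive_failures": 2},
)
decoded = json.loads(line)
```

Writing one line per decision keeps the log append-only and easy to query later, which also makes it a natural source of training labels if you move on to ML.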

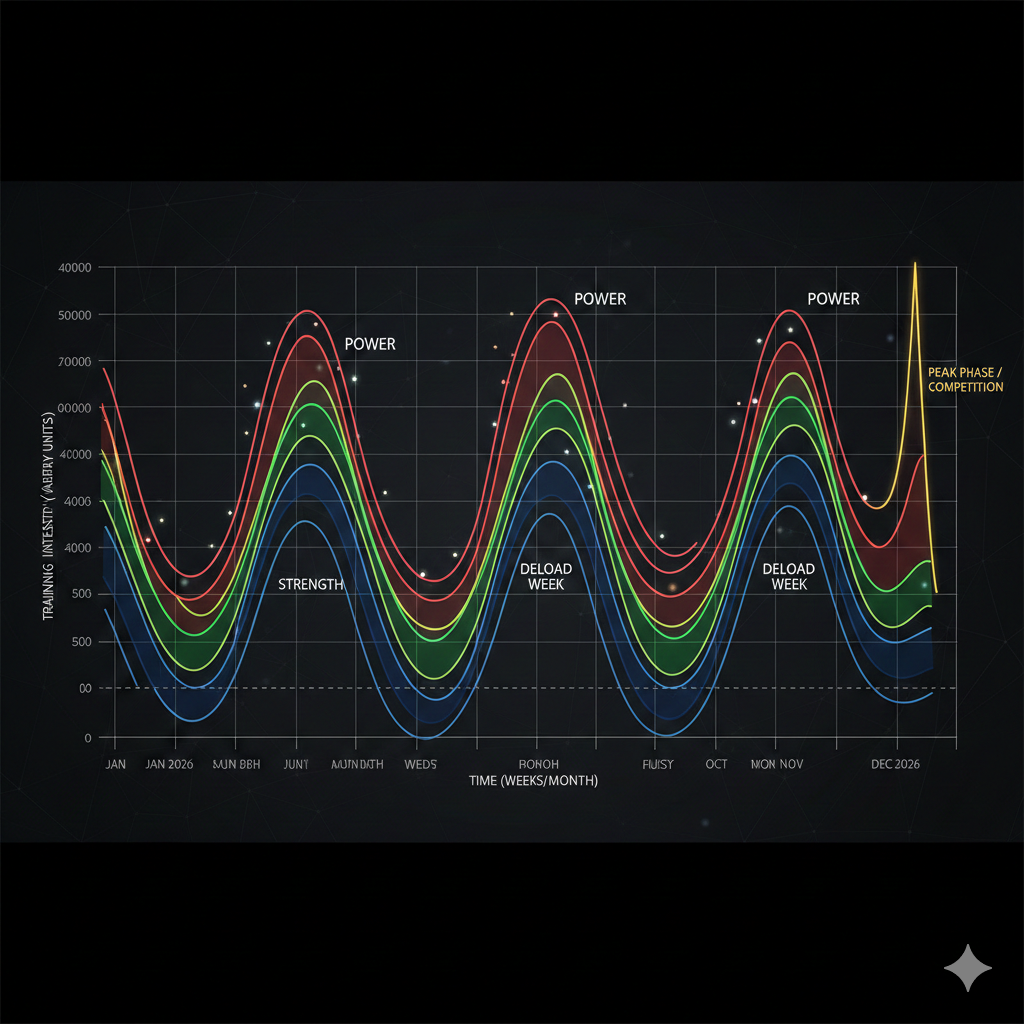

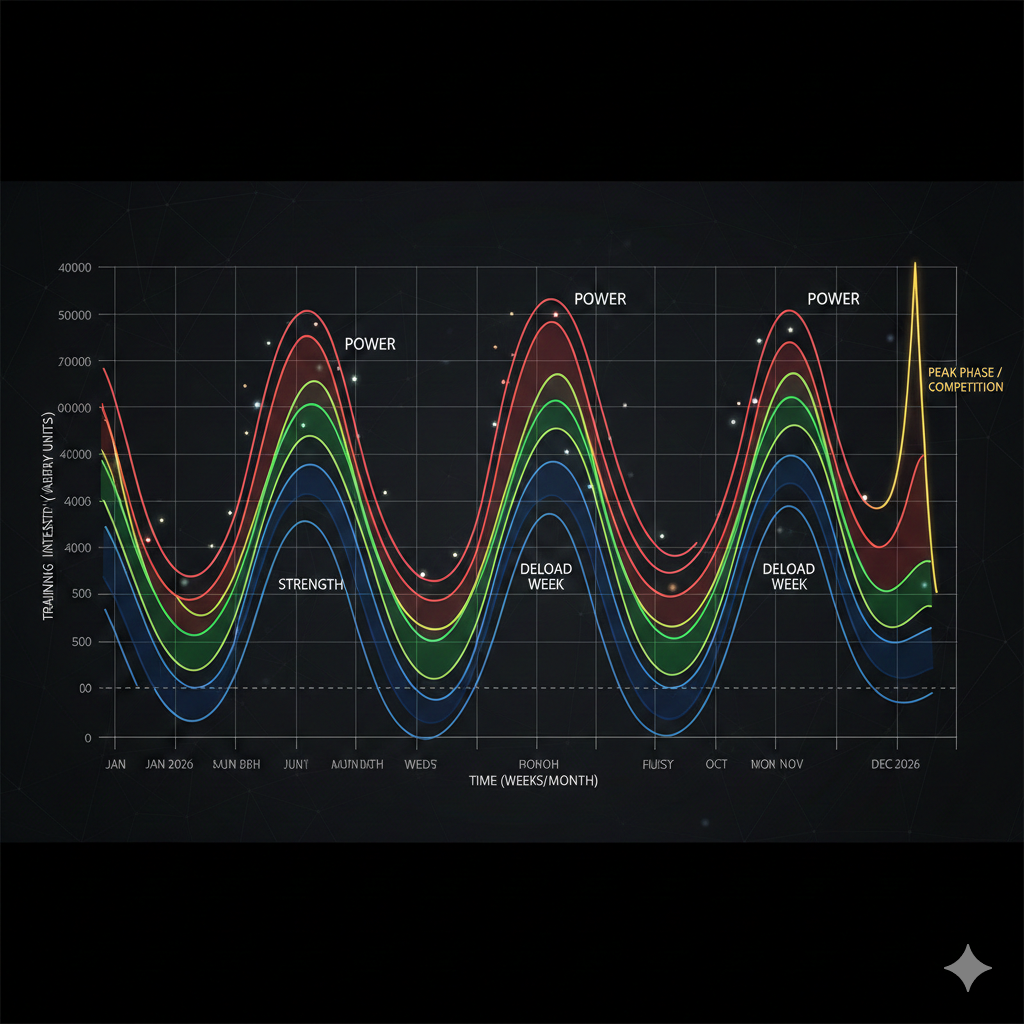

Periodization models

Real training programs don’t just progress linearly. Periodization structures training into phases with different goals, allowing for systematic variation that prevents plateaus and manages fatigue.

    from dataclasses import dataclass
    from decimal import Decimal
    from enum import Enum


    class PeriodizationType(Enum):
        LINEAR = "linear"
        UNDULATING_DAILY = "daily_undulating"
        UNDULATING_WEEKLY = "weekly_undulating"
        BLOCK = "block"


    @dataclass
    class TrainingPhase:
        name: str
        duration_weeks: int
        rep_range: tuple[int, int]
        intensity_percent: tuple[float, float]  # Fraction of 1RM (0.65 = 65%)
        volume_modifier: float  # 1.0 = baseline


    @dataclass
    class PeriodizationPlan:
        type: PeriodizationType
        phases: list[TrainingPhase]
        deload_frequency_weeks: int
        deload_volume_reduction: float


    class LinearPeriodization:
        """Classic linear periodization: high volume -> high intensity."""

        PHASES = [
            TrainingPhase("hypertrophy", 4, (8, 12), (0.65, 0.75), 1.2),
            TrainingPhase("strength", 4, (4, 6), (0.80, 0.88), 1.0),
            TrainingPhase("peaking", 2, (1, 3), (0.90, 0.97), 0.7),
            TrainingPhase("deload", 1, (8, 10), (0.50, 0.60), 0.5),
        ]

        def get_phase_for_week(self, week_number: int) -> TrainingPhase:
            """Map a 0-indexed week to its phase; the cycle repeats after the last phase."""
            total_weeks = sum(p.duration_weeks for p in self.PHASES)
            cycle_week = week_number % total_weeks
            accumulated = 0
            for phase in self.PHASES:
                accumulated += phase.duration_weeks
                if cycle_week < accumulated:
                    return phase
            return self.PHASES[-1]


    class DailyUndulatingPeriodization:
        """DUP: Vary rep ranges within each week."""

        DAILY_TEMPLATES = {
            "heavy": TrainingPhase("heavy", 1, (3, 5), (0.85, 0.90), 0.8),
            "moderate": TrainingPhase("moderate", 1, (6, 8), (0.75, 0.82), 1.0),
            "light": TrainingPhase("light", 1, (10, 12), (0.65, 0.72), 1.2),
        }

        WEEKLY_ROTATION = ["heavy", "light", "moderate"]

        def get_phase_for_session(self, session_number: int) -> TrainingPhase:
            day_index = session_number % len(self.WEEKLY_ROTATION)
            template_name = self.WEEKLY_ROTATION[day_index]
            return self.DAILY_TEMPLATES[template_name]


    class PeriodizationEngine:
        """Generate workout parameters based on periodization model."""

        def __init__(self, model: PeriodizationType):
            self.model = model
            self._linear = LinearPeriodization()
            self._dup = DailyUndulatingPeriodization()

        def calculate_workout_parameters(
            self,
            user_1rm: Decimal,
            week_number: int,
            session_in_week: int,
        ) -> dict:
            """
            Calculate target weight, reps, and sets for a workout.

            Args:
                user_1rm: User's estimated one-rep max for the exercise
                week_number: Current week in the program (0-indexed)
                session_in_week: Which session this week (0-indexed)

            Returns:
                Dict with target_weight, target_reps, target_sets, phase_name
            """
            if self.model == PeriodizationType.LINEAR:
                phase = self._linear.get_phase_for_week(week_number)
            elif self.model == PeriodizationType.UNDULATING_DAILY:
                session_number = week_number * 3 + session_in_week  # Assuming 3x/week
                phase = self._dup.get_phase_for_session(session_number)
            else:
                raise ValueError(f"Unsupported periodization type: {self.model}")

            # Calculate target intensity (middle of range)
            intensity = (phase.intensity_percent[0] + phase.intensity_percent[1]) / 2
            target_weight = user_1rm * Decimal(str(intensity))

            # Calculate reps (middle of range)
            target_reps = (phase.rep_range[0] + phase.rep_range[1]) // 2

            # Calculate sets based on volume modifier. round() is used instead of
            # int() so a 1.2 modifier on 3 base sets yields 4 rather than truncating to 3.
            base_sets = 4 if phase.rep_range[1] <= 6 else 3
            target_sets = max(2, round(base_sets * phase.volume_modifier))

            return {
                # Round to nearest 2.5kg
                "target_weight": round(target_weight / Decimal("2.5")) * Decimal("2.5"),
                "target_reps": target_reps,
                "target_sets": target_sets,
                "phase_name": phase.name,
                "intensity_percent": intensity,
            }

Daily undulating periodization (DUP) has gained popularity because it provides variety within each week while still allowing progressive overload. Research suggests it may produce similar or better results than linear periodization for intermediate lifters.
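The engine takes `user_1rm` as an input, but the article does not show where that number comes from. A common approach is to estimate it from a logged set with the Epley formula, 1RM = weight × (1 + reps / 30). The sketch below assumes that formula; the `estimate_1rm` and `round_to_increment` helpers are hypothetical names, and the 12-rep cap is an assumption reflecting that such estimates get less reliable as reps climb.

```python
from decimal import Decimal
from typing import Optional


def estimate_1rm(weight_kg: Decimal, reps: int, max_reps: int = 12) -> Optional[Decimal]:
    """Estimate a one-rep max from a single set using the Epley formula."""
    if reps < 1 or reps > max_reps or weight_kg <= 0:
        return None
    if reps == 1:
        return weight_kg  # a true single is its own 1RM
    return weight_kg * (Decimal(1) + Decimal(reps) / Decimal(30))


def round_to_increment(weight_kg: Decimal, increment: Decimal = Decimal("2.5")) -> Decimal:
    """Round to the nearest loadable weight, mirroring the engine's 2.5 kg rounding."""
    return round(weight_kg / increment) * increment


# A set of 5 reps at 100 kg implies a 1RM of roughly 116.7 kg.
one_rm = estimate_1rm(Decimal("100"), 5)

# 0.875 is the midpoint of the DUP "heavy" intensity range (0.85 to 0.90).
heavy_day = round_to_increment(one_rm * Decimal("0.875"))
```

With these inputs the heavy-day target comes out at 102.5 kg. Re-estimating the 1RM from recent top sets, rather than testing a true max, keeps the percentages current without adding a maximal attempt to the program.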

Machine learning for progression prediction

Rule-based systems work well for textbook scenarios, but real users have irregular schedules, varying recovery capacity, and individual response rates. Machine learning can learn these patterns from historical data.

    from dataclasses import dataclass
    from typing import Optional

    import numpy as np
    from tensorflow import keras


    @dataclass
    class ProgressionFeatures:
        """Features for progression prediction model."""
        current_weight: float
        current_reps: float
        avg_rpe_last_3: float
        completion_rate_last_3: float
        weeks_at_current_weight: int
        training_age_months: int
        sleep_quality_avg: float
        days_since_last_session: int
        volume_trend: float  # Positive = increasing, negative = decreasing


    class ProgressionPredictor:
        """Neural network for predicting optimal weight progression."""

        def __init__(self, model_path: Optional[str] = None):
            if model_path:
                self.model = keras.models.load_model(model_path)
            else:
                self.model = self._build_model()

        def _build_model(self) -> keras.Model:
            """Build the progression prediction network."""
            model = keras.Sequential([
                keras.layers.Input(shape=(9,)),  # 9 input features
                keras.layers.Dense(64, activation='relu'),
                keras.layers.BatchNormalization(),
                keras.layers.Dropout(0.2),
                keras.layers.Dense(32, activation='relu'),
                keras.layers.BatchNormalization(),
                keras.layers.Dropout(0.2),
                keras.layers.Dense(16, activation='relu'),
                # Output: [weight_change, confidence]
                keras.layers.Dense(2, activation='linear'),
            ])

            model.compile(
                optimizer=keras.optimizers.Adam(learning_rate=0.001),
                loss='mse',
                metrics=['mae'],
            )
            return model

        def predict_progression(self, features: ProgressionFeatures) -> tuple[float, float]:
            """
            Predict optimal weight change and confidence.

            Returns:
                Tuple of (recommended_weight_change_kg, confidence_0_to_1)
            """
            feature_array = np.array([[
                features.current_weight,
                features.current_reps,
                features.avg_rpe_last_3,
                features.completion_rate_last_3,
                features.weeks_at_current_weight,
                features.training_age_months,
                features.sleep_quality_avg,
                features.days_since_last_session,
                features.volume_trend,
            ]], dtype=float)

            # Normalize features (in production, use fitted scaler)
            feature_array = self._normalize(feature_array)

            prediction = self.model.predict(feature_array, verbose=0)[0]
            weight_change = float(prediction[0])
            # Squash the raw second output into (0, 1) with a sigmoid
            confidence = float(1.0 / (1.0 + np.exp(-prediction[1])))

            return weight_change, confidence

        def _normalize(self, features: np.ndarray) -> np.ndarray:
            """Normalize features to [0, 1] range, clamping out-of-range values."""
            # In production, these would come from training data statistics
            mins = np.array([20, 1, 1, 0, 0, 0, 1, 0, -1])
            maxs = np.array([300, 20, 10, 1, 12, 120, 5, 14, 1])
            return np.clip((features - mins) / (maxs - mins + 1e-8), 0.0, 1.0)

        def train(self, X: np.ndarray, y: np.ndarray, epochs: int = 100):
            """Train the model on historical progression data.

            X holds raw (unnormalized) features with shape (n, 9). y has shape
            (n, 2); because the second output passes through a sigmoid at
            inference, its targets are assumed to be in logit space.
            """
            self.model.fit(
                self._normalize(X),  # same scaling as predict_progression
                y,
                epochs=epochs,
                batch_size=32,
                validation_split=0.2,
                callbacks=[
                    keras.callbacks.EarlyStopping(patience=10, restore_best_weights=True)
                ],
            )

        def save(self, path: str):
            self.model.save(path)

The model outputs both a weight change recommendation and a confidence score. The score is only meaningful if the training targets include a confidence signal, so treat it with care. Low-confidence predictions should fall back to rule-based logic.

⚠️ Warning: ML models for fitness adaptation require substantial training data (thousands of user-weeks) to generalize well. Start with rule-based systems and collect data before deploying ML in production.
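The `_normalize` method above hard-codes feature ranges and notes that production code should use statistics fitted on training data. A minimal, dependency-free sketch of that idea follows. `MinMaxStats` is a hypothetical name, and in practice a library scaler would likely serve the same purpose.

```python
from dataclasses import dataclass


@dataclass
class MinMaxStats:
    """Per-feature minimums and maximums learned from training rows."""
    mins: list[float]
    maxs: list[float]

    @classmethod
    def fit(cls, rows: list[list[float]]) -> "MinMaxStats":
        columns = list(zip(*rows))  # transpose rows into per-feature columns
        return cls(mins=[min(c) for c in columns], maxs=[max(c) for c in columns])

    def transform(self, row: list[float]) -> list[float]:
        scaled = []
        for value, lo, hi in zip(row, self.mins, self.maxs):
            span = hi - lo
            # A constant feature carries no information; map it to 0.0.
            x = (value - lo) / span if span > 0 else 0.0
            # Clamp so unseen values outside the training range stay in [0, 1].
            scaled.append(min(1.0, max(0.0, x)))
        return scaled


# Three illustrative training rows: [weight_kg, reps, avg_rpe]
training_rows = [
    [60.0, 5.0, 7.0],
    [100.0, 8.0, 8.5],
    [140.0, 3.0, 9.0],
]
stats = MinMaxStats.fit(training_rows)
scaled = stats.transform([100.0, 10.0, 6.0])
```

The fitted statistics should be saved alongside the model so that training and inference always scale features the same way.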

Integrating with workout tracking

The service layer ties everything together, coordinating periodization, rule-based adaptation, and ML predictions.

    from decimal import Decimal
    from typing import Optional

    from adaptation.rules import RuleBasedAdapter, STRENGTH_RULES, AdaptationAction
    from adaptation.periodization import PeriodizationEngine, PeriodizationType
    from adaptation.ml_model import ProgressionPredictor, ProgressionFeatures


    class AdaptiveWorkoutService:
        """Generates and adapts workouts based on user performance."""

        def __init__(self, db_pool, use_ml: bool = False, ml_model_path: Optional[str] = None):
            self.db = db_pool
            self.rule_adapter = RuleBasedAdapter(STRENGTH_RULES)
            self.periodization = PeriodizationEngine(PeriodizationType.UNDULATING_DAILY)
            self.ml_predictor = ProgressionPredictor(ml_model_path) if use_ml else None
            self.ml_confidence_threshold = 0.7

        async def get_adapted_weight(
            self,
            current_stats: dict,
            recent_sessions: list[dict],
            period_params: dict,
        ) -> Decimal:
            """Determine adapted weight using ML or rules."""
            current_weight = current_stats["last_weight"]

            # Try ML prediction first if enabled and confident.
            # Skip it when there is no history, since the averages below divide by n.
            if self.ml_predictor and recent_sessions:
                n = len(recent_sessions)
                features = ProgressionFeatures(
                    current_weight=float(current_weight),
                    current_reps=float(current_stats["last_reps"]),
                    avg_rpe_last_3=sum(s["avg_rpe"] for s in recent_sessions) / n,
                    completion_rate_last_3=sum(s["completion_rate"] for s in recent_sessions) / n,
                    weeks_at_current_weight=current_stats["weeks_at_weight"],
                    training_age_months=current_stats["training_age_months"],
                    sleep_quality_avg=sum(s.get("sleep_quality", 3) for s in recent_sessions) / n,
                    days_since_last_session=current_stats["days_since_last"],
                    volume_trend=self._calculate_volume_trend(recent_sessions),
                )
                weight_change, confidence = self.ml_predictor.predict_progression(features)
                if confidence >= self.ml_confidence_threshold:
                    # Round the predicted change to the nearest 0.5 kg
                    return current_weight + Decimal(str(round(weight_change * 2) / 2))

            # Fall back to rule-based adaptation
            action, params = self.rule_adapter.evaluate_exercise_progress(
                recent_sessions, current_weight, period_params["target_reps"]
            )
            if action in [
                AdaptationAction.INCREASE_WEIGHT,
                AdaptationAction.DECREASE_WEIGHT,
                AdaptationAction.DELOAD,
            ]:
                return params["new_weight"]
            return current_weight

        def _calculate_volume_trend(self, sessions: list[dict]) -> float:
            """Relative change in total volume from the oldest to the newest session."""
            if len(sessions) < 2:
                return 0.0
            volumes = [s["total_volume"] for s in sessions]
            return (volumes[-1] - volumes[0]) / (volumes[0] + 1e-8)

The pattern is clear: periodization provides the training framework, rules handle standard progressions, and ML adds personalization when it has high confidence. Low-confidence predictions defer to the battle-tested rule logic.
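`_calculate_volume_trend` compares only the first and last sessions, so one unusual workout at either end can swing the feature. If that proves noisy, a least-squares slope over all sessions is one alternative. This is a swapped-in technique rather than what the service above does, and `volume_trend_slope` is a hypothetical name.

```python
def volume_trend_slope(volumes: list[float]) -> float:
    """Least-squares slope of volume per session, relative to mean volume.

    Positive means volume is rising across the window; negative means falling.
    Sessions are assumed to be ordered oldest first.
    """
    n = len(volumes)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2  # mean of session indices 0..n-1
    mean_y = sum(volumes) / n
    if mean_y == 0:
        return 0.0
    covariance = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(volumes))
    variance = sum((i - mean_x) ** 2 for i in range(n))
    return (covariance / variance) / mean_y


steady_gain = volume_trend_slope([1000.0, 1100.0, 1200.0])
one_bad_day = volume_trend_slope([1000.0, 1100.0, 1200.0, 600.0])
```

For the second series, the endpoint comparison would report a 40% drop, while the slope reports a decline of about 11% of mean volume per session. Whichever definition you choose, the same one must be used when building training features and at inference time.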

Handling deload weeks

Accumulated fatigue requires periodic reduction in training stress. Rather than relying on a single threshold, deload detection combines multiple fatigue signals.

    from dataclasses import dataclass


    @dataclass
    class DeloadParameters:
        volume_reduction: float
        intensity_reduction: float
        duration_days: int


    class DeloadManager:
        # Thresholds read by needs_deload(); keys match the user_metrics fields they gate.
        FATIGUE_INDICATORS = {
            "consecutive_missed_sessions": 3,
            "rpe_increase_threshold": 1.5,
            "performance_decline_percent": 0.1,
            "reported_fatigue_threshold": 4,
            "max_weeks_between_deloads": 6,
        }

        def needs_deload(self, user_metrics: dict) -> tuple[bool, str]:
            limits = self.FATIGUE_INDICATORS
            reasons = []
            if user_metrics.get("consecutive_missed_sessions", 0) >= limits["consecutive_missed_sessions"]:
                reasons.append("consecutive_underperformance")
            if user_metrics.get("rpe_trend", 0) >= limits["rpe_increase_threshold"]:
                reasons.append("elevated_rpe")
            if user_metrics.get("performance_change", 0) <= -limits["performance_decline_percent"]:
                reasons.append("performance_decline")
            # Assumed metric key: recent fatigue_rating (1-5) from workout_sessions
            if user_metrics.get("reported_fatigue", 0) >= limits["reported_fatigue_threshold"]:
                reasons.append("reported_fatigue")
            if user_metrics.get("weeks_since_deload", 0) >= limits["max_weeks_between_deloads"]:
                reasons.append("scheduled_deload_due")

            needs_it = len(reasons) >= 2 or "scheduled_deload_due" in reasons
            return needs_it, ", ".join(reasons) if reasons else "none"

The logic requires either two concurrent fatigue signals or a scheduled deload, which comes due six weeks after the last one. This prevents false positives from one bad workout while catching genuine overreaching.
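`DeloadParameters` is defined above, but the article does not show it being applied. One plausible reading, sketched below under that assumption, is to scale the number of sets by `volume_reduction` and the load by `intensity_reduction`. The `apply_deload` helper and the example values are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class DeloadParameters:
    volume_reduction: float     # fraction of sets to remove, e.g. 0.4 = 40% fewer
    intensity_reduction: float  # fraction of load to remove
    duration_days: int


def apply_deload(
    target_sets: int,
    target_weight_kg: Decimal,
    params: DeloadParameters,
    increment: Decimal = Decimal("2.5"),
) -> dict:
    """Return reduced sets and weight for a deload session."""
    # Keep at least one set so the movement pattern is still practiced.
    sets = max(1, round(target_sets * (1 - params.volume_reduction)))
    raw_weight = target_weight_kg * (Decimal(1) - Decimal(str(params.intensity_reduction)))
    # Round down to a loadable weight so the deload never overshoots.
    weight = (raw_weight // increment) * increment
    return {"target_sets": sets, "target_weight": weight}


deload = apply_deload(
    target_sets=4,
    target_weight_kg=Decimal("100"),
    params=DeloadParameters(volume_reduction=0.4, intensity_reduction=0.1, duration_days=7),
)
```

The 10% load reduction in this example matches the `current_weight * Decimal("0.9")` deload used by the rule-based adapter, so the two paths prescribe the same weight for the same lifter.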

Key takeaways

Building adaptive fitness programs requires combining domain knowledge with software engineering patterns. Here’s what makes the system work:

- Track planned vs. actual: The delta between prescription and performance drives all adaptation decisions

- Start with rules: Rule-based systems are interpretable and handle most adaptation scenarios well

- Use periodization: Structured variation prevents plateaus and manages fatigue systematically

- Add ML carefully: Machine learning adds value only with sufficient data and should fall back to rules when uncertain

- Respect recovery: Deload detection prevents overtraining, which is the silent killer of progress

Start by implementing the rule-based adapter with your existing workout tracking. Collect data on adaptation decisions and outcomes for several months before training ML models. The best adaptive programs feel invisible to users: weights go up when they’re ready, drop when they need recovery, and the progression just works.