Building Production-Ready GPT Integrations: Error Handling, Rate Limits, and Cost Control

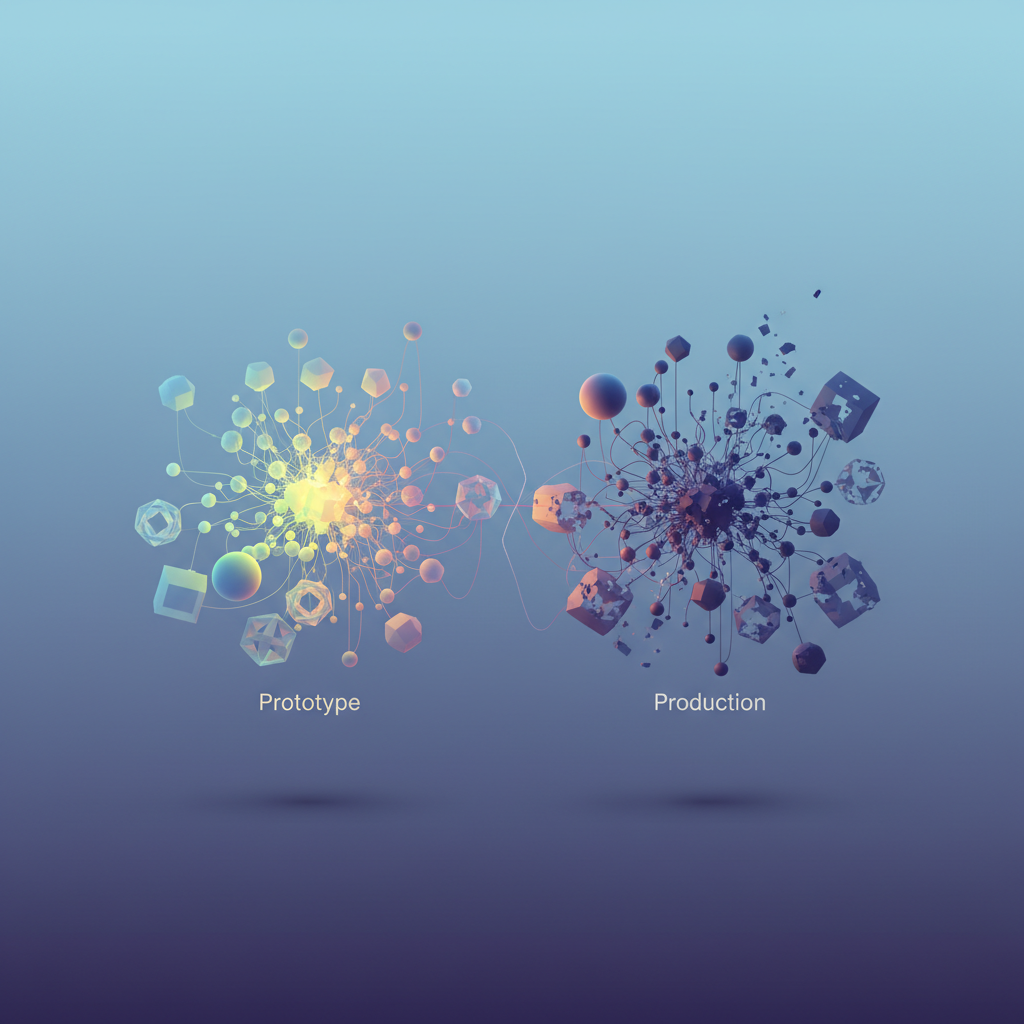

Your GPT-powered feature works perfectly in development. Then it hits production: rate limits crush your throughput, a single malformed response crashes your service, and your monthly API bill looks like a phone number. The gap between a working prototype and a production-ready GPT integration is wider than most engineers expect.

I’ve watched teams ship GPT features that sailed through staging, only to implode within hours of launch. The failure modes are predictable but rarely anticipated. A burst of traffic triggers rate limiting, which triggers retries, which amplifies the rate limiting into a cascade that takes down your entire service. A response comes back with an unexpected JSON structure—maybe the model decided to add helpful commentary outside the expected format—and your parser throws an unhandled exception. Your carefully tuned prompts that cost $0.02 per request in testing suddenly cost $0.15 when real users ask questions three times longer than your test fixtures.

Traditional backend patterns don’t map cleanly to LLM APIs. Your battle-tested exponential backoff strategy? It treats every failure as a sign the service is unhealthy. OpenAI’s rate limits are per-minute quotas, and every response tells you how much capacity remains and when it replenishes. Backing off blindly ignores that signal: you either wait far longer than necessary or hammer a quota that hasn’t refilled yet. Your circuit breaker? It trips on latency spikes that are actually normal for a 4,000-token response. Your retry logic? It’s burning money re-sending prompts that already succeeded but timed out before the response arrived.

The engineers who build reliable GPT integrations treat these APIs as fundamentally different infrastructure—expensive, unpredictable, and rate-constrained by design. They architect for graceful degradation from day one.

Let’s examine why your prototype will fail, starting with the failure modes that catch even experienced teams off guard.

The Production Gap: Why Your GPT Prototype Will Fail in Production

Your GPT integration works beautifully in development. You’ve crafted clever prompts, built a clean API wrapper, and demonstrated impressive results to stakeholders. Then you deploy to production, and everything falls apart within the first week.

This isn’t a reflection of your engineering skills—it’s the nature of LLM APIs. They behave fundamentally differently from the REST endpoints you’ve spent years mastering, and the patterns that make traditional integrations reliable actively work against you here.

Failure Modes You Haven’t Anticipated

Rate limits on GPT APIs aren’t the single, predictable requests-per-minute cap you’re used to. OpenAI implements tiered rate limiting across multiple dimensions simultaneously: requests per minute, tokens per minute, and tokens per day. A single complex prompt can consume your entire token budget while barely registering against your request quota. Your monitoring shows green across the board, but users are getting failures.

Timeouts cascade in unexpected ways. A GPT-4 request that averages 3 seconds can spike to 45 seconds during peak load or when processing longer contexts. Your 10-second timeout seemed generous until it started killing 15% of legitimate requests. But raising it to 60 seconds creates connection pool exhaustion when the API experiences degradation.

Response format unpredictability is the silent killer. You asked for JSON, but GPT decided to wrap it in markdown code blocks. Or it returned valid JSON with a slightly different schema than your last 10,000 requests. Or it truncated mid-response because it hit the token limit. Your parsing code handles none of these cases.
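Checking for these cases doesn't take much code. The sketch below is minimal. It assumes you have already pulled the message content and the choice's `finish_reason` out of the response. The Chat Completions API sets `finish_reason` to `"length"` when output is cut off at the token limit. `classify_response` and its labels are hypothetical names.

```python
import json
import re

# Matches a payload wrapped in a ```json ... ``` (or bare ```) markdown fence
FENCE = re.compile(r"^```(?:json)?\s*([\s\S]*?)\s*```$")

def classify_response(content: str, finish_reason: str) -> tuple[str, object]:
    """Return (label, payload) for a completion that was supposed to be JSON."""
    if finish_reason == "length":
        # Output hit max_tokens; parsing would fail or silently accept a fragment
        return "truncated", content
    text = content.strip()
    match = FENCE.match(text)
    if match:
        text = match.group(1)
    try:
        return ("fenced" if match else "ok"), json.loads(text)
    except json.JSONDecodeError:
        # Prose, commentary, or otherwise malformed output
        return "invalid", content
```

Only the `"ok"` and `"fenced"` labels carry parsed data. Everything else should go to a recovery path rather than straight into business logic.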

The Cost Multiplication Problem

Traditional API costs scale linearly with traffic. GPT costs scale exponentially with carelessness.

A 10x increase in traffic doesn’t mean 10x costs—it means users are making repeat requests when the first one times out, your retry logic is amplifying failed requests, cache misses are triggering expensive recomputation, and longer conversations are accumulating context tokens. Without explicit controls, that 10x traffic spike becomes a 100x billing surprise.
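To see how these effects stack, here is a back-of-the-envelope model. It is a sketch, not a billing calculator. Every input (prices, retry rate, context growth) is a placeholder assumption. It also assumes each retried call is billed in full, which is the worst case for timeouts.

```python
def monthly_cost(
    requests: int,
    prompt_tokens: int,
    completion_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    retry_rate: float = 0.0,
    context_growth: float = 1.0,
) -> float:
    """Rough monthly spend in USD. Every input is a caller-supplied assumption."""
    # context_growth scales the prompt to model accumulated conversation history
    per_attempt = (
        prompt_tokens * context_growth / 1000 * price_in_per_1k
        + completion_tokens / 1000 * price_out_per_1k
    )
    # retry_rate is extra attempts per request (0.3 = 30% more calls), assumed billed in full
    return requests * (1 + retry_rate) * per_attempt

# Placeholder numbers: 1K-token prompts, 300-token answers, $0.03 / $0.06 per 1K tokens
baseline = monthly_cost(100_000, 1_000, 300, 0.03, 0.06)
# 10x traffic, 30% of calls retried, conversations three times longer
spike = monthly_cost(1_000_000, 1_000, 300, 0.03, 0.06, retry_rate=0.3, context_growth=3.0)
```

Traffic, retries, and context length multiply rather than add. With these placeholder inputs, 10x the traffic costs about 29x the baseline.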

Why Your Retry Strategy Will Backfire

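Exponential backoff with jitter, the gold standard for API resilience, becomes a liability with LLM APIs when it runs without a budget. Each retry that reaches the model consumes tokens and money. A client-side timeout may not stop the server from generating and billing the response, so retrying a timed-out 4,000-token request five times can cost you 20,000 tokens even if you never receive an answer. Rate limit errors compound as well. Rejected requests still count against your per-minute limit, so your retries consume quota that could serve new requests, creating a cascading failure across your entire user base.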
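One way to keep retries from eating the budget is to give each logical request a fixed token allowance. Retrying stops once the next attempt would exceed it. The sketch below is minimal and assumes a caller-supplied `call` that raises a hypothetical `RetryableError` for 429s and timeouts. The names and defaults are illustrative. It counts every attempt against the allowance. That overstates the cost of a rejected 429, but it is the safe assumption for timeouts.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class RetryableError(Exception):
    """Hypothetical marker for errors worth retrying (429s, timeouts)."""

class RetryBudgetExceeded(Exception):
    """Raised when another attempt would exceed the request's token allowance."""

def call_with_retry_budget(
    call: Callable[[], T],
    estimated_tokens: int,
    token_budget: int,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    spent = 0
    for attempt in range(max_attempts):
        # Refuse any attempt that could push total billed tokens past the allowance
        if spent + estimated_tokens > token_budget:
            raise RetryBudgetExceeded(f"{spent} tokens already spent")
        spent += estimated_tokens
        try:
            return call()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise
            # Exponential backoff with full jitter between attempts
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise RetryBudgetExceeded("max_attempts must be at least 1")
```

With a 10,000-token allowance and 4,000-token prompts, at most two attempts are made however high `max_attempts` is set.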

The patterns that saved your microservices architecture will bankrupt your AI budget.

Understanding these failure modes is the first step. The next is architecting a client that anticipates them from the start.

Designing a Resilient GPT Client Architecture

A well-designed GPT client acts as a protective layer between your application and the unpredictable nature of external API calls. The architecture must handle variable latency (responses can take anywhere from 500ms to 60+ seconds for complex prompts), graceful degradation when the API is unavailable, and workload prioritization when requests pile up. Without these safeguards, a single slow API response can cascade into thread pool exhaustion, user-facing timeouts, and ultimately a degraded experience across your entire application.

Circuit Breaker Pattern for LLM Latency

Traditional circuit breakers trip after a fixed number of failures. LLM APIs require a more nuanced approach—you need to account for both errors and latency degradation that precedes outages. Unlike conventional REST endpoints that fail fast, GPT APIs often exhibit progressive slowdown before complete failure, making latency monitoring essential for early detection.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from enum import Enum

class CircuitState(Enum):
    CLOSED = "closed"
    OPEN = "open"
    HALF_OPEN = "half_open"

@dataclass
class GPTCircuitBreaker:
    failure_threshold: int = 5
    latency_threshold_ms: float = 10000
    latency_percentile_threshold: float = 0.7
    recovery_timeout: float = 30.0
    window_size: int = 20

    state: CircuitState = field(default=CircuitState.CLOSED)
    failures: int = field(default=0)
    last_failure_time: float = field(default=0.0)
    latencies: deque = field(init=False)

    def __post_init__(self):
        # Size the sliding latency window from window_size instead of a hardcoded 20
        self.latencies = deque(maxlen=self.window_size)

    def record_success(self, latency_ms: float):
        self.latencies.append(latency_ms)
        self.failures = 0  # count consecutive failures only
        if self.state == CircuitState.HALF_OPEN:
            self.state = CircuitState.CLOSED

    def record_failure(self):
        self.failures += 1
        # A failed probe in HALF_OPEN reopens the circuit immediately
        if self.state == CircuitState.HALF_OPEN or self.failures >= self.failure_threshold:
            self._trip()

    def should_allow_request(self) -> bool:
        if self.state == CircuitState.CLOSED:
            if self._is_latency_degraded():
                # Open the circuit; otherwise no requests run and the window never recovers
                self._trip()
                return False
            return True

        if self.state == CircuitState.OPEN:
            if time.time() - self.last_failure_time > self.recovery_timeout:
                self.state = CircuitState.HALF_OPEN
                return True
            return False

        return True  # HALF_OPEN: allow probe request

    def _trip(self):
        self.state = CircuitState.OPEN
        self.last_failure_time = time.time()
        self.latencies.clear()  # judge recovery on fresh samples

    def _is_latency_degraded(self) -> bool:
        if len(self.latencies) < self.window_size // 2:
            return False
        slow_requests = sum(1 for ms in self.latencies if ms > self.latency_threshold_ms)
        return slow_requests / len(self.latencies) > self.latency_percentile_threshold
```

The latency-aware circuit breaker trips when more than 70% of recent requests exceed your latency threshold. That is a leading indicator that the API is struggling before outright failures occur. This proactive approach prevents your application from queuing requests behind an already-overwhelmed API, reducing both resource consumption and user wait times during degraded conditions.

Request Queuing with Priority Lanes

Not all GPT requests carry equal business value. User-facing chat completions need immediate processing, while background summarization tasks can wait. Implementing priority lanes ensures that critical operations receive preferential treatment when system resources become constrained, rather than processing requests on a first-come, first-served basis that ignores business context.

```python
import asyncio
from dataclasses import dataclass
from enum import IntEnum
from typing import Any, Awaitable, Callable

class Priority(IntEnum):
    CRITICAL = 0  # User-blocking operations
    HIGH = 1      # Interactive features
    NORMAL = 2    # Background processing
    LOW = 3       # Batch jobs, analytics

@dataclass
class GPTRequest:
    priority: Priority
    payload: dict
    callback: Callable[[dict], Awaitable[Any]]

class PriorityGPTQueue:
    def __init__(self, max_concurrent: int = 5):
        self.queues = {p: asyncio.Queue() for p in Priority}
        self.semaphore = asyncio.Semaphore(max_concurrent)

    async def enqueue(self, request: GPTRequest):
        await self.queues[request.priority].put(request)

    async def process_next(self) -> Any:
        # Take a concurrency slot first, then pick the highest-priority waiting request.
        # get_nowait() avoids blocking forever if another worker drained the queue meanwhile.
        async with self.semaphore:
            for priority in Priority:  # definition order: CRITICAL first
                try:
                    request = self.queues[priority].get_nowait()
                except asyncio.QueueEmpty:
                    continue
                return await request.callback(request.payload)
        return None
```

💡 Pro Tip: Set max_concurrent based on your rate limit tier. For the standard 3,500 RPM limit, 10-15 concurrent requests provide headroom without risking throttling.

Consider adding request expiration to prevent stale low-priority requests from consuming resources after their results are no longer relevant. A background summarization job queued two hours ago may no longer be needed if the user has moved on.
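A minimal way to add expiration is to stamp each request when it is enqueued and discard stale ones at dequeue time. This sketch assumes a per-request time-to-live on the monotonic clock. It uses a plain `asyncio.Queue`, and `ExpiringRequest` and `next_live_request` are hypothetical names, not part of the queue class above.

```python
import asyncio
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ExpiringRequest:
    payload: dict
    ttl_seconds: float
    enqueued_at: float = field(default_factory=time.monotonic)

    def expired(self) -> bool:
        return time.monotonic() - self.enqueued_at > self.ttl_seconds

def next_live_request(queue: asyncio.Queue) -> Optional[ExpiringRequest]:
    """Pop requests without blocking, discarding any that outlived their TTL."""
    while True:
        try:
            request = queue.get_nowait()
        except asyncio.QueueEmpty:
            return None
        if not request.expired():
            return request
        # Stale: the caller has likely moved on, so skip the API call entirely
```

Checking expiry at dequeue time keeps the queue itself simple. The trade-off is that stale entries occupy memory until a worker reaches them.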

Separating Sync and Async Workloads

The architectural split between synchronous and asynchronous GPT workloads prevents slow completions from blocking your application’s critical path. This separation is fundamental to maintaining responsiveness—mixing both workload types in a single processing pipeline creates unpredictable latency characteristics and makes capacity planning nearly impossible.

For synchronous workloads (user-facing), enforce strict timeouts and have fallback responses ready. For asynchronous workloads, push requests to a job queue like Celery or AWS SQS. Process them with dedicated workers that can retry patiently, with backoff and a capped number of attempts. This isolation means a surge in batch processing jobs won’t starve interactive requests of resources.

```python
import time

# circuit_breaker, gpt_client, and job_queue are assumed to be initialized elsewhere
async def route_gpt_request(request: dict, requires_immediate: bool = False):
    if requires_immediate:
        # Sync path: strict timeout, circuit breaker protection
        if not circuit_breaker.should_allow_request():
            return {"response": "Service temporarily unavailable", "fallback": True}
        start = time.monotonic()
        try:
            result = await gpt_client.complete(request, timeout=8.0)
        except Exception:
            # Feed failures back into the breaker and serve the fallback
            circuit_breaker.record_failure()
            return {"response": "Service temporarily unavailable", "fallback": True}
        circuit_breaker.record_success((time.monotonic() - start) * 1000)
        return result

    # Async path: queue for background processing
    await job_queue.enqueue("gpt_completion", request)
    return {"status": "queued", "job_id": request["job_id"]}
```

This separation ensures your checkout flow stays responsive even when your content generation pipeline is processing a backlog. The synchronous path benefits from aggressive timeouts and immediate fallbacks. The asynchronous path can implement exponential backoff, retry logic, and dead-letter queues for failed requests without impacting user experience.
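The sync path's strict timeout and ready fallback can be expressed directly with `asyncio.wait_for`. This is a minimal sketch, and `with_fallback` is a hypothetical helper name.

```python
import asyncio
from typing import Any, Awaitable

async def with_fallback(call: Awaitable[Any], timeout: float, fallback: Any) -> Any:
    """Await call, but return fallback if it takes longer than timeout seconds."""
    try:
        return await asyncio.wait_for(call, timeout=timeout)
    except asyncio.TimeoutError:
        # wait_for cancels the awaited call on timeout before we return the fallback
        return fallback
```

Because `wait_for` cancels the slow call instead of letting it run on in the background, a burst of timeouts does not leave a pile of orphaned requests behind.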

With the client architecture in place, the next challenge is managing the rate limits that GPT APIs enforce—and doing so without dropping legitimate requests.

Implementing Intelligent Rate Limit Management

Rate limit errors in production don’t announce themselves politely. They arrive at 2 AM during your highest-traffic period, cascading through your system and degrading user experience. The solution isn’t to react to 429 errors—it’s to anticipate them before they happen.

OpenAI’s rate limits bite hardest on two axes: requests per minute (RPM) and tokens per minute (TPM). Your integration needs to track both proactively, not reactively. Understanding this dual-constraint system is essential: you might have plenty of request headroom but be burning through tokens on long completions, or vice versa. Either limit, when breached, results in the same 429 response that disrupts your users.
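Because either limit can produce the same 429, a client-side limiter has to check both before sending. This is a minimal sketch. It assumes you know your tier's RPM and TPM and can estimate each request's tokens up front. It keeps a sliding one-minute log of sends, which is one reasonable design among several. `DualLimiter` is a hypothetical name.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple

class DualLimiter:
    """Sliding 60-second window over both requests and tokens (single-threaded)."""

    def __init__(self, rpm: int, tpm: int, clock: Callable[[], float] = time.monotonic):
        self.rpm, self.tpm, self.clock = rpm, tpm, clock
        self.sent: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens)
        self.tokens_in_window = 0

    def _evict(self, now: float) -> None:
        # Drop sends older than one minute from both counters
        while self.sent and now - self.sent[0][0] >= 60.0:
            _, tokens = self.sent.popleft()
            self.tokens_in_window -= tokens

    def try_acquire(self, estimated_tokens: int) -> bool:
        now = self.clock()
        self._evict(now)
        if len(self.sent) + 1 > self.rpm:
            return False  # request axis exhausted
        if self.tokens_in_window + estimated_tokens > self.tpm:
            return False  # token axis exhausted
        self.sent.append((now, estimated_tokens))
        self.tokens_in_window += estimated_tokens
        return True
```

Rejected calls should wait locally rather than go out to the API, since rejected requests still count against your per-minute limit. Add a lock, as the tracker below does, before sharing one instance across threads.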

Reading the Signs: Response Header Tracking

Every OpenAI API response includes headers that reveal your current rate limit status. Capture these religiously:

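```python
import re
import threading
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

_DURATION_PART = re.compile(r"(\d+(?:\.\d+)?)(ms|h|m|s)")
_UNIT_SECONDS = {"ms": 0.001, "s": 1, "m": 60, "h": 3600}

def parse_reset_duration(value: str) -> timedelta:
    # Reset headers are durations such as "1s" or "6m0s", not timestamps
    seconds = sum(float(n) * _UNIT_SECONDS[unit] for n, unit in _DURATION_PART.findall(value))
    return timedelta(seconds=seconds)

@dataclass
class RateLimitState:
    requests_remaining: int
    tokens_remaining: int
    reset_time: datetime
    last_updated: datetime

class RateLimitTracker:
    def __init__(self):
        self._state: Optional[RateLimitState] = None
        self._lock = threading.Lock()

    def update_from_response(self, headers: dict) -> None:
        remaining_requests = headers.get("x-ratelimit-remaining-requests")
        remaining_tokens = headers.get("x-ratelimit-remaining-tokens")
        # Skip responses without rate limit headers instead of recording zero capacity
        if remaining_requests is None or remaining_tokens is None:
            return
        now = datetime.now()
        with self._lock:
            self._state = RateLimitState(
                requests_remaining=int(remaining_requests),
                tokens_remaining=int(remaining_tokens),
                reset_time=now + parse_reset_duration(headers.get("x-ratelimit-reset-requests", "0s")),
                last_updated=now,
            )

    def should_throttle(self, estimated_tokens: int) -> bool:
        with self._lock:
            if self._state is None:
                return False
            return (
                self._state.requests_remaining < 5
                or self._state.tokens_remaining < estimated_tokens * 2
            )
```

The should_throttle method gives you a predictive signal. When remaining capacity drops below your safety threshold, you can proactively slow down before hitting the wall. The threshold values here (5 requests and 2x estimated tokens) provide reasonable safety margins, but you should tune them to your traffic patterns. High-variance workloads benefit from larger buffers, while steady-state applications can operate closer to the edge. The reset headers arrive as durations such as 1s or 6m0s rather than timestamps, so the tracker converts them into an absolute reset time.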

Note that the tracker uses thread-safe locking to handle concurrent updates. In a high-throughput system, multiple responses arrive simultaneously, and without proper synchronization, you risk reading stale or corrupted state exactly when accurate information matters most.

Smoothing Traffic with Token Buckets

Bursty traffic patterns trigger rate limits even when your average usage is well within bounds. A token bucket algorithm smooths your request flow:

```python
import threading
import time

class TokenBucket:
    def __init__(self, tokens_per_second: float, max_tokens: int):
        self.tokens_per_second = tokens_per_second
        self.max_tokens = max_tokens
        self.tokens = float(max_tokens)  # start full so an initial burst is allowed
        self.last_refill = time.monotonic()
        self._lock = threading.Lock()

    def acquire(self, tokens: int = 1, timeout: float = 30.0) -> bool:
        deadline = time.monotonic() + timeout
        while True:
            with self._lock:
                self._refill()
                if self.tokens >= tokens:
                    self.tokens -= tokens
                    return True
                # Time until enough tokens will have accumulated
                shortfall = (tokens - self.tokens) / self.tokens_per_second
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return False
            time.sleep(max(0.01, min(shortfall, remaining)))

    def _refill(self) -> None:
        now = time.monotonic()
        elapsed = now - self.last_refill
        self.tokens = min(self.max_tokens, self.tokens + elapsed * self.tokens_per_second)
        self.last_refill = now
```

Configure your bucket based on your OpenAI tier limits. Here each bucket token represents one request, not one model token. For a Tier 1 account with 500 RPM, set tokens_per_second=8.3 (500/60) with a small burst buffer. The max_tokens parameter controls how much burst capacity you allow. Set it too high and you defeat the smoothing purpose; set it too low and legitimate traffic spikes get unnecessarily delayed.

The implementation uses time.monotonic() rather than wall-clock time deliberately. System clock adjustments (NTP corrections or a manually changed clock) can cause time.time() to jump forward or backward, which would corrupt your token accounting. A monotonic clock never goes backward, making your rate limiting predictable regardless of system clock behavior.

Multi-Tier Fallback Strategy

When rate limits loom despite your best efforts, graceful degradation beats failure. Implement a tiered fallback chain that preserves user experience even under constrained conditions:

```python
import asyncio
import hashlib
from enum import Enum

from openai import AsyncOpenAI, RateLimitError

class RateLimitExhaustedError(Exception):
    """Raised when every fallback tier is unavailable."""

class ModelTier(Enum):
    GPT4 = "gpt-4"
    GPT35 = "gpt-3.5-turbo"
    CACHED = "cached"

class FallbackGPTClient:
    def __init__(self, openai_client: AsyncOpenAI, cache, rate_tracker: RateLimitTracker):
        # cache is assumed to expose async get(key), set(key, value), and get_similar(prompt)
        self.client = openai_client
        self.cache = cache
        self.rate_tracker = rate_tracker
        self.bucket = TokenBucket(tokens_per_second=8.0, max_tokens=20)

    async def complete(self, prompt: str, prefer_quality: bool = True) -> tuple[str, ModelTier]:
        cache_key = hashlib.sha256(prompt.encode()).hexdigest()
        estimated_tokens = len(prompt.split()) * 2  # rough word-based estimate

        # Check cache first for identical prompts
        cached = await self.cache.get(cache_key)
        if cached:
            return cached, ModelTier.CACHED

        # Determine starting tier based on rate limit state
        if self.rate_tracker.should_throttle(estimated_tokens):
            tiers = [ModelTier.GPT35, ModelTier.CACHED]
        elif prefer_quality:
            tiers = [ModelTier.GPT4, ModelTier.GPT35, ModelTier.CACHED]
        else:
            tiers = [ModelTier.GPT35, ModelTier.CACHED]

        for tier in tiers:
            if tier == ModelTier.CACHED:
                # Last resort: semantic cache match, otherwise fail explicitly
                similar = await self.cache.get_similar(prompt)
                if similar:
                    return similar, ModelTier.CACHED
                break

            # TokenBucket.acquire blocks with time.sleep, so run it off the event loop
            if not await asyncio.to_thread(self.bucket.acquire, 1, 5.0):
                continue

            try:
                response = await self._call_model(tier.value, prompt)
            except RateLimitError:
                continue
            await self.cache.set(cache_key, response)
            return response, tier

        raise RateLimitExhaustedError("All tiers exhausted")

    async def _call_model(self, model: str, prompt: str) -> str:
        completion = await self.client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        return completion.choices[0].message.content or ""
```

The fallback hierarchy reflects a deliberate quality-versus-availability tradeoff. GPT-4 provides superior reasoning but consumes rate limit budget faster and costs more. GPT-3.5-turbo offers a middle ground, still generating fresh responses but with lower resource consumption. The cached tier represents your final safety net, returning previously computed responses for similar queries rather than failing entirely.

💡 Pro Tip: Track which tier actually served each request in your metrics. A sudden shift from GPT-4 to GPT-3.5 indicates you’re approaching capacity limits—useful for capacity planning conversations.

This approach ensures your application degrades gracefully under load. Users might receive slightly lower-quality responses during peak periods. They see an error only when every tier, including the cache, comes up empty. The prefer_quality parameter gives calling code control over this tradeoff. Background batch jobs might accept GPT-3.5 from the start to preserve GPT-4 capacity for interactive users.

The fallback client also highlights an important production pattern: your caching layer isn’t just for cost savings. It’s your last line of defense against complete service degradation. Invest in semantic similarity matching for your cache, so near-duplicate queries can return cached responses even when the exact prompt hasn’t been seen before.

Speaking of responses, catching rate limit errors is only half the battle. The text you receive from GPT models requires equally rigorous handling to ensure your application behaves predictably.

Bulletproof Response Parsing and Validation

GPT models are probabilistic systems. They generate text that looks correct but carries no structural guarantees. A response that worked perfectly during development can suddenly return malformed JSON, truncated content, or entirely unexpected formats under production load. Your parsing layer must treat every response as potentially hostile input—not because the model is adversarial, but because probabilistic outputs demand defensive programming.

Enforcing Structure with JSON Mode and Function Calling

OpenAI’s JSON mode and function calling provide the first line of defense against unstructured outputs. JSON mode ensures the model emits syntactically valid JSON, though output cut off at the token limit can still be incomplete. Function calling steers the response toward a predefined schema. These mechanisms shift validation left, catching many structural issues at the API level rather than in your application code.

```python
import json

from openai import OpenAI

client = OpenAI()

def extract_product_info(description: str) -> dict:
    response = client.chat.completions.create(
        model="gpt-4o",
        # JSON mode requires the word "JSON" to appear somewhere in the messages
        response_format={"type": "json_object"},
        messages=[
            {
                "role": "system",
                "content": "Extract product information as JSON with fields: name, price, category, in_stock",
            },
            {"role": "user", "content": description},
        ],
    )
    return json.loads(response.choices[0].message.content)
```

Function calling provides tighter control by defining exact parameter schemas. The model is steered toward your specified types, enumerations, and constraints. Unless you enable OpenAI’s strict schema mode, however, the returned arguments are not guaranteed to conform, so validate them like any other model output:

```python
tools = [{
    "type": "function",
    "function": {
        "name": "create_support_ticket",
        "parameters": {
            "type": "object",
            "properties": {
                "priority": {"type": "string", "enum": ["low", "medium", "high", "critical"]},
                "category": {"type": "string"},
                "summary": {"type": "string", "maxLength": 200},
            },
            "required": ["priority", "category", "summary"],
        },
    },
}]
```

💡 Pro Tip: JSON mode only guarantees valid JSON syntax, not schema compliance. The model can still return {"error": "I don't understand"} instead of your expected structure. Always combine JSON mode with explicit schema validation.

Schema Validation with Pydantic

Pydantic transforms schema validation from manual field checking into declarative type safety. Define your expected response structure once, and Pydantic handles validation, type coercion, and error reporting. It also catches problems that a plain json.loads() call lets through: empty or oversized strings rejected by length constraints, values outside an allowed enum, and numeric strings that should be coerced to the declared type instead of leaking through as text.

```python
import json
from enum import Enum
from typing import Optional

from pydantic import BaseModel, Field, ValidationError

class ResponseParsingError(Exception):
    pass

class Priority(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"

class SupportTicket(BaseModel):
    priority: Priority
    category: str = Field(min_length=1, max_length=50)
    summary: str = Field(min_length=10, max_length=200)
    customer_id: Optional[str] = None

def parse_gpt_response(raw_content: str) -> SupportTicket:
    try:
        data = json.loads(raw_content)
        return SupportTicket.model_validate(data)
    except json.JSONDecodeError as e:
        raise ResponseParsingError(f"Invalid JSON: {e}") from e
    except ValidationError as e:
        raise ResponseParsingError(f"Schema validation failed: {e.error_count()} errors") from e
```

Consider adding custom validators for domain-specific rules. A customer_id field might need to match a specific format, or a category might need to exist in your database. Pydantic’s field_validator decorator lets you encode these constraints directly in your schema.

Handling Stream Interruptions and Partial Responses

Streaming responses introduce failure modes that don’t exist with synchronous calls. Network interruptions, timeouts, and client disconnections can leave you with partial content that breaks JSON parsing entirely. Unlike a failed synchronous request that returns an error, a streaming interruption might leave you with {"priority": "high", "catego and no indication that more data was expected.

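```python
import time

class StreamTimeoutError(Exception):
    pass

class PartialResponseError(Exception):
    def __init__(self, message: str, partial_content: str, chunks_received: int):
        super().__init__(message)
        self.partial_content = partial_content
        self.chunks_received = chunks_received

class StreamAccumulator:
    def __init__(self, timeout_seconds: float = 30.0):
        self.chunks: list[str] = []
        self.timeout = timeout_seconds
        self.last_chunk_time = time.monotonic()

    def process_stream(self, stream) -> str:
        self.last_chunk_time = time.monotonic()  # measure gaps from when reading starts
        try:
            for chunk in stream:
                # Only detects a gap once the next chunk arrives; a fully stalled
                # stream also needs the HTTP client's read timeout
                if time.monotonic() - self.last_chunk_time > self.timeout:
                    raise StreamTimeoutError("No data received within timeout")

                # Some chunks (e.g. a trailing usage chunk) carry no choices
                if chunk.choices and chunk.choices[0].delta.content:
                    self.chunks.append(chunk.choices[0].delta.content)
                self.last_chunk_time = time.monotonic()

            return "".join(self.chunks)
        except Exception as e:
            raise PartialResponseError(
                f"Stream interrupted: {e}",
                partial_content="".join(self.chunks),
                chunks_received=len(self.chunks),
            ) from e
```

Track both chunk count and accumulated bytes. A stream that delivers many tiny chunks but never completes may indicate a different failure mode than one that stops after a single large chunk. This metadata helps with debugging and informs retry decisions.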
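Chunk count and byte count are cheap to record alongside the accumulated text. The final chunk's `finish_reason` tells you whether the model stopped on its own (`"stop"`) or hit the token limit (`"length"`). The sketch below works on plain `(content, finish_reason)` pairs so it runs without an API call. Mapping real stream chunks onto those pairs is left to the caller, and `StreamStats` and `accumulate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

@dataclass
class StreamStats:
    chunks: int = 0
    bytes_received: int = 0
    finish_reason: Optional[str] = None

    @property
    def completed(self) -> bool:
        # Treat anything other than a natural "stop" (a "length" cutoff, or no
        # terminal chunk at all) as incomplete output
        return self.finish_reason == "stop"

def accumulate(events: Iterable[Tuple[Optional[str], Optional[str]]]) -> Tuple[str, StreamStats]:
    """Join (content, finish_reason) pairs and record stream statistics."""
    stats = StreamStats()
    parts = []
    for content, finish_reason in events:
        stats.chunks += 1
        if content:
            parts.append(content)
            stats.bytes_received += len(content.encode("utf-8"))
        if finish_reason:
            stats.finish_reason = finish_reason
    return "".join(parts), stats
```

A stream that ends with `completed == False` is a candidate for retry even when its text happens to parse.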

Recovery Strategies for Malformed Outputs

When validation fails, you need a recovery strategy beyond simply raising an exception. Implement a fallback chain that attempts progressively more aggressive recovery techniques. Start with light-touch fixes and escalate only when necessary.

```python
import re

from pydantic import BaseModel, ValidationError

class RecoveryExhaustedError(Exception):
    def __init__(self, message: str, raw_content: str, should_retry: bool):
        super().__init__(message)
        self.raw_content = raw_content
        self.should_retry = should_retry

def parse_with_recovery(raw_content: str, schema: type[BaseModel]) -> BaseModel:
    # Attempt 1: Direct parsing (malformed JSON also surfaces as ValidationError)
    try:
        return schema.model_validate_json(raw_content)
    except ValidationError:
        pass

    # Attempt 2: Extract JSON from markdown code blocks
    json_match = re.search(r"```(?:json)?\s*([\s\S]*?)```", raw_content)
    if json_match:
        try:
            return schema.model_validate_json(json_match.group(1))
        except ValidationError:
            pass

    # Attempt 3 belongs to the caller: signal that a retry with explicit
    # formatting instructions is worth trying before failing permanently
    raise RecoveryExhaustedError(
        "All parsing strategies failed",
        raw_content=raw_content,
        should_retry=True,
    )
```

The should_retry flag signals to the calling code that a retry with a modified prompt (adding explicit JSON formatting instructions) is worth attempting before failing permanently. Log all recovery attempts with the original and recovered content. These logs reveal prompt weaknesses that you can fix proactively.
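A more aggressive step can slot in between code-block extraction and the retry: scan for the first complete JSON object embedded in surrounding prose. This sketch uses the standard library's `json.JSONDecoder.raw_decode`, which parses one JSON value starting at a given index and ignores whatever follows it. The function name is hypothetical.

```python
import json
from typing import Optional

def extract_first_json_object(text: str) -> Optional[dict]:
    """Return the first decodable JSON object embedded in text, or None."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            value, _end = decoder.raw_decode(text, start)
            return value  # decoding from "{" always yields a dict
        except json.JSONDecodeError:
            # Not a valid object at this brace; try the next one
            start = text.find("{", start + 1)
    return None
```

The extracted dict can then go through `schema.model_validate(...)` like any other candidate. Because this step discards text around the object, log when it fires.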

Solid response validation prevents the most common production failures, but even perfectly parsed responses can drain your budget. Controlling costs requires a different set of techniques—caching, prompt optimization, and hard budget limits.

Cost Control: Caching, Prompt Optimization, and Budget Guards

GPT API costs compound faster than most teams anticipate. A single complex prompt can cost $0.10 or more with GPT-4, and at scale, a poorly optimized integration burns through budgets in days. The good news: systematic cost control measures can reduce spending by 60-80% without sacrificing response quality. This section covers the four pillars of cost optimization: semantic caching, prompt compression, budget enforcement, and strategic model selection (including when to fine-tune).

Semantic Caching for Similar Requests

Traditional exact-match caching misses the biggest opportunity: semantically equivalent requests with different wording. A user asking “What’s the weather in NYC?” and another asking “Tell me New York City weather” should hit the same cache. Semantic caching uses embeddings to identify these near-duplicates, dramatically increasing cache hit rates compared to naive string matching.

```python
import hashlib

import numpy as np
from openai import OpenAI
from redis import Redis

class SemanticCache:
    def __init__(self, redis_client: Redis, similarity_threshold: float = 0.92):
        # redis_client must use decode_responses=False, since embeddings are stored as raw bytes
        self.redis = redis_client
        self.client = OpenAI()
        self.threshold = similarity_threshold
        self.embedding_model = "text-embedding-3-small"

    def _get_embedding(self, text: str) -> np.ndarray:
        response = self.client.embeddings.create(model=self.embedding_model, input=text)
        return np.array(response.data[0].embedding, dtype=np.float32)

    def _cosine_similarity(self, a: np.ndarray, b: np.ndarray) -> float:
        return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

    def get(self, prompt: str) -> str | None:
        query_embedding = self._get_embedding(prompt)

        # Linear scan of cached embeddings; fine for small caches only
        for key in self.redis.scan_iter("cache:embedding:*"):
            raw = self.redis.get(key)
            if raw is None:  # expired between SCAN and GET
                continue
            cached_embedding = np.frombuffer(raw, dtype=np.float32)
            if self._cosine_similarity(query_embedding, cached_embedding) >= self.threshold:
                response = self.redis.get(key.decode().replace("embedding", "response"))
                if response is not None:
                    return response.decode()

        return None

    def set(self, prompt: str, response: str, ttl: int = 3600) -> None:
        cache_id = hashlib.sha256(prompt.encode()).hexdigest()[:16]
        embedding = self._get_embedding(prompt)
        self.redis.setex(f"cache:embedding:{cache_id}", ttl, embedding.tobytes())
        self.redis.setex(f"cache:response:{cache_id}", ttl, response)
```

The embedding lookup adds ~$0.00002 per request, which is negligible compared to the $0.03+ a cache hit saves on a GPT-4 call. For high-traffic applications, replace the linear scan with approximate nearest neighbor search, using libraries like FAISS or Annoy to handle millions of cached embeddings efficiently.
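Whether the embedding call pays for itself depends on the hit rate. Every lookup pays for an embedding, but only hits avoid a completion. The class above also embeds the prompt again in `set()`, so each miss pays for a second embedding. A small break-even sketch follows; the costs passed in are placeholders matching the figures quoted above, not current price quotes.

```python
def net_savings_per_lookup(
    hit_rate: float,
    completion_cost: float,
    embedding_cost: float,
    reembed_on_miss: bool = False,
) -> float:
    """Expected USD saved per cache lookup (negative means the cache costs money)."""
    # Every lookup embeds the query; a cache that re-embeds in set() pays again on each miss
    embeddings = 1 + ((1 - hit_rate) if reembed_on_miss else 0)
    return hit_rate * completion_cost - embeddings * embedding_cost

def break_even_hit_rate(completion_cost: float, embedding_cost: float) -> float:
    """Hit rate at which completion savings cover one embedding per lookup."""
    return embedding_cost / completion_cost
```

With $0.03 per avoided completion and $0.00002 per embedding, the cache breaks even once about 1 lookup in 1,500 is a hit. Reusing the lookup embedding in `set()` removes the extra miss cost entirely.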

Prompt Compression Without Quality Loss

Every token costs money. Aggressive prompt compression yields significant savings on high-volume endpoints. The key is identifying verbose patterns that add no semantic value—filler phrases, redundant instructions, and excessive politeness markers that LLMs don’t require.

```python
class PromptOptimizer:
    # Common verbose patterns and their compressed equivalents (case-sensitive)
    COMPRESSION_MAP = {
        "Please provide": "Return",
        "I would like you to": "",
        "Can you please": "",
        "Make sure to": "",
        "It is important that": "",
        "In order to": "To",
    }

    def compress(self, prompt: str) -> str:
        compressed = prompt
        for verbose, concise in self.COMPRESSION_MAP.items():
            compressed = compressed.replace(verbose, concise)

        # Remove redundant whitespace left by deletions (note: this also collapses newlines)
        return " ".join(compressed.split())

    def estimate_savings(self, original: str, compressed: str) -> dict:
        original_tokens = len(original) // 4  # Rough estimate: ~4 characters per token
        compressed_tokens = len(compressed) // 4
        return {
            "original_tokens": original_tokens,
            "compressed_tokens": compressed_tokens,
            "reduction_percent": (1 - compressed_tokens / original_tokens) * 100 if original_tokens else 0.0,
        }
```

💡 Pro Tip: System prompts are sent with every request. A 500-token system prompt costs $0.015 per call with GPT-4 (at $0.03 per 1K input tokens). Compress it once, save on every request. Audit your system prompts quarterly; they tend to accumulate cruft over time.

Real-Time Budget Enforcement

Budget guards prevent runaway costs before they happen. Implement hard limits at the client level rather than relying on OpenAI’s usage limits, whose reporting lags behind actual consumption. Client-side enforcement gives you immediate control and the ability to implement more sophisticated policies like per-user quotas or time-of-day throttling.

```python
from datetime import datetime, timedelta, timezone

from redis import Redis

class BudgetGuard:
    def __init__(self, redis_client: Redis, daily_limit_usd: float = 100.0):
        self.redis = redis_client
        self.daily_limit = daily_limit_usd

    def _key(self) -> str:
        # One spend counter per UTC day
        return f"gpt:cost:{datetime.now(timezone.utc):%Y-%m-%d}"

    def record_cost(self, cost_usd: float) -> None:
        key = self._key()
        self.redis.incrbyfloat(key, cost_usd)
        self.redis.expire(key, timedelta(days=7))  # keep a week of history

    def _current_spend(self) -> float:
        return float(self.redis.get(self._key()) or 0)

    def check_budget(self) -> bool:
        return self._current_spend() < self.daily_limit

    def get_remaining_budget(self) -> float:
        return max(0.0, self.daily_limit - self._current_spend())
```

Integrate budget checks before every API call. When the budget is exhausted, degrade gracefully to cached responses or cheaper models, or queue requests for the next budget period, rather than failing outright.
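Wiring a guard in front of each call might look like the following. To keep it runnable on its own, this sketch swaps Redis for a hypothetical in-memory `InMemoryBudget` (single process, no daily rollover). `call_model`, `fallback`, and the pre-call cost estimate are placeholders for your own code.

```python
from typing import Callable

class InMemoryBudget:
    """Stand-in for BudgetGuard without Redis: single process, no daily rollover."""

    def __init__(self, daily_limit_usd: float):
        self.daily_limit = daily_limit_usd
        self.spent = 0.0

    def remaining(self) -> float:
        return max(0.0, self.daily_limit - self.spent)

    def record(self, cost_usd: float) -> None:
        self.spent += cost_usd

def guarded_call(
    budget: InMemoryBudget,
    estimated_cost_usd: float,
    call_model: Callable[[], tuple[str, float]],
    fallback: Callable[[], str],
) -> str:
    # Compare against the estimate before spending, so one call can't blow through the limit
    if budget.remaining() < estimated_cost_usd:
        return fallback()
    text, actual_cost = call_model()
    budget.record(actual_cost)  # record what was actually billed, not the estimate
    return text
```

Checking an estimate before the call and recording the billed amount afterward means a single expensive request cannot push you far past the limit, while the counter still reflects real spend.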

Fine-Tuning vs. Prompt Engineering

Fine-tuned models reduce token usage by eliminating lengthy instructions from every request. The decision matrix is straightforward:

- Use prompt engineering when: task variety is high, requirements change frequently, or volume is under 10,000 requests/day

- Use fine-tuning when: you have a stable, high-volume task with consistent output format and at least 100 quality training examples

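Fine-tuning GPT-3.5 costs ~$0.008 per 1K training tokens upfront and can reduce per-request costs by 50-70% through shorter prompts. Keep in mind that fine-tuned models are billed at a higher per-token rate than the base model, so the shorter prompt has to more than offset that premium. Calculate your break-even point: if fine-tuning eliminates 200 tokens per request and you make 50,000 requests monthly, you send 10 million fewer prompt tokens each month, and savings can exceed training costs within the first month.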
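The break-even arithmetic is worth writing down, because the tuned model's higher per-token rate eats into the savings from shorter prompts. The sketch below models prompt tokens only, since completion pricing differs too. Every price, and the size of the training set, is a placeholder assumption to replace with current figures.

```python
def fine_tune_break_even_months(
    training_tokens: int,
    training_price_per_1k: float,
    requests_per_month: int,
    base_prompt_tokens: int,
    tuned_prompt_tokens: int,
    base_price_per_1k: float,
    tuned_price_per_1k: float,
) -> float:
    """Months until the one-time training cost is recovered; inf if tuning never pays off."""
    training_cost = training_tokens / 1000 * training_price_per_1k
    # Only prompt tokens are modeled here
    base_monthly = requests_per_month * base_prompt_tokens / 1000 * base_price_per_1k
    tuned_monthly = requests_per_month * tuned_prompt_tokens / 1000 * tuned_price_per_1k
    monthly_savings = base_monthly - tuned_monthly
    if monthly_savings <= 0:
        return float("inf")
    return training_cost / monthly_savings
```

Halving a prompt from 400 to 200 tokens saves nothing if the tuned model charges twice as much per token. That is why the per-token premium belongs in the calculation alongside the training cost.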

Consider a hybrid approach for production systems: use fine-tuned models for your highest-volume, most predictable tasks while maintaining prompt-engineered solutions for edge cases and evolving requirements.

With caching, compression, and budget enforcement in place, you have cost control. But how do you know these optimizations are working? The next section covers observability—logging, metrics, and debugging strategies that give you visibility into your GPT integration’s behavior.

Observability: Logging, Metrics, and Debugging GPT Integrations

Production GPT integrations fail silently. A prompt that worked yesterday returns garbage today. Costs spike without warning. Users complain about slow responses while your dashboards show green. Without proper observability, you’re flying blind—and when things break at 2 AM, you’ll wish you’d invested in instrumentation from the start.

What to Log

Every GPT request needs structured logging that captures both operational metrics and quality signals. The goal is to answer three questions quickly: What happened? Why did it happen? How do we prevent it from happening again?

```python
import hashlib
import time
from dataclasses import dataclass, asdict
from typing import Optional

import structlog

logger = structlog.get_logger()


@dataclass
class GPTRequestMetrics:
    request_id: str
    prompt_hash: str  # first 12 hex chars of the SHA-256 of the prompt template, not the content
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    status: str  # success, rate_limited, timeout, parse_error
    cache_hit: bool
    estimated_cost_usd: float
    response_length: int
    quality_score: Optional[float] = None


def log_gpt_request(
    request_id: str,
    prompt_template: str,
    response: dict,  # the chat completion as a dict (with the v1 SDK: response.model_dump())
    start_time: float,
    cache_hit: bool = False,
    status: str = "success",
):
    usage = response.get("usage") or {}
    prompt_tokens = usage.get("prompt_tokens", 0)
    completion_tokens = usage.get("completion_tokens", 0)
    # Guard against an empty choices list or a null message content
    choices = response.get("choices") or [{}]
    content = (choices[0].get("message") or {}).get("content") or ""

    metrics = GPTRequestMetrics(
        request_id=request_id,
        prompt_hash=hashlib.sha256(prompt_template.encode()).hexdigest()[:12],
        model=response.get("model", "unknown"),
        prompt_tokens=prompt_tokens,
        completion_tokens=completion_tokens,
        latency_ms=(time.time() - start_time) * 1000,
        status=status,
        cache_hit=cache_hit,
        # calculate_cost and emit_metrics are your own helpers, defined elsewhere
        estimated_cost_usd=calculate_cost(prompt_tokens, completion_tokens),
        response_length=len(content),
    )

    logger.info("gpt_request_complete", **asdict(metrics))
    emit_metrics(metrics)
```

The prompt_hash field deserves attention. Hash the prompt template, not the interpolated content. This lets you track performance across prompt versions without logging sensitive user data. When debugging a regression, you can immediately correlate the issue with a specific prompt deployment.
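To make the template-versus-content distinction concrete, here is a small sketch of a prompt registry. The version names, templates, and the render helper are hypothetical. The point is that the hash is computed before user input is interpolated, so every request using the same template version logs the same hash, and a reverse lookup maps that hash back to a named deployment.

```python
import hashlib


def prompt_hash(template: str) -> str:
    """First 12 hex chars of the SHA-256 of the template, before any user input is filled in."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


# Hypothetical prompt versions; in practice these would live in version control
PROMPT_VERSIONS = {
    "summarize_ticket@v1": "Summarize this support ticket in two sentences:\n{ticket}",
    "summarize_ticket@v2": "Summarize this support ticket in one sentence for an on-call engineer:\n{ticket}",
}

# Reverse lookup so a hash seen in logs or dashboards maps back to a named version
HASH_TO_VERSION = {prompt_hash(t): name for name, t in PROMPT_VERSIONS.items()}


def render(version: str, **fields: str) -> tuple[str, str]:
    """Return (prompt_to_send, hash_to_log); only the hash is ever written to logs."""
    template = PROMPT_VERSIONS[version]
    return template.format(**fields), prompt_hash(template)
```

Two users sending different tickets through summarize_ticket@v1 produce different prompts but an identical hash, while switching to v2 changes the hash. That is exactly the property you want when grouping metrics by prompt version.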

Building Quality Feedback Loops

Raw metrics show you what happened. Quality signals show you if it worked. The distinction matters: a request can complete successfully with low latency and still produce unusable output. Track downstream indicators that reflect actual user value:

- Parse success rate: Did the response match your expected schema?

- User corrections: Did users edit or regenerate the output?

- Downstream failures: Did the next step in your pipeline reject this output?

- Time to first edit: How quickly did users modify generated content?

```python
def track_response_quality(request_id: str, response_content: str) -> float:
    """Cheap heuristic quality signals; each signal that fires costs 0.25 points."""
    text = response_content.strip()
    lowered = text.lower()  # compare lowercase text against lowercase markers
    signals = {
        "has_refusal": "i cannot" in lowered or "i'm sorry" in lowered,
        "suspiciously_short": len(text) < 50,
        # Heuristic only: when you have the API response, finish_reason == "length"
        # is a more reliable truncation signal
        "truncated": text.endswith("...") or not text.endswith((".", "!", "?", "}", "]")),
        "contains_ai_disclaimer": "as an ai" in lowered,
    }

    quality_score = 1.0 - (sum(signals.values()) * 0.25)

    logger.info("quality_signals", request_id=request_id, **signals, score=quality_score)
    return quality_score
```

Feed these signals back into your prompt development process. When quality scores drop for a specific prompt_hash, you have a clear signal to investigate and iterate.

Alerting Before Users Notice

Set alerts on leading indicators, not lagging ones. By the time users file complaints, you’ve already lost their trust. Focus on metrics that predict problems:

- P95 latency exceeding 3x baseline: Model degradation or prompt bloat

- Parse failure rate above 5%: Prompt drift or model behavior change

- Token usage per request increasing: Prompt template changes or input validation failures

- Quality score dropping below threshold: Time to review recent prompt changes

- Cache hit rate declining: Potential issues with your caching key strategy
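In production these rules usually live in your alerting system, such as Prometheus alert rules. The plain-Python sketch below simply shows the logic of the list above. The 3x latency factor and the 5% parse-failure limit come from the list. The token-growth factor (1.25), quality floor (0.7), and cache-drop ratio (0.5) are assumptions you would tune, and WindowStats is a hypothetical container for your windowed aggregates.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Aggregates for one alerting window (e.g. the last 15 minutes)."""
    p95_latency_ms: float
    parse_failure_rate: float      # 0.0 to 1.0
    avg_tokens_per_request: float
    avg_quality_score: float       # 0.0 to 1.0
    cache_hit_rate: float          # 0.0 to 1.0


def evaluate_alerts(current: WindowStats, baseline: WindowStats,
                    token_growth: float = 1.25, quality_floor: float = 0.7,
                    cache_drop: float = 0.5) -> list[str]:
    """Return the names of the leading-indicator alerts that should fire."""
    alerts = []
    if current.p95_latency_ms > 3 * baseline.p95_latency_ms:
        alerts.append("p95_latency_3x_baseline")
    if current.parse_failure_rate > 0.05:
        alerts.append("parse_failure_rate_above_5pct")
    if current.avg_tokens_per_request > token_growth * baseline.avg_tokens_per_request:
        alerts.append("tokens_per_request_increasing")
    if current.avg_quality_score < quality_floor:
        alerts.append("quality_below_threshold")
    if current.cache_hit_rate < cache_drop * baseline.cache_hit_rate:
        alerts.append("cache_hit_rate_declining")
    return alerts
```

Comparing against a baseline window rather than fixed absolute numbers keeps the rules meaningful as traffic patterns and response lengths change.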

💡 Pro Tip: Create a dashboard that shows quality metrics grouped by prompt_hash. When you deploy a new prompt version, you’ll immediately see if it’s performing better or worse than the previous iteration. Consider implementing automatic rollback when quality metrics degrade beyond acceptable thresholds.
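A rollback trigger can start as a simple comparison of per-prompt_hash quality scores. This is a deliberately simple sketch: it compares mean scores, and the 0.05 maximum drop and 200-sample minimum are assumed values. A careful rollout would use a proper statistical test, and both function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def scores_by_prompt(records: list[tuple[str, float]]) -> dict[str, list[float]]:
    """Group (prompt_hash, quality_score) records, e.g. pulled from your logs."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for prompt_hash, score in records:
        grouped[prompt_hash].append(score)
    return grouped


def should_roll_back(baseline_scores: list[float], candidate_scores: list[float],
                     max_drop: float = 0.05, min_samples: int = 200) -> bool:
    """True if the new prompt version scores meaningfully worse than the previous one."""
    if len(candidate_scores) < min_samples or not baseline_scores:
        return False  # not enough data on the new version to make a call yet
    return mean(baseline_scores) - mean(candidate_scores) > max_drop
```

The min_samples guard matters. Without it, a handful of unlucky early requests could roll back a prompt that is actually an improvement.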

Privacy Considerations

Never log raw prompts or responses containing user data. This isn’t just good practice—it’s often a legal requirement under GDPR, CCPA, and similar regulations. Instead:

- Log prompt template hashes and version identifiers

- Store full request/response pairs in a separate, encrypted audit log with strict retention policies

- Use sampling—log 1% of full payloads for debugging, 100% of metrics

- Implement PII detection before any logging

- Consider differential privacy techniques for aggregate analytics
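Two of these practices, redaction before logging and payload sampling, fit in a few lines. This is a minimal sketch under stated assumptions. The regexes are deliberately naive and miss many PII formats, so a real deployment would use a dedicated PII detection tool. Sampling is made deterministic by hashing the request_id, so every service makes the same keep-or-drop decision for a given request.

```python
import hashlib
import re

# Naive illustrative patterns; they will miss many real-world PII formats
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace obvious email addresses and phone numbers before anything is logged."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def should_log_full_payload(request_id: str, sample_rate: float = 0.01) -> bool:
    """Deterministic sampling: the same request_id always gets the same decision."""
    # Map the first 32 bits of the hash onto [0, 1) and compare with the sample rate
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest()[:8], 16)
    return bucket / 2**32 < sample_rate
```

Metrics are still emitted for every request. Only the (redacted) full payload goes through should_log_full_payload, which matches the "1% of payloads, 100% of metrics" split.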

Establish clear data retention policies from day one. Define how long you keep each category of data and automate the cleanup process.
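Automating cleanup starts with making the retention policy explicit in code. In the sketch below, the category names and day counts are placeholders that you would set from your own legal and compliance requirements. The purge function stands in for whatever scheduled job deletes rows in your actual store.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Placeholder retention windows; set these from your own compliance requirements
RETENTION = {
    "metrics": timedelta(days=395),
    "sampled_payloads": timedelta(days=30),
    "audit_log": timedelta(days=90),
}


def is_expired(category: str, created_at: datetime, now: Optional[datetime] = None) -> bool:
    """True if a record is past its retention window. Unknown categories raise KeyError on purpose."""
    now = now or datetime.now(timezone.utc)
    return now - created_at > RETENTION[category]


def purge(records: list[dict], now: Optional[datetime] = None) -> list[dict]:
    """Keep only records still inside their retention window (run this on a schedule)."""
    return [r for r in records if not is_expired(r["category"], r["created_at"], now)]
```

Failing loudly on an unknown category is intentional. It forces every new kind of logged data to get an explicit retention decision.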

With proper observability in place, you can debug production issues in minutes instead of days. The investment pays dividends every time you need to answer “what changed?” after a quality regression. Now let’s bring all these patterns together into a complete, production-ready integration template.

Putting It Together: A Production-Ready Integration Template

The patterns we’ve covered—resilient architecture, rate limit management, response validation, cost control, and observability—work best when integrated as a cohesive system. Here’s a complete, production-ready template that combines everything into a single, battle-tested client.

The Complete Production Client

```python
import hashlib
import os
from dataclasses import dataclass

import openai
import structlog
from openai.types.chat import ChatCompletion
from prometheus_client import Counter, Histogram
from pydantic import BaseModel, ValidationError
from tenacity import Retrying, retry_if_exception_type, stop_after_attempt, wait_exponential

logger = structlog.get_logger()

# Metrics
REQUEST_COUNT = Counter("gpt_requests_total", "Total GPT API requests", ["status", "environment"])
REQUEST_LATENCY = Histogram("gpt_request_duration_seconds", "GPT request latency")
TOKEN_USAGE = Counter("gpt_tokens_total", "Total tokens consumed", ["type"])


class BudgetExceededError(Exception):
    """Raised when today's spend has reached the configured daily budget."""


@dataclass
class GPTConfig:
    api_key: str
    model: str = "gpt-4"
    max_tokens: int = 1000
    temperature: float = 0.7
    max_retries: int = 3
    timeout: int = 30
    daily_budget_usd: float = 100.0
    cache_ttl: int = 3600
    environment: str = "production"

    @classmethod
    def from_environment(cls) -> "GPTConfig":
        env = os.getenv("ENVIRONMENT", "development")
        # Cheaper model, smaller budget, and fewer retries outside production
        configs = {
            "development": {"model": "gpt-3.5-turbo", "daily_budget_usd": 10.0, "max_retries": 1},
            "staging": {"model": "gpt-4", "daily_budget_usd": 50.0, "max_retries": 2},
            "production": {"model": "gpt-4", "daily_budget_usd": 500.0, "max_retries": 3},
        }
        return cls(
            api_key=os.environ["OPENAI_API_KEY"],
            environment=env,
            **configs.get(env, configs["development"]),
        )


class ProductionGPTClient:
    def __init__(self, config: GPTConfig, cache, budget_tracker):
        # cache needs get(key) and set(key, value, ttl=...);
        # budget_tracker needs check_budget(limit_usd) and record_usage(total_tokens, model)
        self.config = config
        # max_retries=0 turns off the SDK's built-in retries so tenacity is the only retry layer
        self.client = openai.OpenAI(api_key=config.api_key, timeout=config.timeout, max_retries=0)
        self.cache = cache
        self.budget = budget_tracker
        self.log = logger.bind(environment=config.environment, model=config.model)

    def _cache_key(self, prompt: str, response_model: type) -> str:
        # Model and schema are part of the key, so changing either never serves stale entries
        content = f"{self.config.model}:{prompt}:{response_model.__name__}"
        return hashlib.sha256(content.encode()).hexdigest()

    def _call_api(self, messages: list[dict]) -> ChatCompletion:
        # Retry only transient failures: one initial attempt plus up to config.max_retries retries
        for attempt in Retrying(
            retry=retry_if_exception_type((openai.RateLimitError, openai.APITimeoutError)),
            stop=stop_after_attempt(self.config.max_retries + 1),
            wait=wait_exponential(multiplier=1, min=2, max=60),
            reraise=True,
        ):
            with attempt:
                return self.client.chat.completions.create(
                    model=self.config.model,
                    messages=messages,
                    max_tokens=self.config.max_tokens,
                    temperature=self.config.temperature,
                )

    def complete(self, prompt: str, response_model: type[BaseModel], use_cache: bool = True) -> BaseModel:
        cache_key = self._cache_key(prompt, response_model)

        if use_cache and (cached := self.cache.get(cache_key)):
            self.log.info("cache_hit", prompt_hash=cache_key[:8])
            return response_model.model_validate_json(cached)

        # Enforce the budget before spending money, not after
        if not self.budget.check_budget(self.config.daily_budget_usd):
            raise BudgetExceededError(f"Daily budget of ${self.config.daily_budget_usd} exceeded")

        try:
            with REQUEST_LATENCY.time():
                response = self._call_api([{"role": "user", "content": prompt}])
        except openai.OpenAIError:
            REQUEST_COUNT.labels(status="api_error", environment=self.config.environment).inc()
            raise

        TOKEN_USAGE.labels(type="prompt").inc(response.usage.prompt_tokens)
        TOKEN_USAGE.labels(type="completion").inc(response.usage.completion_tokens)
        # Record spend before validating: the tokens are billed even if the output is unusable
        self.budget.record_usage(response.usage.total_tokens, self.config.model)

        content = response.choices[0].message.content or ""
        try:
            validated = response_model.model_validate_json(content)
        except ValidationError:
            REQUEST_COUNT.labels(status="parse_error", environment=self.config.environment).inc()
            self.log.warning("response_validation_failed", prompt_hash=cache_key[:8])
            raise

        REQUEST_COUNT.labels(status="success", environment=self.config.environment).inc()
        # Cache only responses that passed validation
        self.cache.set(cache_key, content, ttl=self.config.cache_ttl)
        return validated
```

Production Readiness Checklist

Before deploying, verify each item:

- Authentication: API keys stored in secrets manager, rotated quarterly

- Rate Limits: Retry logic with exponential backoff, circuit breaker configured

- Validation: All responses parsed through Pydantic models with fallback handling

- Cost Control: Budget alerts at 50%, 80%, 100% thresholds; semantic caching enabled

- Observability: Request latency, token usage, and error rates dashboarded

- Graceful Degradation: Fallback responses defined for all critical paths

💡 Pro Tip: Run this checklist as part of your CI/CD pipeline. Create a pre_deploy_gpt_check.py script that validates configuration, tests connectivity with a minimal prompt, and confirms budget tracking is active.
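Here is a minimal sketch of the configuration-validation part of such a script. It checks the two environment variables that GPTConfig.from_environment reads, ENVIRONMENT and OPENAI_API_KEY. The connectivity and budget-tracking checks are left out because they depend on your client and storage, and the function names are hypothetical.

```python
import os
import sys
from collections.abc import Mapping

KNOWN_ENVIRONMENTS = {"development", "staging", "production"}


def validate_environment(env: Mapping[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the config checks passed."""
    problems = []
    if not env.get("OPENAI_API_KEY", "").strip():
        problems.append("OPENAI_API_KEY is missing or empty")
    environment = env.get("ENVIRONMENT", "development")
    if environment not in KNOWN_ENVIRONMENTS:
        # GPTConfig.from_environment would silently fall back to development settings here
        problems.append(f"ENVIRONMENT={environment!r} is not one of {sorted(KNOWN_ENVIRONMENTS)}")
    return problems


def main() -> int:
    """CI entry point: print each problem and return a non-zero exit code on failure."""
    problems = validate_environment(os.environ)
    for problem in problems:
        print(f"FAIL: {problem}", file=sys.stderr)
    return 1 if problems else 0
```

In the actual script, finish with an `if __name__ == "__main__": sys.exit(main())` guard, and add the connectivity and budget checks to main() so that a single non-zero exit blocks the deploy.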

This template handles the complexity so your application code stays clean—just inject the client and call complete() with your prompt and expected response type. The patterns work together: caching reduces costs, retries handle transience, validation catches malformed responses, and observability surfaces issues before users notice them.
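For local development and tests, in-memory stand-ins for the two injected dependencies let you exercise the client without Redis. This is a minimal sketch that assumes only the method names the template calls: cache.get, cache.set(..., ttl=...), budget_tracker.check_budget, and budget_tracker.record_usage. The class names and the per-model price table are hypothetical, and the dict-based storage is single-process and not thread-safe.

```python
import time
from datetime import datetime, timezone
from typing import Optional


class InMemoryCache:
    """TTL cache exposing get(key) and set(key, value, ttl=...) as the client expects."""

    def __init__(self) -> None:
        self._store: dict[str, tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # evict expired entries lazily, on read
            return None
        return value

    def set(self, key: str, value: str, ttl: int) -> None:
        self._store[key] = (value, time.monotonic() + ttl)


class InMemoryBudgetTracker:
    """Tracks estimated spend per UTC day; exposes check_budget and record_usage."""

    def __init__(self, usd_per_1k_tokens: dict[str, float]) -> None:
        # Blended per-1K-token price per model name; placeholders, fill in from current pricing
        self.usd_per_1k_tokens = usd_per_1k_tokens
        self._spend: dict[str, float] = {}

    def _today(self) -> str:
        return datetime.now(timezone.utc).strftime("%Y-%m-%d")

    def check_budget(self, daily_limit_usd: float) -> bool:
        return self._spend.get(self._today(), 0.0) < daily_limit_usd

    def record_usage(self, total_tokens: int, model: str) -> None:
        cost = (total_tokens / 1000) * self.usd_per_1k_tokens[model]
        day = self._today()
        self._spend[day] = self._spend.get(day, 0.0) + cost
```

Because the template passes only total_tokens to record_usage, this tracker has to use a single blended rate per model. If you need exact costs, change the interface to pass prompt and completion tokens separately so that input and output prices can differ.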

Key Takeaways

- Implement proactive rate limit tracking by parsing OpenAI response headers and throttling requests before hitting limits, not after

- Use semantic caching with embedding similarity to serve repeated or similar requests from cache, reducing costs by 60-80%

- Always validate GPT responses against a schema before using them—treat LLM output as untrusted user input

- Build multi-tier fallbacks (GPT-4 → GPT-3.5 → cached response → graceful error) so your application degrades gracefully instead of failing completely

- Log token usage, latency, and prompt hashes for every request to enable cost attribution and quality monitoring