Building Multi-Cluster EKS Infrastructure: From Dashboard Visibility to Hybrid Node Management

Your Kubernetes footprint just grew from three clusters to fifteen across four AWS regions, and suddenly you’re drowning in context-switching between kubectl configs, missing critical alerts, and losing sleep over inconsistent deployments. The promise of container orchestration feels more like container chaos when you can’t see the forest for the trees.

This scenario plays out at nearly every organization that successfully adopts Kubernetes. You start with a single cluster, nail your deployment workflows, build confidence, and then scale horizontally—new clusters for staging, for isolated workloads, for regional failover, for that acquisition your company just closed. Each cluster made sense in isolation. Together, they’ve become an operational nightmare.

The symptoms are predictable: your on-call engineers spend the first ten minutes of every incident figuring out which cluster is actually affected. Helm chart versions drift between environments because nobody owns the synchronization. That security patch you applied to production three weeks ago? It never made it to the cluster running your internal tools. And the dashboard you built to monitor everything? It shows you twelve different Grafana instances, each telling a partial story.

Platform teams facing this reality have two choices: throw more engineers at the problem and accept the cognitive overhead as the cost of scale, or fundamentally rethink how they approach multi-cluster operations. The organizations that thrive at scale choose the second path—they invest in unified visibility, standardized tooling, and governance patterns that work across cluster boundaries rather than within them.

The breaking point usually arrives faster than anyone expects. Understanding why single-cluster thinking fails at scale is the first step toward building infrastructure that actually works when the cluster count hits double digits.

The Multi-Cluster Reality: Why Single-Cluster Thinking Breaks at Scale

The journey from a single Kubernetes cluster to a multi-cluster architecture fundamentally changes how platform teams operate. What works for three clusters becomes a liability at ten. The patterns that felt like best practices in a simple environment transform into operational debt that compounds with every new cluster you provision.

The Breaking Point

Most organizations hit their first wall around the five-cluster mark. At this point, manual kubectl context switching becomes error-prone, YAML sprawl across repositories turns into a maintenance nightmare, and the team’s mental model of “what’s running where” starts to fragment.

The symptoms are predictable:

- Configuration drift accelerates as teams make “temporary” changes directly to clusters without updating source control

- Incident response slows because engineers waste critical minutes identifying which cluster hosts the affected workload

- Cost attribution becomes guesswork when workloads span multiple clusters without consistent labeling or tagging

- Security posture degrades as policy enforcement varies between clusters managed by different team members

These issues don’t emerge gradually—they hit suddenly when a production incident exposes how fragile your operational assumptions have become.

The Visibility Gap

Single-cluster tooling creates dangerous blind spots in multi-cluster environments. Your monitoring stack might show healthy nodes in cluster A while cluster B experiences memory pressure. Your alerting catches a failing deployment in your primary region but misses the same failure pattern in secondary regions.

The visibility gap extends beyond metrics. Version skew across clusters, inconsistent add-on configurations, and divergent RBAC policies create a governance surface area that traditional dashboards simply cannot cover. Platform teams find themselves maintaining spreadsheets to track cluster configurations—a manual process that’s outdated the moment it’s completed.
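The spreadsheet described above can be approximated with a few lines of code. The following is a minimal sketch, assuming you already have a fleet inventory as plain records; the `ClusterRecord` shape and the sample cluster names are hypothetical and not part of any AWS API. It reports every cluster whose Kubernetes or add-on version differs from the most common value in the fleet.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ClusterRecord:
    """Hypothetical inventory record; fill it from whatever source you trust."""
    name: str
    kubernetes_version: str
    addons: dict = field(default_factory=dict)  # add-on name -> version string


def find_skew(clusters):
    """Return {attribute: {cluster_name: value}} for clusters that deviate
    from the most common value of that attribute across the fleet."""
    observed = {}  # attribute -> {cluster name -> value}
    for cluster in clusters:
        observed.setdefault("kubernetes", {})[cluster.name] = cluster.kubernetes_version
        for addon, version in cluster.addons.items():
            observed.setdefault(f"addon:{addon}", {})[cluster.name] = version

    skew = {}
    for attribute, by_cluster in observed.items():
        # Treat the most frequent value as the fleet baseline.
        baseline, _ = Counter(by_cluster.values()).most_common(1)[0]
        outliers = {name: v for name, v in by_cluster.items() if v != baseline}
        if outliers:
            skew[attribute] = outliers
    return skew


fleet = [
    ClusterRecord("prod-us-east-1", "1.31", {"coredns": "v1.11.3"}),
    ClusterRecord("prod-eu-west-1", "1.31", {"coredns": "v1.11.3"}),
    ClusterRecord("tools-us-east-1", "1.29", {"coredns": "v1.10.1"}),
]
print(find_skew(fleet))
```

Using the most common value as the baseline is a simplification; a real check would more likely compare against a version pinned in source control.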

💡 Pro Tip: If your team discusses cluster state in Slack more than in dashboards, you have a visibility problem that will manifest as an incident.

Governance at the Boundary

Compliance requirements don’t respect cluster boundaries. When auditors ask for evidence of consistent pod security standards or network policies across your fleet, “we check each cluster individually” isn’t an acceptable answer. The governance challenge compounds with regulatory frameworks like SOC 2, PCI-DSS, or HIPAA, where you must demonstrate uniform controls across all compute environments.

This multi-cluster reality demands purpose-built tooling. AWS recognized this operational pattern and built the EKS Dashboard specifically to address fleet-wide visibility—a capability we’ll explore in depth next.

EKS Dashboard Deep Dive: Unified Visibility Without the Overhead

When managing a fleet of EKS clusters, the cognitive load of context-switching between AWS accounts, regions, and kubectl contexts becomes a genuine operational burden. The EKS Dashboard, introduced as a native AWS capability, addresses this by providing a single pane of glass for multi-cluster observability without requiring you to deploy and maintain additional infrastructure. For platform teams already stretched thin managing Kubernetes complexity, this native integration eliminates an entire category of operational overhead while providing consistent visibility across your entire fleet.

Enabling Dashboard Access Across Your Fleet

The EKS Dashboard is available directly in the AWS Console, but unlocking its full potential requires proper IAM configuration and cluster access entries. For each cluster you want to monitor, ensure the observability add-on is enabled:

```yaml
# Illustrative manifest. The API group and field names depend on the controller
# you use to manage add-ons declaratively; verify them against its CRD.
apiVersion: eks.amazonaws.com/v1
kind: Addon
metadata:
  name: amazon-cloudwatch-observability
spec:
  clusterName: platform-prod-us-east-1
  addonName: amazon-cloudwatch-observability
  addonVersion: v1.8.0-eksbuild.1
  serviceAccountRoleArn: arn:aws:iam::123456789012:role/CloudWatchAgentRole
  configurationValues: |
    {
      "containerLogs": { "enabled": true },
      "metrics": { "enabled": true }
    }
```

For organizations using AWS Organizations, cross-account visibility requires delegated administrator setup. Register your central platform account as the delegated admin for EKS:

```yaml
# CloudFormation snippet for delegated administrator.
# Confirm this resource type is available to you before relying on it; the
# equivalent CLI call is `aws organizations register-delegated-administrator`.
AWSTemplateFormatVersion: '2010-09-09'
Resources:
  EKSDelegatedAdmin:
    Type: AWS::Organizations::DelegatedAdministrator
    Properties:
      AccountId: '123456789012'
      ServicePrincipal: eks.amazonaws.com
```

Once delegation is configured, your central platform team gains read-only visibility into clusters across all member accounts without requiring individual IAM role assumptions or VPN connectivity to each environment.

Key Metrics That Actually Matter

The dashboard surfaces dozens of metrics, but operational awareness comes from focusing on the right signals. Configure your default view to prioritize:

- Control plane health: API server latency percentiles (p99 > 1s warrants investigation)

- Node group capacity: Available vs. requested resources across instance types

- Pod scheduling failures: Immediate indicator of capacity or configuration issues

- Add-on versions: Drift detection across clusters for security compliance

- Certificate expiration: Proactive alerting before cluster authentication breaks
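To make the p99 threshold in the first bullet concrete, here is a small sketch of how such a check could be computed from raw latency samples. It uses the nearest-rank definition of a percentile, which is only one of several conventions, and the function names are illustrative rather than anything the dashboard exposes.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def needs_investigation(latencies_seconds, threshold_seconds=1.0):
    """True when API server p99 latency exceeds the threshold (1s by default)."""
    return percentile(latencies_seconds, 99) > threshold_seconds


healthy = [0.05] * 98 + [0.4, 2.5]         # one slow outlier out of 100 requests
degraded = [0.05] * 97 + [0.4, 1.2, 2.5]   # two slow requests out of 100
print(needs_investigation(healthy), needs_investigation(degraded))
```

A single slow request in a hundred does not move the p99 above the threshold, while two do, which is why percentiles are a steadier signal than maximum latency.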

The cluster comparison view becomes invaluable during incident response. Rather than SSH-ing into bastion hosts or juggling terminal windows, you can immediately identify which clusters exhibit anomalous behavior. This comparative analysis is particularly powerful when investigating issues that may be region-specific or related to recent deployments that only affected a subset of your fleet.

💡 Pro Tip: Create saved filters for your production clusters grouped by business unit or criticality tier. During incidents, one-click access to “all production clusters” eliminates precious minutes of manual filtering.

Integrating with Your Existing Observability Stack

The EKS Dashboard complements rather than replaces your existing monitoring infrastructure. For teams running Prometheus and Grafana, the dashboard handles AWS-native metrics while your stack manages application-level observability. This layered approach ensures you maintain deep application insights while gaining infrastructure-wide visibility without duplicating collection agents or storage costs.

Export dashboard metrics to your central Prometheus instance using the CloudWatch Exporter:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cloudwatch-exporter-config
  namespace: monitoring
data:
  config.yml: |
    region: us-east-1
    metrics:
      # These metric names are published by Container Insights, which uses the
      # ContainerInsights namespace rather than AWS/EKS.
      - aws_namespace: ContainerInsights
        aws_metric_name: cluster_failed_node_count
        aws_dimensions: [ClusterName]
        aws_statistics: [Maximum]
      - aws_namespace: ContainerInsights
        aws_metric_name: pod_cpu_utilization
        aws_dimensions: [ClusterName, Namespace]
        aws_statistics: [Average, Maximum]
        period_seconds: 300
```

This hybrid approach gives platform teams the best of both worlds: native AWS integration for infrastructure metrics with the flexibility of custom dashboards for application-specific KPIs. Teams can build unified Grafana dashboards that correlate EKS control plane metrics with application performance data, enabling faster root cause analysis when issues span infrastructure and application layers.

For alerting, route critical cluster-level alerts through CloudWatch Alarms while application alerts flow through Alertmanager. This separation of concerns prevents alert fatigue and ensures the right team receives the right signal. Consider establishing escalation policies where infrastructure alerts page the platform team while application alerts route to service owners.

The visibility foundation established here becomes essential as you scale your cluster fleet. With unified monitoring in place, the next challenge is ensuring consistent, repeatable infrastructure deployment—which brings us to Terraform patterns for fleet management.

Infrastructure as Code: Terraform Patterns for Fleet Management

Managing a fleet of EKS clusters without Infrastructure as Code is a recipe for configuration drift, inconsistent security postures, and operational chaos. Terraform provides the foundation for reproducible cluster provisioning, but scaling from one cluster to ten requires deliberate architectural decisions in your module structure, state management, and node group configuration. Organizations that invest in robust IaC patterns early find themselves well-positioned to handle rapid growth, while those that delay often accumulate technical debt that compounds with each additional cluster.

Module Structure for Consistent Provisioning

The key to fleet management lies in building composable, opinionated modules that encode your organization’s standards while remaining flexible enough to handle per-cluster variations. A three-tier module architecture works well for most organizations: a base module wrapping the community EKS module, environment-specific modules that set defaults, and cluster-specific configurations that handle unique requirements.

```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.0"

  cluster_name    = var.cluster_name
  cluster_version = var.kubernetes_version

  # Production clusters expose only the private endpoint
  cluster_endpoint_public_access  = var.environment != "production"
  cluster_endpoint_private_access = true

  vpc_id     = var.vpc_id
  subnet_ids = var.private_subnet_ids

  # Fleet-wide defaults enforced here
  cluster_encryption_config = {
    provider_key_arn = var.kms_key_arn
    resources        = ["secrets"]
  }

  cluster_addons = {
    coredns                = { most_recent = true }
    kube-proxy             = { most_recent = true }
    vpc-cni                = { most_recent = true }
    eks-pod-identity-agent = { most_recent = true }
  }

  tags = merge(var.common_tags, {
    Environment = var.environment
    ManagedBy   = "terraform"
    Fleet       = var.fleet_name
  })
}
```

Wrap this base module with environment-specific modules that set sensible defaults. Your production module enforces private endpoints, larger instance types, and stricter IAM policies, while development clusters remain cost-optimized and accessible. This layered approach ensures that security and compliance requirements are baked into the infrastructure rather than applied as afterthoughts.

Consider versioning your internal modules independently from your cluster configurations. When you discover a security improvement or operational enhancement, you can roll it out incrementally by updating the module version reference in each cluster’s configuration rather than modifying the module directly and affecting all consumers simultaneously.

Karpenter Integration for Dynamic Node Management

Static node groups work for predictable workloads, but modern platforms require Karpenter’s just-in-time provisioning. The integration requires careful coordination between Terraform-managed infrastructure and Karpenter’s CRDs. Terraform handles the foundational IAM roles, instance profiles, and SQS queues for interruption handling, while Karpenter manages the actual node lifecycle through Kubernetes-native resources.

```hcl
module "karpenter" {
  source  = "terraform-aws-modules/eks/aws//modules/karpenter"
  version = "~> 20.0"

  cluster_name = module.eks.cluster_name

  enable_v1_permissions = true
  enable_pod_identity   = true

  node_iam_role_additional_policies = {
    AmazonSSMManagedInstanceCore = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
  }
}

resource "helm_release" "karpenter" {
  namespace  = "kube-system"
  name       = "karpenter"
  repository = "oci://public.ecr.aws/karpenter"
  chart      = "karpenter"
  version    = "1.0.0"

  values = [
    yamlencode({
      settings = {
        clusterName       = module.eks.cluster_name
        clusterEndpoint   = module.eks.cluster_endpoint
        interruptionQueue = module.karpenter.queue_name
      }
    })
  ]

  depends_on = [module.karpenter]
}
```

💡 Pro Tip: Keep a small managed node group for system-critical workloads like Karpenter itself and cluster add-ons. Letting Karpenter manage nodes that run Karpenter creates a chicken-and-egg problem during cluster recovery.

Define your NodePool and EC2NodeClass resources outside of Terraform using GitOps tooling like ArgoCD or Flux. This separation acknowledges that node configuration changes more frequently than cluster infrastructure and benefits from the rapid iteration that GitOps provides. Terraform establishes the trust relationship and permissions; Kubernetes resources define the provisioning behavior.

State Management for Multi-Cluster Deployments

State isolation prevents blast radius issues when applying changes. Each cluster should maintain its own state file, with shared infrastructure (VPCs, IAM roles, KMS keys) managed separately. This separation ensures that a misconfigured apply against one cluster cannot corrupt the state of another, and it enables parallel operations across your fleet.

```hcl
terraform {
  backend "s3" {
    bucket         = "acme-terraform-state"
    key            = "eks/production/us-east-1/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-state-lock"
    encrypt        = true
  }
}
```

Use Terragrunt or a similar orchestration tool to manage the complexity of applying changes across multiple clusters:

```hcl
locals {
  environment = "production"
  region      = "us-east-1"
  account_id  = "123456789012"
}

terraform {
  source = "../../modules/eks-cluster"
}

inputs = {
  cluster_name       = "prod-${local.region}"
  kubernetes_version = "1.31"
  environment        = local.environment
  fleet_name         = "platform-clusters"
}
```

This pattern allows parallel applies across clusters while maintaining isolation. When upgrading Kubernetes versions fleet-wide, you can stage rollouts by modifying individual cluster configurations rather than risking atomic changes. Establish a clear promotion path (development clusters upgrade first, followed by staging, and finally production) with appropriate soak time between each tier to catch issues before they impact critical workloads.
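The promotion path can be written down as a schedule so that nobody has to remember the order. The sketch below is one possible way to plan it, not a Terragrunt feature: the environment names, the three-day soak period, and the `plan_upgrade_waves` helper are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

# Assumed tier order, lowest risk first.
PROMOTION_ORDER = ["development", "staging", "production"]


def plan_upgrade_waves(clusters, start, soak=timedelta(days=3)):
    """clusters: list of (cluster_name, environment) tuples.
    Returns a list of (start_time, environment, [cluster names]) waves,
    each wave starting one soak period after the previous one."""
    waves = []
    when = start
    for environment in PROMOTION_ORDER:
        names = sorted(name for name, env in clusters if env == environment)
        if not names:
            continue  # skip tiers that have no clusters
        waves.append((when, environment, names))
        when += soak
    return waves


clusters = [
    ("prod-us-east-1", "production"),
    ("dev-us-east-1", "development"),
    ("staging-us-east-1", "staging"),
    ("prod-eu-west-1", "production"),
]
for when, environment, names in plan_upgrade_waves(clusters, datetime(2025, 1, 6)):
    print(when.date(), environment, names)
```

Clusters within a wave can be applied in parallel because each has its own state; only the waves themselves are sequential.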

Implement state locking consistently across all backends using DynamoDB tables. In a fleet scenario where multiple engineers may be working on different clusters simultaneously, state corruption from concurrent applies becomes a real risk without proper locking mechanisms in place.
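State isolation only holds if no two clusters end up sharing a state key. As a rough sketch, the helper below rebuilds keys in the same shape as the backend example (`eks/<environment>/<region>/terraform.tfstate`) and flags collisions; the function names and the sample fleet are hypothetical.

```python
from collections import defaultdict


def state_key(environment, region, component="eks"):
    """Build a backend key shaped like eks/production/us-east-1/terraform.tfstate."""
    for part in (component, environment, region):
        if not part or "/" in part:
            raise ValueError(f"invalid path segment: {part!r}")
    return f"{component}/{environment}/{region}/terraform.tfstate"


def find_key_collisions(clusters):
    """clusters: list of (cluster_name, environment, region) tuples.
    Returns {state_key: [cluster names]} for keys claimed by more than one cluster."""
    claimed = defaultdict(list)
    for name, environment, region in clusters:
        claimed[state_key(environment, region)].append(name)
    return {key: names for key, names in claimed.items() if len(names) > 1}


fleet = [
    ("prod-us-east-1", "production", "us-east-1"),
    ("prod-eu-west-1", "production", "eu-west-1"),
    ("payments-us-east-1", "production", "us-east-1"),  # same environment and region
]
print(find_key_collisions(fleet))
```

If a collision like this is possible in your fleet, adding the cluster name as a path segment in the key is a simple way to keep one state file per cluster.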

The infrastructure patterns established here create the foundation for extending beyond AWS-managed nodes. When your workloads require on-premises compute or edge locations, EKS Hybrid Nodes allow you to maintain this same Terraform-driven consistency while incorporating non-cloud infrastructure into your clusters.

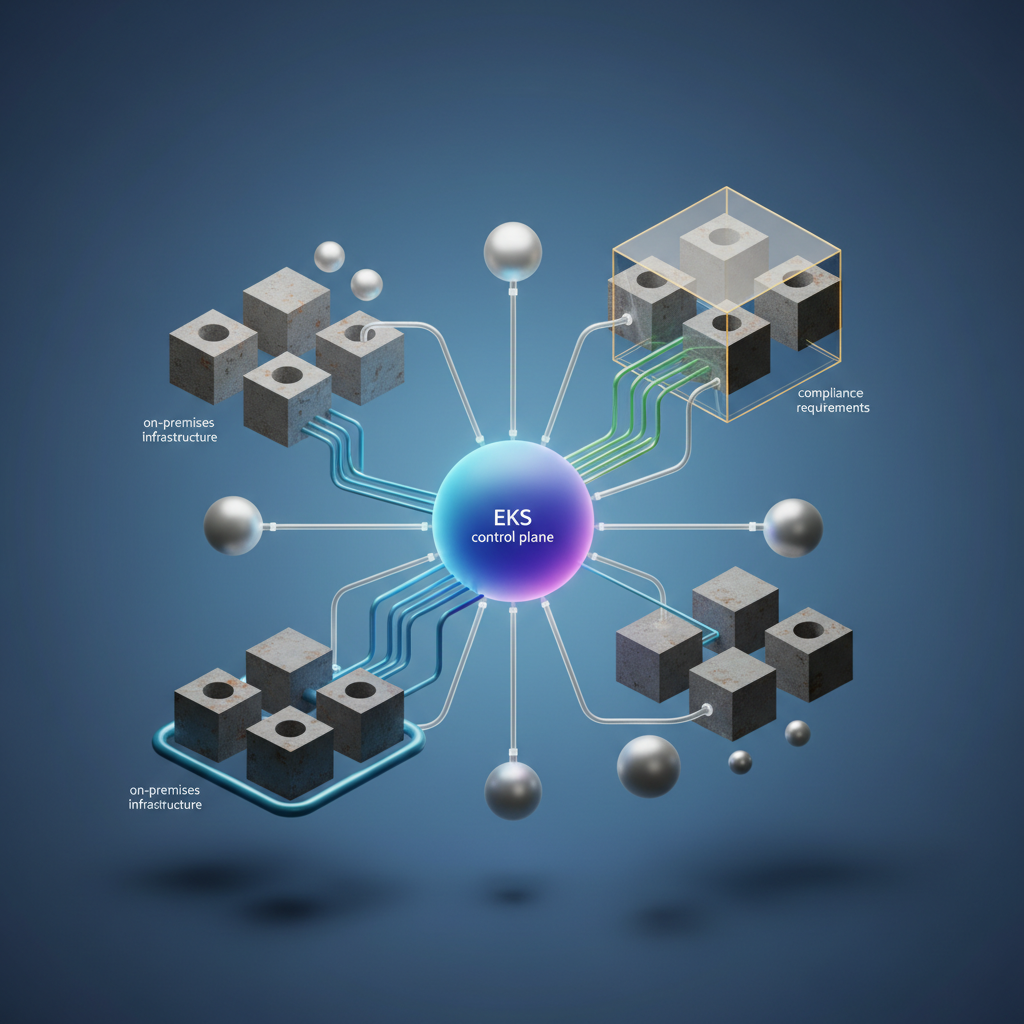

EKS Hybrid Nodes: Extending Kubernetes to On-Premises Infrastructure

Managing workloads across cloud and on-premises infrastructure introduces complexity that traditional deployment models struggle to address. EKS Hybrid Nodes solves this by extending your EKS control plane to nodes running in your own data centers, giving you a single Kubernetes API to manage workloads regardless of where they run.

When Hybrid Architecture Makes Sense

Not every organization needs hybrid infrastructure. All-cloud deployments remain simpler to operate and should be the default choice. However, specific scenarios make hybrid nodes compelling:

Data locality requirements drive many hybrid decisions. Financial services processing market data, healthcare systems handling patient records, or manufacturing facilities collecting sensor data often face regulations requiring data to remain on-premises. Running compute near the data source eliminates egress costs and latency.

Edge processing needs emerge when you need real-time decision-making closer to data sources. Video analytics, IoT aggregation, and industrial control systems benefit from local compute while still leveraging centralized management.

Migration staging provides a practical path for organizations moving to cloud incrementally. Running hybrid nodes lets teams adopt Kubernetes practices and EKS tooling before fully migrating infrastructure.

If your workloads don’t fall into these categories, stick with standard EKS managed node groups. The operational overhead of hybrid infrastructure only pays off when you have genuine constraints.

Network Requirements and Connectivity Patterns

Hybrid nodes require reliable connectivity to the EKS control plane. AWS supports two primary patterns:

AWS Site-to-Site VPN works for most deployments, providing encrypted tunnels over public internet. Expect latency between 20-100ms depending on geographic distance. This suffices for workloads tolerant of occasional network variability.

AWS Direct Connect delivers consistent, low-latency connectivity for production-critical hybrid deployments. The dedicated connection bypasses internet routing, providing predictable performance essential for latency-sensitive applications.

Your on-premises nodes need outbound access to several AWS endpoints: the EKS API server, Amazon ECR for container images, Systems Manager for node registration, and CloudWatch for logging. Configure your firewall rules accordingly.

💡 Pro Tip: Deploy a local container registry mirror for frequently-used images. This reduces cross-network traffic and improves pod startup times on hybrid nodes.

Configuring EKS Hybrid Nodes with eksctl

The eksctl CLI streamlines hybrid node configuration. Start by defining your cluster with hybrid node support enabled:

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: production-hybrid
  region: us-east-1
  version: "1.29"

vpc:
  id: vpc-0a1b2c3d4e5f67890
  subnets:
    private:
      us-east-1a:
        id: subnet-0123456789abcdef0
      us-east-1b:
        id: subnet-0fedcba9876543210

# Check these hybrid networking keys against the eksctl schema for your
# release before use; key names and nesting may differ from what is shown.
hybridNodes:
  enabled: true
  remoteNetworkConfig:
    remotePodNetworks:
      - 10.100.0.0/16
    remoteNodeNetworks:
      - 192.168.1.0/24

managedNodeGroups:
  - name: cloud-workers
    instanceType: m6i.xlarge
    desiredCapacity: 3
    privateNetworking: true
```

Create the cluster with hybrid support:

```bash
eksctl create cluster -f hybrid-cluster.yaml
```

After cluster creation, register your on-premises nodes using the SSM hybrid activation. Generate the activation credentials:

```bash
aws ssm create-activation \
  --iam-role EKSHybridNodeRole \
  --registration-limit 50 \
  --default-instance-name "hybrid-node" \
  --region us-east-1
```

Install the SSM agent and EKS node components on your on-premises machines, then register them using the activation code and ID returned from the previous command.

Once registered, hybrid nodes appear alongside your cloud nodes in kubectl output, scheduled by the same control plane and managed through identical workflows.
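Overlapping address ranges are a common cause of routing trouble in hybrid setups, so it can be worth checking the remote pod and node networks against your cloud-side ranges before creating the cluster. The sketch below uses Python's standard `ipaddress` module. The VPC and service CIDRs are placeholders assumed for illustration, and the exact non-overlap rules should be confirmed in the current AWS documentation.

```python
import ipaddress


def find_overlaps(named_cidrs):
    """named_cidrs: dict mapping a label to a CIDR string.
    Returns a list of (label_a, label_b) pairs whose networks overlap."""
    networks = [(label, ipaddress.ip_network(cidr)) for label, cidr in named_cidrs.items()]
    overlaps = []
    for index, (label_a, net_a) in enumerate(networks):
        for label_b, net_b in networks[index + 1:]:
            if net_a.overlaps(net_b):
                overlaps.append((label_a, label_b))
    return overlaps


planned = {
    "vpc": "10.0.0.0/16",               # assumed VPC CIDR
    "service": "172.20.0.0/16",         # assumed Kubernetes service CIDR
    "remote-pods": "10.100.0.0/16",     # from the cluster config
    "remote-nodes": "192.168.1.0/24",   # from the cluster config
}
print(find_overlaps(planned))

# A second site whose node range sits inside the remote pod range is flagged.
conflicting = dict(planned, **{"remote-nodes-site-b": "10.100.1.0/24"})
print(find_overlaps(conflicting))
```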

With hybrid nodes extending your cluster’s reach, the next challenge becomes ensuring consistent capabilities across all nodes—starting with the essential add-ons that enable TLS certificate management, DNS automation, and secure AWS service access.

Essential Add-ons: cert-manager, external-dns, and Pod Identity

Every production EKS cluster requires a baseline set of capabilities: automated TLS certificate management, dynamic DNS record creation, and secure workload authentication to AWS services. These three add-ons—cert-manager, external-dns, and EKS Pod Identity—form the foundation that enables everything else in your platform. Without them, you’ll spend significant operational time on tasks that should be automated.

Automated Certificate Management with cert-manager

cert-manager eliminates the operational burden of managing TLS certificates across your clusters. It handles issuance, renewal, and rotation automatically, integrating with Let’s Encrypt, AWS Private CA, or your internal PKI. The controller watches for Certificate resources and Ingress annotations, then orchestrates the entire ACME challenge workflow without manual intervention.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-prod-account-key
    solvers:
      - selector:
          dnsZones:
            - "timderzhavets.com"
        dns01:
          route53:
            region: us-east-1
            hostedZoneID: Z0123456789ABCDEFGHIJ
```

For multi-cluster deployments, use DNS-01 challenges rather than HTTP-01. This approach works regardless of ingress configuration and handles wildcard certificates cleanly. DNS-01 also eliminates the need for publicly accessible endpoints during certificate issuance, which simplifies network security policies.

DNS Record Automation with external-dns

external-dns watches your Kubernetes resources and automatically creates DNS records in Route 53. When you deploy a Service with a hostname annotation or an Ingress with a host rule, external-dns handles the corresponding DNS entry. This eliminates the manual step of updating DNS records during deployments and ensures records stay synchronized with your actual infrastructure.

```yaml
provider: aws
aws:
  region: us-east-1
  zoneType: public
domainFilters:
  - timderzhavets.com
policy: sync
txtOwnerId: eks-prod-cluster
sources:
  - service
  - ingress
  - istio-gateway
```

The txtOwnerId parameter prevents conflicts when multiple clusters manage records in the same hosted zone. Each cluster uses its own identifier, and external-dns creates TXT records to track ownership. This ownership model ensures that one cluster never accidentally modifies or deletes records belonging to another.

💡 Pro Tip: Set `policy: sync` rather than `upsert-only` to ensure external-dns removes stale records when you delete services. This prevents DNS record accumulation over time and keeps your hosted zones clean.
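The interaction between TXT ownership and the sync policy can be modelled in a few lines. This is a deliberately simplified sketch of the idea, not external-dns's actual implementation: it assumes ownership TXT values of the form `heritage=external-dns,external-dns/owner=<id>`, ignores options such as TXT prefixes, and uses hypothetical hostnames.

```python
def parse_owner(txt_value):
    """Extract the owner ID from an external-dns style TXT value, or None
    when the value is not an external-dns ownership record."""
    labels = dict(
        part.split("=", 1)
        for part in txt_value.strip('"').split(",")
        if "=" in part
    )
    if labels.get("heritage") != "external-dns":
        return None
    return labels.get("external-dns/owner")


def deletable_records(desired, existing_txt, owner_id):
    """Names this cluster may remove under a sync policy: records it owns
    that no longer correspond to a desired hostname."""
    return sorted(
        name
        for name, txt in existing_txt.items()
        if parse_owner(txt) == owner_id and name not in desired
    )


existing = {
    "api.example.com": '"heritage=external-dns,external-dns/owner=eks-prod-cluster"',
    "old.example.com": '"heritage=external-dns,external-dns/owner=eks-prod-cluster"',
    "tools.example.com": '"heritage=external-dns,external-dns/owner=eks-tools-cluster"',
    "mail.example.com": '"v=spf1 -all"',  # unrelated TXT record, never touched
}
print(deletable_records({"api.example.com"}, existing, "eks-prod-cluster"))
```

Only the stale record owned by this cluster is eligible for deletion; records owned by another cluster or created by hand are left alone.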

Secure Workload Authentication with EKS Pod Identity

EKS Pod Identity is a newer alternative to IRSA (IAM Roles for Service Accounts) with a simpler setup. Pods receive temporary AWS credentials without a per-cluster OIDC provider or cluster-specific trust policies. The EKS Pod Identity Agent runs as a DaemonSet on each node and serves credential requests from the AWS SDK, returning short-lived credentials scoped to the pod's service account.

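```yaml
# Illustrative manifest. The API group and field names depend on the controller
# that reconciles the association; verify them against its CRD.
apiVersion: eks.amazonaws.com/v1alpha1
kind: PodIdentityAssociation
metadata:
  name: external-dns
  namespace: kube-system
spec:
  serviceAccountName: external-dns
  roleArn: arn:aws:iam::123456789012:role/external-dns-prod
```

The corresponding Terraform configuration creates the IAM role with the correct trust policy: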

```hcl
module "external_dns_pod_identity" {
  source  = "terraform-aws-modules/eks-pod-identity/aws"
  version = "~> 1.4"

  name = "external-dns-${var.cluster_name}"

  attach_external_dns_policy    = true
  external_dns_hosted_zone_arns = [data.aws_route53_zone.primary.arn]

  association_defaults = {
    namespace       = "kube-system"
    service_account = "external-dns"
  }

  associations = {
    prod = {
      cluster_name = module.eks.cluster_name
    }
  }
}
```

Pod Identity offers several advantages over IRSA: credentials refresh automatically without pod restarts, you can audit credential usage through CloudTrail with pod-level granularity, and the setup requires less boilerplate configuration. The trust relationship is managed by EKS itself rather than through manually configured OIDC providers.

Deployment Order Matters

These add-ons have dependencies that dictate installation sequence. Deploy them in order:

- EKS Pod Identity agent (enables IAM authentication for pods)

- cert-manager (requires Pod Identity for Route 53 access during DNS-01 challenges)

- external-dns (requires Pod Identity for Route 53 record management)

Attempting to deploy cert-manager or external-dns before the Pod Identity agent results in authentication failures that can be difficult to diagnose. Your infrastructure-as-code should encode these dependencies explicitly.
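One way to encode these dependencies explicitly is as a small graph that tooling can sort. The sketch below uses Python's standard `graphlib` module (3.9 or later). The `DEPENDS_ON` mapping mirrors the list above, while the `install_order` helper is only an illustration of the idea, not part of any EKS tooling.

```python
from graphlib import TopologicalSorter

# Each add-on maps to the set of add-ons that must be installed before it.
DEPENDS_ON = {
    "eks-pod-identity-agent": set(),
    "cert-manager": {"eks-pod-identity-agent"},
    "external-dns": {"eks-pod-identity-agent"},
}


def install_order(depends_on):
    """Return an installation order in which every add-on appears after all
    of its dependencies. Raises graphlib.CycleError on circular dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


order = install_order(DEPENDS_ON)
print(order)
```

cert-manager and external-dns do not depend on each other, so their relative order is left open; only the Pod Identity agent is guaranteed to come first.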

With these foundational components in place, your clusters can securely authenticate to AWS services, automatically provision TLS certificates, and dynamically manage DNS records. This automation becomes essential when managing cluster fleets through GitOps workflows, where manual certificate rotation or DNS updates would create unacceptable operational overhead.

GitOps at Scale: ArgoCD for Multi-Cluster Deployments

Managing deployments across a fleet of EKS clusters demands more than kubectl scripts and CI pipelines. ArgoCD provides the declarative foundation for multi-cluster GitOps, enabling platform teams to deploy consistently while maintaining the flexibility to handle environment-specific configurations. As your cluster count grows from a handful to dozens or hundreds, manual deployment approaches become untenable—ArgoCD’s automation capabilities become essential infrastructure.

ApplicationSets: Fleet-Wide Deployment Automation

ApplicationSets eliminate the toil of creating individual ArgoCD Applications for each cluster. By defining a single template, you deploy workloads across your entire fleet automatically. This approach transforms cluster provisioning from a deployment bottleneck into a seamless operation where new clusters inherit their workloads immediately upon registration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: platform-services
  namespace: argocd
spec:
  generators:
    - clusters:
        selector:
          matchLabels:
            environment: production
        values:
          revision: main
    - clusters:
        selector:
          matchLabels:
            environment: staging
        values:
          revision: develop
  template:
    metadata:
      name: '{{name}}-platform-services'
    spec:
      project: platform
      source:
        repoURL: https://github.com/acme-corp/platform-services.git
        targetRevision: '{{values.revision}}'
        path: 'clusters/{{metadata.labels.region}}/platform'
        helm:
          valueFiles:
            - values.yaml
            - 'values-{{metadata.labels.environment}}.yaml'
      destination:
        server: '{{server}}'
        namespace: platform
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true
          - ServerSideApply=true
```

The cluster generator dynamically discovers EKS clusters registered with ArgoCD, applying different Git branches based on environment labels. When you add a new cluster with matching labels, ArgoCD automatically creates and syncs the corresponding Application. This dynamic discovery pattern means your deployment manifests never need updating when clusters join or leave the fleet.

💡 Pro Tip: Use the `matrix` generator to combine cluster selection with list generators, enabling deployments of multiple applications across multiple clusters from a single ApplicationSet definition. This multiplicative approach scales well: ten applications across twenty clusters require just one ApplicationSet, not two hundred hand-written Application resources.
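The arithmetic behind that tip is a plain Cartesian product. The following sketch only illustrates the cardinality; it does not reproduce how ArgoCD merges generator parameters, and the cluster and application names are placeholders.

```python
from itertools import product


def expand_matrix(clusters, apps):
    """Pair every cluster with every application, the way a matrix of a
    cluster generator and a list generator yields one Application per pair."""
    return [f"{cluster}-{app}" for cluster, app in product(clusters, apps)]


clusters = [f"cluster-{i:02d}" for i in range(1, 21)]  # twenty clusters
apps = [f"app-{i:02d}" for i in range(1, 11)]          # ten applications
names = expand_matrix(clusters, apps)
print(len(names), names[0], names[-1])
```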

Progressive Rollouts Across Cluster Tiers

Production deployments require careful orchestration. ArgoCD’s sync waves combined with ApplicationSet strategies enable progressive rollouts that respect your cluster hierarchy. This tiered approach catches issues in lower environments before they impact production traffic, giving your team time to detect problems and halt rollouts when necessary.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: api-gateway-rollout
  namespace: argocd
spec:
  generators:
    - list:
        elements:
          - cluster: eks-dev-us-east-1
            tier: "1"
            server: https://A1B2C3D4E5.gr7.us-east-1.eks.amazonaws.com
          - cluster: eks-staging-us-east-1
            tier: "2"
            server: https://F6G7H8I9J0.gr7.us-east-1.eks.amazonaws.com
          - cluster: eks-prod-us-east-1
            tier: "3"
            server: https://K1L2M3N4O5.gr7.us-east-1.eks.amazonaws.com
  strategy:
    type: RollingSync
    rollingSync:
      steps:
        - matchExpressions:
            - key: tier
              operator: In
              values: ["1"]
        - matchExpressions:
            - key: tier
              operator: In
              values: ["2"]
          maxUpdate: 1
        - matchExpressions:
            - key: tier
              operator: In
              values: ["3"]
          maxUpdate: 25%
  template:
    metadata:
      name: 'api-gateway-{{cluster}}'
      labels:
        tier: '{{tier}}'
    spec:
      project: applications
      source:
        repoURL: https://github.com/acme-corp/api-gateway.git
        targetRevision: v2.4.0
        path: deploy/kubernetes
      destination:
        server: '{{server}}'
        namespace: api-gateway
```

This configuration deploys to development first, waits for successful sync, then proceeds to staging one cluster at a time, and finally rolls out to production clusters at 25% increments. The explicit tier labeling creates a deployment graph that operators can reason about during incident response.

Drift Detection and Automated Remediation

Cluster drift—when live state deviates from Git—creates operational risk. Whether caused by emergency hotfixes, misconfigured RBAC, or well-intentioned manual interventions, drift erodes the reliability guarantees that GitOps promises. ArgoCD’s self-healing capabilities automatically reconcile unauthorized changes, ensuring your declared state remains the source of truth.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-cm
  namespace: argocd
data:
  resource.customizations.health.argoproj.io_Application: |
    hs = {}
    hs.status = "Healthy"
    if obj.status ~= nil then
      if obj.status.health ~= nil then
        hs.status = obj.status.health.status
      end
    end
    return hs
  resource.compareoptions: |
    ignoreAggregatedRoles: true
    ignoreResourceStatusField: crd
```

Enable metrics export to surface drift events in your monitoring stack. Configure alerts when `argocd_app_info{sync_status="OutOfSync"}` persists beyond your tolerance window. Setting a five-minute threshold balances responsiveness against transient sync delays during normal operations. This visibility, combined with automated remediation, ensures your clusters remain consistent with your declared state while providing audit trails for compliance requirements.
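In Prometheus you would normally express the tolerance window with the `for:` clause of an alerting rule. The sketch below only illustrates the underlying logic, assuming a time-ordered list of `(unix_timestamp, sync_status)` samples for one application; the function names are hypothetical.

```python
def out_of_sync_duration(samples):
    """samples: list of (unix_timestamp, sync_status) tuples in time order.
    Returns how many seconds the application has been continuously
    OutOfSync as of the last sample, or 0 if it is currently in sync."""
    since = None
    for timestamp, status in samples:
        if status == "OutOfSync":
            if since is None:
                since = timestamp  # start of the current OutOfSync streak
        else:
            since = None  # any other status resets the streak
    if since is None:
        return 0
    return samples[-1][0] - since


def should_alert(samples, tolerance_seconds=300):
    """Alert only when drift has persisted for the whole tolerance window."""
    return out_of_sync_duration(samples) >= tolerance_seconds


persistent = [(0, "Synced"), (60, "OutOfSync"), (120, "OutOfSync"), (420, "OutOfSync")]
transient = [(0, "OutOfSync"), (60, "Synced"), (120, "OutOfSync"), (180, "OutOfSync")]
print(should_alert(persistent), should_alert(transient))
```

Resetting the streak on every in-sync sample is what keeps short sync delays during normal deployments from paging anyone.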

With GitOps providing deployment consistency across your cluster fleet, the final piece is establishing operational runbooks that codify day-2 practices for your platform team.

Operational Runbook: Day-2 Patterns for Platform Teams

Deploying multi-cluster infrastructure is the beginning, not the end. The real work starts when you need to upgrade Kubernetes versions across a fleet, optimize costs without disrupting workloads, and respond to incidents spanning multiple clusters simultaneously.

Cluster Upgrade Strategies

Zero-downtime upgrades require treating clusters as cattle, not pets. The blue-green cluster pattern provisions a new cluster at the target Kubernetes version, migrates workloads progressively, and decommissions the old cluster only after validation completes. This approach eliminates the anxiety of in-place upgrades and provides instant rollback capability.

For organizations that cannot afford parallel infrastructure costs, the rolling node group strategy works well. Upgrade the control plane first, then create new node groups at the target version while draining old ones. Karpenter simplifies this process by automatically provisioning nodes with the correct AMI when you update the EC2NodeClass.

Schedule upgrades during low-traffic windows, but automate the execution. Manual upgrades at 2 AM lead to mistakes. Codify your upgrade runbook in your CI/CD pipeline and let automation handle the timing.

Cost Optimization Through Consolidation

Karpenter’s consolidation feature identifies underutilized nodes and bin-packs workloads onto fewer instances. Enable consolidation policies that respect pod disruption budgets and avoid consolidating during business hours when traffic patterns are unpredictable.

Review Spot instance utilization monthly. Karpenter’s weight-based provisioning lets you prefer Spot for fault-tolerant workloads while reserving On-Demand capacity for stateful services. The EKS Dashboard surfaces cost metrics across clusters, making it straightforward to identify optimization opportunities.

Multi-Cluster Incident Response

When incidents span clusters, the EKS Dashboard becomes your first stop for triage. Quickly identify which clusters show degraded health, then drill into specific workloads. Establish runbooks that map symptoms to clusters—a latency spike in your edge region might indicate Hybrid Node connectivity issues rather than application problems.

💡 Pro Tip: Create dedicated Slack channels per cluster with automated alerts. When an incident escalates, you know exactly where to coordinate response efforts.

Document every incident in a shared repository. Pattern recognition across clusters reveals systemic issues that single-cluster operators miss entirely.

Key Takeaways

- Enable the EKS Dashboard and configure delegated administration to gain visibility across all clusters in your fleet

- Implement the modular Terraform structure to ensure consistent cluster provisioning and eliminate configuration drift

- Use EKS Pod Identity instead of IRSA for new workloads to simplify IAM management and improve security posture

- Adopt ArgoCD ApplicationSets to deploy changes across your entire cluster fleet from a single Git commit