Building a Zero-Trust GitLab CI/CD Pipeline for Kubernetes Using the Agent-Based Workflow

Your Kubernetes cluster credentials are sitting in GitLab CI/CD variables right now, accessible to anyone with maintainer access to your repository. That service account token you created “temporarily” six months ago? It has cluster-admin privileges and never rotates. Every shared runner that executes your deployment jobs downloads those credentials, expands your attack surface, and leaves traces in job logs that persist for weeks.

This is the uncomfortable reality of traditional GitLab-Kubernetes integration. You base64-encode a kubeconfig, paste it into a CI/CD variable, mark it as “protected” and “masked,” and convince yourself the problem is solved. But protection only restricts which branches can access the variable—it does nothing against a compromised runner, a malicious merge request from a fork, or an insider with legitimate maintainer access who decides to echo $KUBECONFIG | base64 -d in a job script.

The certificate-based authentication approach that many teams adopt as a “security improvement” changes nothing fundamental. You’ve swapped a token for a certificate and private key. The credentials still flow outward from your cluster to GitLab’s infrastructure. They still sit in variables. They still traverse runner environments you don’t control.

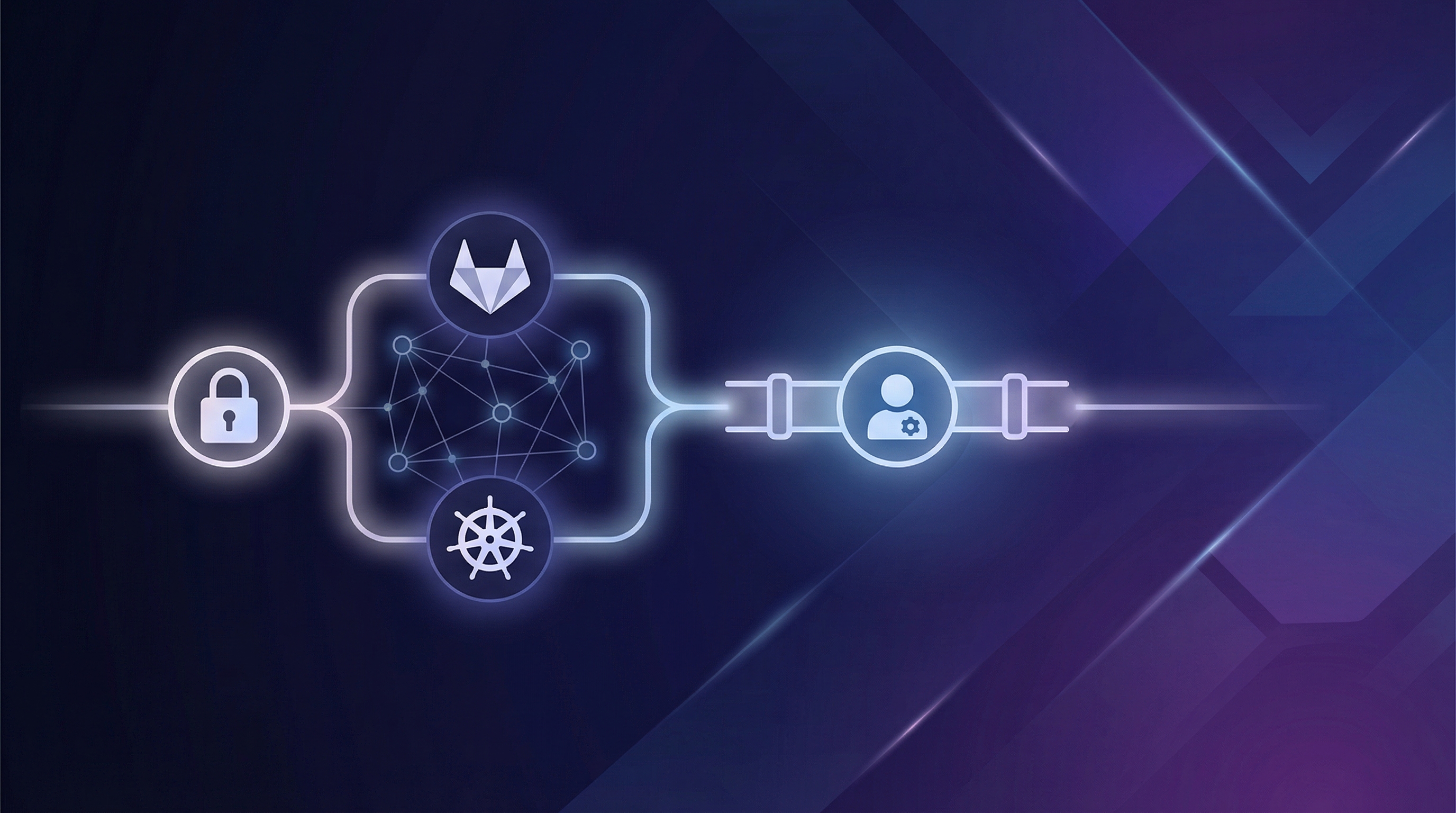

The core architectural flaw isn’t which credentials you’re exposing—it’s that you’re exposing credentials at all. Push-based deployment models require your CI/CD system to reach into your cluster, which means your cluster must be reachable and your pipeline must hold the keys. Pull-based models invert this relationship entirely: an agent inside your cluster reaches out to GitLab, asks “what should I deploy?”, and acts on the response. Your cluster credentials never leave your network perimeter.

The GitLab Agent for Kubernetes implements exactly this pattern, and understanding why it matters starts with examining how traditional integration fails.

The Credential Exposure Problem in Traditional GitLab-Kubernetes Integration

Every Kubernetes deployment from a CI/CD pipeline requires authentication. For years, the standard approach involved storing a kubeconfig file or service account token directly in GitLab CI/CD variables. This pattern works—until it doesn’t. The moment those credentials leak, an attacker gains direct access to your cluster with whatever permissions that service account holds.

The Anatomy of Credential Exposure

Traditional GitLab-Kubernetes integration follows a push-based model: the CI/CD runner authenticates to the cluster and pushes changes. This requires the runner to possess valid credentials, typically stored as:

- A KUBECONFIG variable containing the full cluster configuration

- A KUBE_TOKEN paired with KUBE_CA_CERTIFICATE and KUBE_SERVER

- A service account token with cluster-admin or namespace-admin privileges

Each of these creates a static credential that exists outside your cluster’s security boundary. The credentials sit in GitLab’s database, get injected into runner environments, and persist in job logs if you’re not careful with masking.

Attack Vectors You’re Already Exposed To

Compromised runners represent the most direct threat. Shared runners process jobs from multiple projects. A malicious .gitlab-ci.yml in any project using that runner can exfiltrate environment variables, including your Kubernetes credentials. Even with protected variables, a compromised runner host exposes everything in memory during job execution.

Variable leakage happens more often than teams admit. Developers copy pipelines between projects, accidentally expose variables in debug output, or store credentials in repository files that end up in git history. Once a credential escapes, you face the painful process of rotation—assuming you detect the leak at all.

Over-privileged service accounts compound these risks. Teams frequently create a single service account with broad permissions rather than scoping credentials per application or environment. When that one credential leaks, the blast radius encompasses your entire cluster.

Why Certificate-Based Auth Falls Short

Switching from token-based to certificate-based authentication feels like progress. Certificates expire, support rotation, and integrate with Kubernetes RBAC. But certificates stored in CI/CD variables face the same fundamental problem: they exist as extractable secrets outside your cluster. A compromised runner can exfiltrate a client certificate just as easily as a bearer token.

The real issue isn’t the authentication mechanism—it’s the direction of trust. Push-based deployments require your CI/CD system to hold credentials that grant cluster access. Any compromise of that CI/CD system immediately becomes a cluster compromise.

The Case for Inverting the Model

Pull-based deployment inverts this trust relationship. Instead of CI/CD pushing to the cluster, an agent inside the cluster pulls configuration from a trusted source. The cluster initiates connections outbound, never exposing an inbound authentication endpoint. Credentials never leave the cluster boundary.

This architectural shift eliminates entire categories of vulnerabilities. The GitLab Agent for Kubernetes implements exactly this pattern, fundamentally changing how pipelines interact with your infrastructure.

How the GitLab Agent for Kubernetes Changes the Security Model

The GitLab Agent for Kubernetes fundamentally inverts the traditional connection model. Instead of CI/CD pipelines reaching into your cluster, the agent reaches out from your cluster to GitLab. This architectural shift eliminates the need to expose cluster credentials in CI/CD variables entirely.

The Agent Architecture

The agent runs as a deployment inside your Kubernetes cluster, maintaining a persistent gRPC connection to GitLab’s infrastructure. This outbound-only connection model means your cluster requires no inbound firewall rules, no public endpoints, and no VPN tunnels for deployments to work.

When a CI/CD pipeline needs to interact with Kubernetes, it communicates with GitLab’s servers, which relay commands through the existing agent connection. The cluster credentials never leave the cluster—they exist only in the agent’s service account permissions.

This architecture works seamlessly behind corporate firewalls, NAT gateways, and private networks. As long as the agent can establish outbound HTTPS connections to GitLab (or your self-managed GitLab instance), deployments function normally.

Scoped Authentication

The agent uses token-based authentication tied to specific projects and groups in your GitLab hierarchy. You define which repositories can access the agent through an explicit allowlist in the agent’s configuration. A project not on that list simply cannot deploy to the cluster, regardless of what credentials a developer might have.

This scoping works at multiple levels:

- Project-level access: Only specified projects can use the agent

- Group-level access: Grant access to all projects within a GitLab group

- Environment-based restrictions: Limit agent access to specific CI/CD environments

The agent configuration lives as code in your repository, making access changes auditable and subject to merge request reviews.

Comparison with GitOps Tools

If you’re familiar with Flux or ArgoCD, you’ll recognize similarities in the connection model. All three use an in-cluster component that pulls configuration rather than accepting pushed deployments. The GitLab Agent differs in its native integration with GitLab CI/CD—you can mix imperative pipeline commands with declarative GitOps manifests in the same workflow.

ArgoCD and Flux remain excellent choices for pure GitOps workflows. The GitLab Agent shines when you need the flexibility of CI/CD pipelines (running tests, building images, complex deployment logic) alongside the security benefits of an agent-based connection.

💡 Pro Tip: You can run the GitLab Agent alongside Flux or ArgoCD. Use the agent for CI/CD-driven deployments and your GitOps tool for continuous reconciliation—they complement rather than compete.

With the security model understood, let’s install the agent in your cluster and configure it to accept deployments from your projects.

Installing and Configuring the GitLab Agent in Your Cluster

Setup involves four steps: creating a configuration repository, registering the agent with GitLab, deploying it via Helm, and configuring appropriate RBAC permissions for your deployment workflows.

Creating the Agent Configuration Repository

The agent requires a configuration file stored within your GitLab project. This file defines which projects and groups can use the agent for deployments, establishing the authorization boundary for your CI/CD pipelines. Store it at .gitlab/agents/<agent-name>/config.yaml, following GitLab’s strict naming convention; the path must match exactly for the agent to discover its configuration:

    ci_access:
      projects:
        - id: mygroup/deployment-project
          default_namespace: production
        - id: mygroup/staging-deployments
          default_namespace: staging
      groups:
        - id: mygroup/infrastructure
          default_namespace: infra

    observability:
      logging:
        level: info
        grpc_level: warn

The ci_access block defines which projects and groups can use this agent for deployments. Each entry specifies a default namespace, so a pipeline that omits the namespace does not land in an unintended one. This configuration acts as an allowlist: any project or group not explicitly listed cannot authenticate through this agent, providing an additional layer of access control beyond Kubernetes RBAC.
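The directory layout described above can be created with a few commands run from the repository root. This is a minimal sketch: the agent name production-agent is an assumption borrowed from later examples, and the script only creates an empty config.yaml for you to fill in.

```shell
#!/bin/sh
# Hypothetical agent name; it must match the name you register in GitLab.
AGENT_NAME="production-agent"
CONFIG_DIR=".gitlab/agents/${AGENT_NAME}"

# Create the directory the agent looks in, relative to the repository root.
mkdir -p "${CONFIG_DIR}"

# Create an empty config.yaml only if one does not exist yet,
# so re-running the script never overwrites your configuration.
if [ ! -f "${CONFIG_DIR}/config.yaml" ]; then
  : > "${CONFIG_DIR}/config.yaml"
fi

echo "Agent configuration path: ${CONFIG_DIR}/config.yaml"
```

Commit the resulting file to the default branch of the configuration project so the agent can find it.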

Registering the Agent and Generating the Access Token

Before installing the agent in your cluster, you must register it within GitLab to obtain the authentication token. Navigate to Operate → Kubernetes clusters in your project, select Connect a cluster, and choose your agent configuration from the dropdown. GitLab generates a unique access token upon registration—store this immediately in your secrets management solution, as the token appears only once and cannot be retrieved later. If you lose the token, you must reset it, which requires redeploying the agent with the new credentials.

Helm-Based Agent Installation

With the token secured, deploy the agent using Helm. The following values file (saved as agent-values.yaml, the name the install command below references) includes settings aimed at high availability, bounded resource usage, and a restrictive security context:

    replicas: 2

    config:
      token: ""  # Provided via --set or external secret
      kasAddress: wss://kas.gitlab.com

    rbac:
      create: true

    serviceAccount:
      create: true
      name: gitlab-agent

    resources:
      limits:
        cpu: 500m
        memory: 256Mi
      requests:
        cpu: 100m
        memory: 128Mi

    affinity:
      podAntiAffinity:
        preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchLabels:
                  app: gitlab-agent
              topologyKey: kubernetes.io/hostname

    securityContext:
      runAsNonRoot: true
      runAsUser: 1000
      readOnlyRootFilesystem: true

The podAntiAffinity configuration asks the scheduler to place agent replicas on different nodes where possible, which helps maintain availability during node failures or maintenance windows. Because the rule is a preference rather than a requirement, replicas can still share a node when no other node is available. The security context enforces non-root execution and an immutable filesystem, aligning with container security best practices and many compliance frameworks.

Install the agent with Helm:

    helm repo add gitlab https://charts.gitlab.io
    helm repo update

    kubectl create namespace gitlab-agent

    helm upgrade --install production-agent gitlab/gitlab-agent \
      --namespace gitlab-agent \
      --set config.token="glagent-Rz8nV2xKmPqL5wYcT9bF3hJd" \
      --set config.kasAddress="wss://kas.gitlab.com" \
      --values agent-values.yaml

💡 Pro Tip: Store the agent token in a Kubernetes Secret managed by your secrets management solution (Vault, Sealed Secrets, or External Secrets Operator) rather than passing it directly in the Helm command. This approach enables token rotation without modifying deployment scripts and prevents sensitive values from appearing in shell history or CI logs.
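As a rough illustration of the Secret-based approach, the sketch below renders a Secret manifest for the token so that it can be piped to kubectl instead of appearing in a Helm flag. The function name render_agent_token_secret, the secret name, and the token key are assumptions made for this example, not names taken from the chart.

```shell
#!/bin/sh
# Print a Secret manifest holding the agent token to stdout.
# Assumptions: the function name, the secret name, and the "token" key
# are illustrative; confirm what your chart version expects.
render_agent_token_secret() {
  secret_name="$1"
  namespace="$2"
  token="$3"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${secret_name}
  namespace: ${namespace}
type: Opaque
stringData:
  token: "${token}"
EOF
}
```

A typical use would be to pipe the output to kubectl apply -f - with the token read from an environment variable, then point the chart at the existing Secret instead of setting config.token. Whether your chart version offers a value for that (for example config.secretName) is an assumption to verify with helm show values gitlab/gitlab-agent.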

Setting Up RBAC Permissions

The agent needs Kubernetes permissions matching your deployment requirements. Choosing between namespace-scoped and cluster-wide permissions significantly impacts your security posture. For namespace-scoped deployments (recommended for most workloads):

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: gitlab-agent-deployer
      namespace: production
    rules:
      - apiGroups: ["", "apps", "batch"]
        resources: ["deployments", "services", "configmaps", "secrets", "pods", "jobs"]
        verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
      - apiGroups: ["networking.k8s.io"]
        resources: ["ingresses"]
        verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: gitlab-agent-deployer
      namespace: production
    subjects:
      - kind: ServiceAccount
        name: gitlab-agent
        namespace: gitlab-agent
    roleRef:
      kind: Role
      name: gitlab-agent-deployer
      apiGroup: rbac.authorization.k8s.io

For cluster-wide deployments (required for CRDs, namespaces, or cluster-scoped resources), use ClusterRole and ClusterRoleBinding instead. Apply the principle of least privilege: start with namespace-scoped permissions and expand only when specific deployment requirements demand broader access. Document any cluster-wide permissions in your security runbooks, as these represent elevated risk that warrants additional review during audits.

Verifying the Agent Connection

Confirm the agent is connected by checking the GitLab UI under Operate → Kubernetes clusters. The agent should show a “Connected” status with the last contact timestamp, indicating successful gRPC stream establishment. You can also verify the deployment locally and troubleshoot any connection issues:

    kubectl get pods -n gitlab-agent
    kubectl logs -n gitlab-agent -l app=gitlab-agent --tail=50

Look for successful connection messages indicating the agent has established a gRPC stream to the GitLab Agent Server (KAS). Common issues include network policies blocking egress traffic, corporate proxies intercepting WebSocket connections, or expired tokens requiring regeneration.

With the agent running and connected, your cluster is ready to receive deployment commands securely. Next, we’ll configure your CI/CD pipelines to authenticate using the agent’s Kubernetes context.

Connecting CI/CD Pipelines to the Agent with Kubernetes Context

With the GitLab Agent installed and running in your cluster, the next step is configuring your CI/CD pipelines to authenticate through it. This eliminates the need to store kubeconfig files or service account tokens as CI/CD variables—the agent handles authentication transparently through a secure, bidirectional tunnel between GitLab and your cluster.

Authorizing Projects to Use the Agent

Before a pipeline can use the agent, you must explicitly authorize the project or group. This authorization happens in the agent’s configuration file within the agent’s configuration repository. Without this explicit grant, pipelines will fail to establish a connection to the cluster, regardless of other permissions.

    ci_access:
      projects:
        - id: mygroup/myproject
        - id: mygroup/another-project
          default_namespace: staging
      groups:
        - id: mygroup/frontend-team

The ci_access block defines which projects and groups can connect to this agent from their pipelines. The optional default_namespace setting specifies where Kubernetes commands execute when the pipeline doesn’t explicitly set one, which is particularly useful for teams that consistently deploy to the same namespace.

After updating this configuration file and pushing to the repository, the agent picks up the changes automatically—no restart required. This dynamic configuration reload means you can onboard new projects without any cluster-side intervention.

Configuring the Kubernetes Context in Pipelines

GitLab injects a kubeconfig into the jobs of every project authorized in ci_access. The file is exposed through the KUBECONFIG environment variable, which kubectl and Helm read automatically, and it contains one context per agent the project is allowed to use. This injection happens at job startup, before your script commands execute.

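    deploy:
      image:
        name: bitnami/kubectl:1.28
        entrypoint: [""]  # the image's default entrypoint is kubectl, not a shell
      script:
        - kubectl config get-contexts
        # Select the agent's context before running cluster commands
        - kubectl config use-context mygroup/agent-config:production-agent
        - kubectl get pods -n production
      environment:
        name: production
        kubernetes:
          agent: mygroup/agent-config:production-agent

The kubernetes.agent field follows the format path/to/agent-config-project:agent-name and associates the environment with that agent. The same string is the name of the kubeconfig context, which the job selects with kubectl config use-context before running other commands. The job runs with full kubectl access scoped to whatever RBAC permissions you granted the agent’s service account. If the agent’s service account lacks permission for an operation, kubectl commands will fail with standard Kubernetes authorization errors, because the agent doesn’t bypass RBAC.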
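Because the agent reference is a single string with two parts, scripts that build context names dynamically sometimes need to split or validate it. The helpers below are a small sketch of that; their names are hypothetical and they rely only on POSIX parameter expansion.

```shell
#!/bin/sh
# Split an agent reference of the form <project-path>:<agent-name>.
# All three helper names are hypothetical, used for illustration only.

# Everything before the last colon is the configuration project path.
agent_project() {
  printf '%s\n' "${1%:*}"
}

# Everything after the last colon is the registered agent name.
agent_name() {
  printf '%s\n' "${1##*:}"
}

# Succeed only when both halves are present and non-empty.
is_valid_agent_ref() {
  case "$1" in
    ?*:?*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For the reference mygroup/agent-config:production-agent, agent_project yields the project path and agent_name yields production-agent. A pipeline could call is_valid_agent_ref early to fail fast on a malformed value.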

Environment-Specific Agent Selection

Production deployments demand strict separation from staging environments. Configure different agents for each environment to enforce this boundary at the infrastructure level rather than relying solely on namespace isolation or RBAC policies within a single cluster.

    .deploy_base:
      image:
        name: alpine/helm:3.14
        entrypoint: [""]
      script:
        # Point Helm at the agent context for the target environment
        - helm upgrade --install myapp ./chart -n $KUBE_NAMESPACE --kube-context $KUBE_CONTEXT

    deploy_staging:
      extends: .deploy_base
      variables:
        KUBE_NAMESPACE: staging
        KUBE_CONTEXT: mygroup/agent-config:staging-agent
      environment:
        name: staging
        kubernetes:
          agent: mygroup/agent-config:staging-agent

    deploy_production:
      extends: .deploy_base
      variables:
        KUBE_NAMESPACE: production
        KUBE_CONTEXT: mygroup/agent-config:production-agent
      environment:
        name: production
        kubernetes:
          agent: mygroup/agent-config:production-agent
      rules:
        - if: $CI_COMMIT_BRANCH == "main"
          when: manual

Each environment references a distinct agent, and each agent runs in its respective cluster with appropriate RBAC permissions. The staging agent has no access to production resources, and vice versa. This separation ensures that even a misconfigured pipeline or compromised CI variable cannot accidentally target the wrong environment, because the agent simply has no connectivity to clusters it was not installed in.

💡 Pro Tip: Name your agents descriptively (e.g., us-east-prod-agent, eu-west-staging-agent) to make pipeline configurations self-documenting. When reviewing merge requests, reviewers immediately understand which cluster a deployment targets without needing to cross-reference external documentation.

Working with Multiple Clusters

Organizations running workloads across multiple clusters—whether for geographic distribution, compliance requirements, or disaster recovery—register a separate agent in each cluster. The pipeline then selects the appropriate agent based on deployment logic, enabling sophisticated multi-region deployment strategies.

    deploy_us:
      environment:
        name: production/us-east
        kubernetes:
          agent: infrastructure/agents:us-east-1-prod

    deploy_eu:
      environment:
        name: production/eu-west
        kubernetes:
          agent: infrastructure/agents:eu-west-1-prod
      needs: [deploy_us]

The agent configuration repository (infrastructure/agents in this example) contains the configuration files for all agents, each registered with a descriptive name matching its cluster location. Centralizing agent configurations in a dedicated repository simplifies governance: security teams can audit all cluster access grants in one location, and infrastructure changes follow standard merge request workflows.

For organizations with stringent compliance requirements, consider maintaining separate agent configuration repositories per environment tier. This allows different approval workflows for production versus non-production agent access grants.

With pipeline authentication configured, you’re ready to build complete deployment workflows. The next section walks through a production-grade Helm deployment pipeline that leverages these agent connections for secure, repeatable releases.

Building a Complete Deployment Pipeline with Helm and the Agent

With the GitLab Agent connected and your pipeline authenticated to your cluster, you’re ready to build a production-grade deployment workflow. This section walks through a multi-stage pipeline that builds container images, scans for vulnerabilities, deploys to staging with automated validation, and promotes to production with manual approval gates. The patterns demonstrated here reflect industry best practices for continuous delivery to Kubernetes environments.

The Multi-Stage Pipeline Structure

A robust Kubernetes deployment pipeline separates concerns across distinct stages. Each stage has clear entry and exit criteria, and failures at any point prevent progression to subsequent stages. This structure ensures that only validated, secure artifacts reach production while providing visibility into the deployment process at every step.

    stages:
      - build
      - scan
      - deploy-staging
      - validate
      - deploy-production

    variables:
      HELM_RELEASE_NAME: myapp
      HELM_CHART_PATH: ./charts/myapp
      STAGING_NAMESPACE: staging
      PRODUCTION_NAMESPACE: production

    build:
      stage: build
      image: docker:24.0
      services:
        - docker:24.0-dind
      script:
        # Authenticate to the project's container registry before pushing
        - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
        - docker build -t $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA .
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
      rules:
        - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH

    container-scan:
      stage: scan
      image:
        name: aquasec/trivy:latest
        entrypoint: [""]
      variables:
        # Let Trivy pull the image from the private project registry
        TRIVY_USERNAME: $CI_REGISTRY_USER
        TRIVY_PASSWORD: $CI_REGISTRY_PASSWORD
      script:
        - trivy image --exit-code 1 --severity HIGH,CRITICAL $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
      allow_failure: false

The allow_failure: false on the security scan (already the default for non-manual jobs, but stated explicitly here) ensures that images with known high-severity vulnerabilities never reach your clusters. This gate acts as your first line of defense, catching known CVEs before they can be exploited in any environment.

Helm Deployments with Agent Context

Helm deployments through the agent require setting the Kubernetes context before running Helm commands. The agent provides this context automatically when you reference it in your job configuration. This approach eliminates the need to manage kubeconfig files or service account tokens directly in your CI/CD variables, significantly reducing the attack surface for credential theft.

The hidden .deploy-base job below defines reusable configuration that both staging and production deployments inherit. This DRY approach ensures consistency across environments while allowing environment-specific overrides through variables and value files.

    .deploy-base:
      image:
        name: alpine/helm:3.14.0
        entrypoint: [""]
      before_script:
        # The Helm image does not bundle kubectl, so select the agent
        # context through Helm's own HELM_KUBECONTEXT variable instead
        - export HELM_KUBECONTEXT="${KUBE_CONTEXT}"
        - helm dependency update ${HELM_CHART_PATH}

    deploy-staging:
      extends: .deploy-base
      stage: deploy-staging
      variables:
        KUBE_CONTEXT: mygroup/infrastructure:production-cluster
      script:
        - |
          helm upgrade --install ${HELM_RELEASE_NAME} ${HELM_CHART_PATH} \
            --namespace ${STAGING_NAMESPACE} \
            --create-namespace \
            --set image.repository=${CI_REGISTRY_IMAGE} \
            --set image.tag=${CI_COMMIT_SHA} \
            --set environment=staging \
            --values ./charts/myapp/values-staging.yaml \
            --wait \
            --timeout 5m
      environment:
        name: staging
        url: https://staging.myapp.example.com

The --wait flag tells Helm to block until all resources reach a ready state, which integrates naturally with GitLab’s pipeline status tracking. Combined with --timeout, this ensures your pipeline accurately reflects the actual deployment state rather than simply confirming that Kubernetes accepted the manifest.

Implementing Deployment Gates

Production deployments require explicit approval. GitLab’s when: manual combined with environment protection rules creates a secure promotion workflow. The validation stage between staging and production deployment serves as an automated quality gate, verifying that the staging deployment is actually serving traffic correctly before allowing promotion.

    validate-staging:
      stage: validate
      image: curlimages/curl:8.5.0
      script:
        - |
          for i in $(seq 1 30); do
            HTTP_CODE=$(curl -s -o /dev/null -w "%{http_code}" https://staging.myapp.example.com/health)
            if [ "$HTTP_CODE" = "200" ]; then
              echo "Health check passed"
              exit 0
            fi
            echo "Attempt $i: HTTP $HTTP_CODE - waiting..."
            sleep 10
          done
          echo "Health check failed after 30 attempts"
          exit 1
      needs:
        - deploy-staging

    deploy-production:
      extends: .deploy-base
      stage: deploy-production
      variables:
        KUBE_CONTEXT: mygroup/infrastructure:production-cluster
      script:
        - |
          helm upgrade --install ${HELM_RELEASE_NAME} ${HELM_CHART_PATH} \
            --namespace ${PRODUCTION_NAMESPACE} \
            --set image.repository=${CI_REGISTRY_IMAGE} \
            --set image.tag=${CI_COMMIT_SHA} \
            --set environment=production \
            --set replicaCount=3 \
            --values ./charts/myapp/values-production.yaml \
            --wait \
            --timeout 10m
      environment:
        name: production
        url: https://myapp.example.com
      when: manual
      needs:
        - validate-staging

💡 Pro Tip: Configure protected environments in GitLab’s project settings to require approval from specific users or groups before the manual job can be triggered. This adds an additional layer of authorization beyond the manual gate itself.

Automated Rollback on Failure

When health checks fail post-deployment, automated rollback prevents extended downtime. Helm maintains release history, making rollbacks straightforward. You can configure Helm to retain a specific number of releases using the --history-max flag, balancing the ability to roll back against cluster resource consumption.

    rollback-production:
      extends: .deploy-base
      stage: deploy-production
      variables:
        KUBE_CONTEXT: mygroup/infrastructure:production-cluster
      script:
        - helm rollback ${HELM_RELEASE_NAME} --namespace ${PRODUCTION_NAMESPACE} --wait
      environment:
        name: production
      when: manual
      needs:
        - deploy-production

For automated rollback, you can extend the deployment job itself to catch failures and roll back within the same job execution. This approach minimizes the window of degraded service by eliminating the need for manual intervention when deployments fail:

    deploy-production:
      # ... previous configuration ...
      script:
        - |
          helm upgrade --install ${HELM_RELEASE_NAME} ${HELM_CHART_PATH} \
            --namespace ${PRODUCTION_NAMESPACE} \
            --set image.tag=${CI_COMMIT_SHA} \
            --values ./charts/myapp/values-production.yaml \
            --wait \
            --timeout 10m \
            --atomic

The --atomic flag automatically triggers a rollback if the deployment fails or times out, ensuring your production environment returns to its last known good state without manual intervention. Under the hood, --atomic implies --wait, so you can technically omit the explicit --wait flag when using atomic deployments, though including both makes the intent clearer for future maintainers.

This pipeline provides a foundation you can extend with additional validation stages, canary deployments, or integration with external approval systems. Consider adding smoke tests that exercise critical user journeys, performance benchmarks that compare against baseline metrics, or integration with PagerDuty or Slack for deployment notifications. However, even well-designed pipelines encounter issues—the agent connection can fail, permissions can be misconfigured, and deployments can time out for unexpected reasons.

Troubleshooting Agent Connectivity and Permission Issues

Production deployments surface edge cases that documentation glosses over. When your pipeline fails at 2 AM with cryptic Kubernetes errors, systematic debugging separates a quick fix from hours of frustration. This section covers the most common failure modes and provides concrete diagnostic steps to resolve them efficiently.

Diagnosing Agent-to-GitLab Connection Failures

The agent maintains a persistent gRPC connection to GitLab’s Kubernetes Agent Server (KAS). When this connection breaks, pipelines fail with timeout errors before any deployment logic runs. Understanding the connection lifecycle helps pinpoint where failures occur.

Start by checking the agent pod’s logs:

    kubectl logs -n gitlab-agent -l app=gitlab-agent --tail=100

    # Look for connection state messages
    kubectl logs -n gitlab-agent -l app=gitlab-agent | grep -E "(connect|disconnect|error)"

Healthy agents log Connected to gRPC server followed by periodic heartbeat confirmations. If you see repeated dial tcp: i/o timeout errors, the agent cannot reach KAS, so check network policies, egress rules, and proxy configurations. DNS resolution failures present similarly, so verify the agent can resolve kas.gitlab.com (or your self-managed KAS hostname) from within the cluster.

For agents behind corporate proxies, verify the HTTPS_PROXY environment variable is set in the agent deployment:

    kubectl get deployment -n gitlab-agent gitlab-agent -o jsonpath='{.spec.template.spec.containers[0].env}' | jq .

If proxy settings appear correct but connections still fail, confirm that your proxy allows WebSocket upgrades. Some corporate proxies block the connection upgrade that the agent’s streaming connection requires.

Common RBAC Misconfigurations

RBAC issues manifest as forbidden errors in pipeline output. The agent’s service account needs permissions matching your deployment operations, and missing permissions often surface only when specific resources are touched.

When Helm deployments fail with secrets is forbidden, the agent lacks permissions to manage release metadata:

    # Check what the agent can actually do
    kubectl auth can-i create deployments --as=system:serviceaccount:gitlab-agent:gitlab-agent -n production

    # List all permissions for the agent service account
    kubectl auth can-i --list --as=system:serviceaccount:gitlab-agent:gitlab-agent -n production

The fix requires updating your ClusterRole or Role bindings. A common oversight: granting deployments access but forgetting replicasets, which Deployment controllers create automatically. Similarly, Helm requires access to secrets for storing release state, even if your charts don’t explicitly define secrets. Review your deployment manifests and trace every resource type that gets created, including those generated by controllers.
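Checking resources one at a time gets tedious, so the individual can-i checks above can be wrapped in a loop. The sketch below is one way to do that; the function name check_agent_permissions and the KUBECTL override variable are assumptions introduced for this example, and only the create verb is checked.

```shell
#!/bin/sh
# Report which resource types a service account cannot create.
# KUBECTL can be overridden (for example with a wrapper script);
# the variable and the function name are hypothetical.
KUBECTL="${KUBECTL:-kubectl}"

check_agent_permissions() {
  service_account="$1"
  namespace="$2"
  shift 2
  missing=0
  for resource in "$@"; do
    # "kubectl auth can-i" prints "yes" or "no" on stdout.
    answer="$("$KUBECTL" auth can-i create "$resource" \
      --as="$service_account" -n "$namespace" 2>/dev/null)"
    if [ "$answer" != "yes" ]; then
      echo "missing: create $resource"
      missing=1
    fi
  done
  # Non-zero exit status when at least one permission is missing.
  return "$missing"
}
```

Calling check_agent_permissions system:serviceaccount:gitlab-agent:gitlab-agent production deployments replicasets secrets would cover the resource types mentioned above and make a pipeline job fail early when one is missing.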

Debugging Context Not Found Errors

Pipelines failing with error: context "my-cluster:gitlab-agent" was not set indicate a mismatch between your .gitlab-ci.yml context name and the agent’s registration. This error frequently occurs when copying pipeline configurations between projects.

Verify the correct context path:

    # In your pipeline, list available contexts
    kubectl config get-contexts

    # The context follows this pattern: <project-path>:<agent-name>
    # Example: mygroup/infrastructure:production-agent

The context name derives from the project housing your agent configuration, not the project running the pipeline. Cross-project agent access requires explicit authorization in the agent’s config.yaml. When multiple agents exist across different projects, double-check that you reference the full path including any parent groups.
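A pipeline can also perform this check itself and print the available contexts when the expected one is missing. The helper below is a sketch under that assumption; require_context is a hypothetical name, and it reads context names from standard input so that it works with the output of kubectl config get-contexts -o name.

```shell
#!/bin/sh
# Read context names (one per line) from stdin and verify that the
# wanted context is among them. The function name is hypothetical.
require_context() {
  wanted="$1"
  contexts="$(cat)"
  # -F: fixed string, -x: whole-line match, -q: no output.
  if printf '%s\n' "$contexts" | grep -Fxq -- "$wanted"; then
    echo "context found: $wanted"
    return 0
  fi
  echo "context not found: $wanted" >&2
  echo "available contexts:" >&2
  printf '%s\n' "$contexts" >&2
  return 1
}
```

In a job this would be used as kubectl config get-contexts -o name | require_context "mygroup/infrastructure:production-agent", placed before the first command that needs the cluster.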

Monitoring Agent Health

Expose the agent’s Prometheus metrics endpoint to catch connectivity issues before they break deployments. Proactive monitoring transforms reactive firefighting into predictable maintenance windows.

    kubectl port-forward -n gitlab-agent svc/gitlab-agent-metrics 8080:8080 &
    curl -s localhost:8080/metrics | grep gitlab_kas

    # Key metrics to monitor:
    # gitlab_kas_tunnel_connections_total - connection attempts
    # gitlab_kas_request_duration_seconds - latency to GitLab

💡 Pro Tip: Alert on gitlab_kas_tunnel_connections_total increasing without a corresponding increase in successful connections. This pattern signals persistent connectivity problems before pipeline failures surface. Combine this with latency monitoring to distinguish between network issues and KAS-side problems.

With the agent running reliably, you can extend beyond push-based CI/CD. The agent’s pull-based reconciliation enables full GitOps workflows where the cluster watches your repository for changes.

Extending the Pattern: GitOps with the Agent’s Pull-Based Workflow

The CI/CD push model we’ve implemented provides strong security guarantees, but GitOps takes the pattern further by inverting the deployment flow entirely. Instead of pipelines pushing changes to clusters, the agent continuously reconciles cluster state with your Git repository—eliminating pipeline-to-cluster permissions altogether.

Push vs Pull: Choosing the Right Model

CI/CD push deployments work well when you need immediate feedback loops, complex deployment orchestration, or integration with external approval systems. The pipeline triggers a deployment, waits for completion, and reports success or failure inline with your merge request.

Pull-based GitOps excels in multi-cluster environments, drift detection scenarios, and organizations prioritizing auditability. The agent watches your repository for changes and applies them automatically, treating Git as the single source of truth. Every production state change traces back to a commit.

The decision often comes down to deployment complexity. If your releases involve database migrations, feature flag coordination, or staged rollouts with manual gates, push-based pipelines give you the control flow primitives to orchestrate these steps. If your deployments are declarative manifest applications, GitOps removes an entire class of pipeline failure modes.

Configuring Manifest Synchronization

The GitLab agent supports GitOps through its manifest synchronization feature. You define watched paths in your agent configuration, and the agent polls your repository for changes at configurable intervals. When manifests change, the agent applies them using server-side apply semantics, providing consistent reconciliation behavior. GitLab has deprecated this built-in synchronization in favor of Flux, so confirm that your GitLab version still supports it before building on it.

This configuration lives in the same .gitlab/agents/<agent-name>/config.yaml file you’ve already created. You specify which directories contain Kubernetes manifests, which namespace to deploy into, and how frequently to check for updates.

💡 Pro Tip: Start with a dedicated GitOps repository separate from your application code. This separation lets you control deployment permissions independently and keeps your manifest history clean.

The Hybrid Approach

Many organizations land on a hybrid model: CI/CD pipelines handle builds, tests, and image publishing, while GitOps manages the actual cluster state. Your pipeline updates image tags in a GitOps repository, and the agent deploys the change. This gives you pipeline observability for the build phase and GitOps benefits for deployment.
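The hand-off from pipeline to GitOps repository usually comes down to rewriting one value and committing it. The sketch below shows the rewrite step only, assuming a hypothetical values file whose image tag lives on a line of the form tag: "..."; the function name set_image_tag is also an assumption.

```shell
#!/bin/sh
# Replace the value of the "tag:" key in a values file, preserving
# its indentation. Assumes the tag contains no "|" or "&" characters,
# which holds for commit SHAs. The function name is hypothetical.
set_image_tag() {
  file="$1"
  new_tag="$2"
  tmp="$(mktemp)"
  # Write to a temporary file first so a failed sed never truncates
  # the original.
  sed "s|^\([[:space:]]*tag:\).*|\1 \"${new_tag}\"|" "$file" > "$tmp" \
    && mv "$tmp" "$file"
}
```

A pipeline job would call set_image_tag with the checked-out GitOps file and $CI_COMMIT_SHA, then commit and push the change. Every line starting with tag: is rewritten, so a file with several such keys needs a more specific pattern or a YAML-aware tool.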

For existing pipelines, migration happens incrementally. Start by adding agent-based GitOps to a non-production cluster while keeping your push-based production deployments. Once you’ve validated the reconciliation behavior and built confidence in the pull model, promote the pattern upward.

The agent-based architecture you’ve built provides the foundation for either approach—and the flexibility to evolve as your security and operational requirements mature.

Key Takeaways

- Install the GitLab Agent in each cluster to eliminate kubeconfig secrets from CI/CD variables entirely

- Scope agent RBAC permissions to specific namespaces rather than granting cluster-admin access

- Use the kubernetes keyword in .gitlab-ci.yml to associate each deployment job with its agent

- Implement environment-specific agents to enforce separation between staging and production clusters

- Monitor agent connectivity proactively using the built-in Prometheus metrics endpoint