Ambassador Pattern: Building Resilient Proxies That Handle the Chaos Your Services Can't

Your microservice just hit a transient network failure for the third time this hour. The payment service timed out, the inventory check returned a 503, and now your order processing is stuck in limbo. You could add retry logic—a few lines of code, exponential backoff, maybe some jitter to prevent thundering herds. But then you need that same logic in the shipping service. And the notification service. And the analytics pipeline. Suddenly you’re maintaining identical resilience code across fifteen repositories, each with its own subtle bugs and configuration drift.

Circuit breakers? Same story. Rate limiting? You’ve now got three different implementations, none of which agree on what “rate” means. Every service becomes a little fatter, a little more coupled to infrastructure concerns that have nothing to do with its actual business logic. Your payment service should process payments. It shouldn’t need to know that the downstream fraud detection API has been flaky since Tuesday’s deployment.

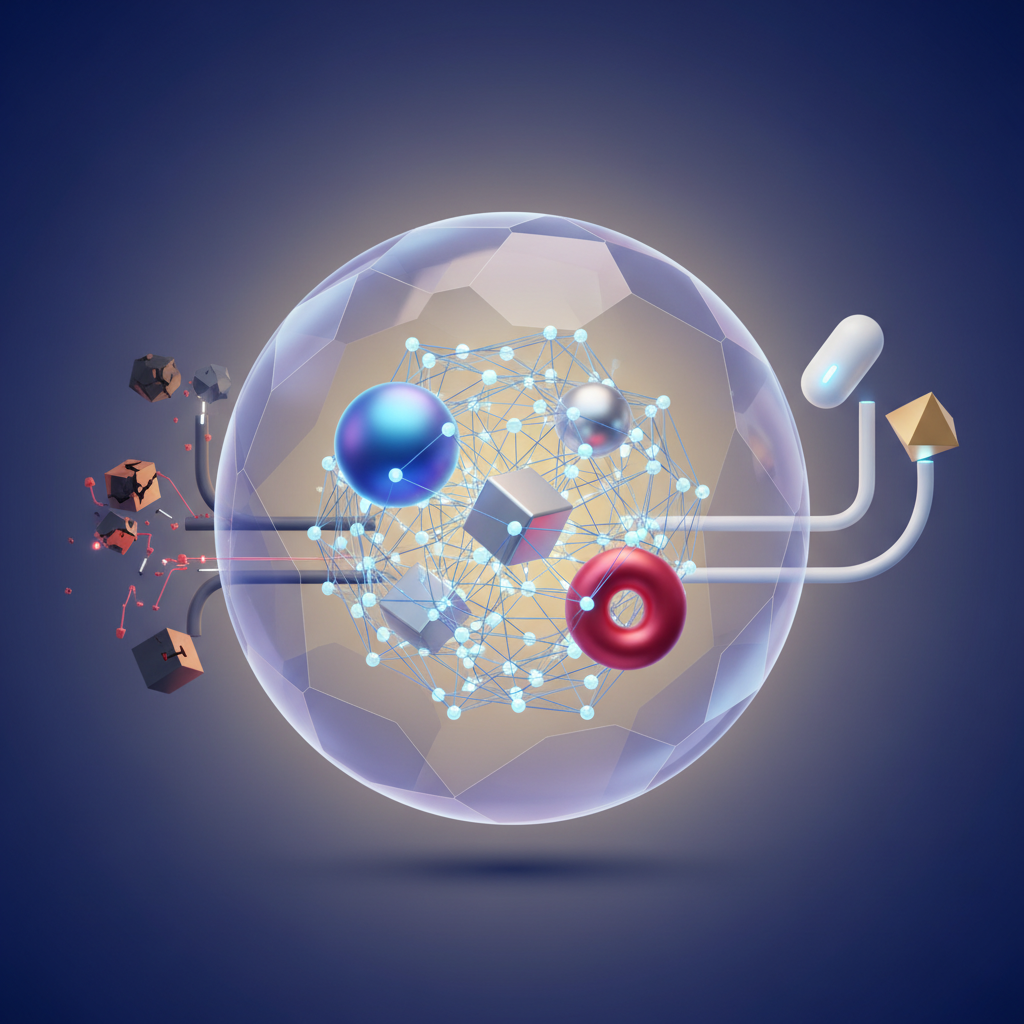

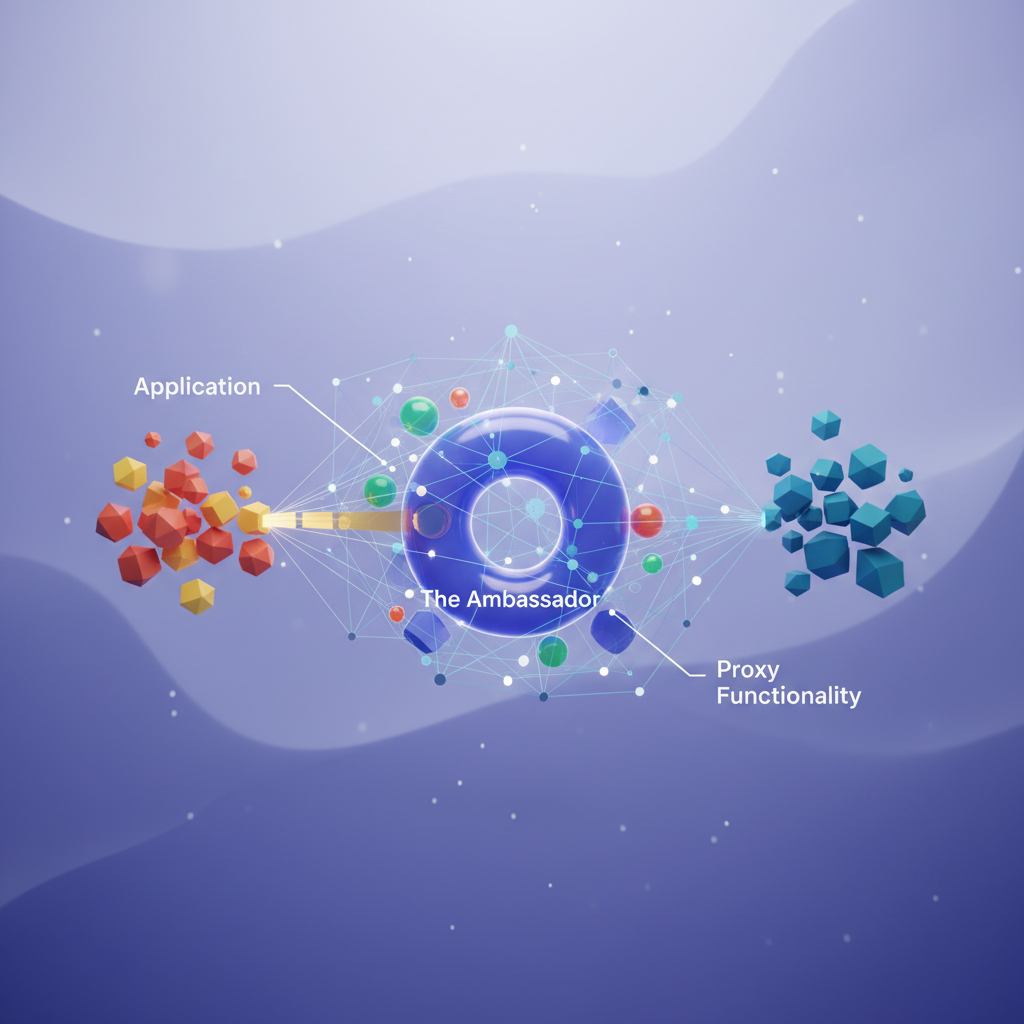

This is where the Ambassador pattern earns its name. Like a diplomatic ambassador handling protocol and communication on behalf of their government, an ambassador proxy sits alongside your service and manages all the messy realities of network communication. Retries, timeouts, circuit breaking, TLS termination, request routing—all of it lives in a dedicated component that your application code never sees. Your service makes a simple HTTP call to localhost. The ambassador handles everything else.

The result is genuine separation of concerns: business logic stays in your services, operational resilience lives in infrastructure. But building an ambassador that actually works in production—one that fails gracefully under pressure and doesn’t become a bottleneck itself—requires understanding both the pattern’s power and its pitfalls.

Why Your Services Shouldn’t Know About Network Failures

Picture a payment service that processes credit card transactions. It needs to call an external payment gateway, but networks fail. So you add retry logic. Then you add exponential backoff. Then circuit breaking when the gateway goes down. Then connection pooling. Then request timeouts. Then metrics collection for each of these behaviors.

Your 200-line payment service is now 800 lines, and half of it has nothing to do with payments.

The Cross-Cutting Concern Explosion

This pattern repeats across every service in your architecture. Your inventory service needs the same retry logic. So does your notification service, your user service, and your analytics pipeline. Each team implements these concerns slightly differently. Some use Resilience4j, others roll their own. Timeout values vary wildly. Circuit breaker thresholds are inconsistent.

You now have three problems:

- Duplicated logic scattered across dozens of services, each with its own bugs and edge cases

- Inconsistent behavior that makes debugging production issues a nightmare

- Tightly coupled code where business logic and infrastructure concerns are intertwined

When you need to update your retry strategy—say, switching from fixed delays to jittered exponential backoff—you’re looking at changes across every service, coordinated deployments, and the inevitable team that misses the memo.

Separation of Concerns for Distributed Systems

The single responsibility principle doesn’t stop at class boundaries. In distributed systems, your services should focus on business logic while infrastructure concerns live elsewhere.

This is where the Ambassador pattern enters. An ambassador is a proxy that sits alongside your service, handling all the messy details of network communication. Your payment service makes a simple HTTP call to localhost. The ambassador intercepts that call and handles retries, circuit breaking, timeouts, and observability before forwarding the request to its actual destination.

Your service code stays clean. It knows nothing about network failures, retry policies, or circuit breaker states. It just makes a call and gets a response—or a clear error.
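
From the application's side, that call can be as plain as the sketch below. The class name `PaymentClient`, port 9000, and the `/api/payments` path are assumptions for illustration. What matters is what is absent: no retry loop, no backoff, no circuit breaker.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical application-side client: it only knows about localhost.
final class PaymentClient {
    // Assumed ambassador address; the real upstream lives in the ambassador's config.
    static final URI AMBASSADOR = URI.create("http://localhost:9000/api/payments");

    private final HttpClient http = HttpClient.newHttpClient();

    // Builds the request. No retry, timeout tuning, or circuit breaking here.
    static HttpRequest chargeRequest(String jsonBody) {
        return HttpRequest.newBuilder(AMBASSADOR)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    // Sends the request and returns whatever status the ambassador reports.
    int charge(String jsonBody) throws IOException, InterruptedException {
        HttpResponse<String> response =
                http.send(chargeRequest(jsonBody), HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }
}
```

If the upstream moves or the retry policy changes, this class stays untouched; only the ambassador's configuration changes.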

Ambassador vs Direct Communication

With direct client-to-service communication, every service must implement its own resilience stack. With an ambassador, that logic moves to a dedicated component that can be configured, updated, and monitored independently.

The tradeoff is clear: you add a network hop and operational complexity in exchange for cleaner services and consistent cross-cutting behavior. For a monolith or a handful of services, this overhead isn’t worth it. For a platform with dozens of microservices maintained by multiple teams, the ambassador pattern pays for itself in reduced duplication and predictable failure handling.

💡 Pro Tip: The ambassador pattern shines when you have multiple services needing identical resilience behavior. If you’re running three services, embed the logic. If you’re running thirty, extract it.

Understanding when this tradeoff makes sense is essential—but first, let’s examine what actually belongs inside an ambassador proxy.

Anatomy of an Ambassador: What Lives in the Proxy

An ambassador proxy sits between your application and the outside world, handling everything your service shouldn’t care about. Understanding exactly what belongs in this proxy—and what doesn’t—determines whether you build a clean abstraction or a tangled mess.

Core Responsibilities

The ambassador owns four primary concerns:

Connection Management encompasses connection pooling, keep-alive handling, and protocol upgrades. Your application opens a connection to localhost; the ambassador maintains a pool of connections to the actual remote service. This separation means your app never deals with connection limits, DNS resolution, or TLS handshakes to external endpoints.

Retry Logic belongs entirely in the ambassador. When a request fails due to a transient network error or a 503 response, the proxy retries with exponential backoff. Your application code stays blissfully unaware that three attempts happened before success.

Circuit Breaking prevents cascade failures. When the ambassador detects that a downstream service is failing repeatedly, it stops sending requests entirely for a cooldown period. This protects both your application from wasted resources and the failing service from additional load.

Request Routing handles service discovery, load balancing across multiple instances, and directing traffic based on headers or paths. The ambassador translates your application’s simple localhost call into the correct destination.

The Sidecar Relationship

The ambassador pattern is a specialized form of the sidecar pattern. While sidecars can handle any auxiliary concern—logging agents, security proxies, configuration management—ambassadors specifically proxy outbound network calls. Every ambassador is a sidecar, but not every sidecar is an ambassador.

In Kubernetes, both deploy as additional containers in the same pod, sharing the network namespace. The distinction matters for mental modeling: when you hear “ambassador,” think outbound traffic management.

Observability Hooks

The proxy becomes a natural instrumentation point. Without touching application code, the ambassador captures:

- Request latency histograms

- Error rates by endpoint

- Retry counts and circuit breaker state changes

- Connection pool utilization

These metrics flow to your monitoring stack through standard exporters. The ambassador sees every outbound request, making it the single source of truth for external dependency health.

💡 Pro Tip: Keep business logic out of the ambassador. Validation, transformation, and authentication decisions belong in your application. The ambassador handles how requests reach their destination, never what those requests mean.

With this mental model of ambassador responsibilities established, let’s build one. The next section walks through a basic Java implementation that demonstrates these concepts in practice.

Building a Basic Ambassador in Java

Now that you understand what lives inside an ambassador proxy, let’s build one from scratch. This exercise reveals the core mechanics that commercial service meshes abstract away—and gives you the foundation to customize behavior for your specific needs.

The Proxy Architecture

Our ambassador sits between your application and external services, intercepting every outbound HTTP request. The application connects to localhost:8080 instead of the remote service, completely unaware that a proxy exists. This transparency is fundamental—you can add, remove, or modify the ambassador without touching application code.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.http.HttpClient;
import java.time.Duration;
import java.util.concurrent.Executors;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class AmbassadorProxy {
    private final HttpClient httpClient;
    private final String targetHost;
    private final int targetPort;
    private final Duration timeout;
    private final Logger logger = LoggerFactory.getLogger(AmbassadorProxy.class);

    public AmbassadorProxy(String targetHost, int targetPort, Duration timeout) {
        this.targetHost = targetHost;
        this.targetPort = targetPort;
        this.timeout = timeout;
        this.httpClient = HttpClient.newBuilder()
            .connectTimeout(timeout)
            .build();
    }

    public void start(int listenPort) throws IOException {
        // A backlog of 0 lets the system pick a default queue length.
        HttpServer server = HttpServer.create(new InetSocketAddress(listenPort), 0);
        server.createContext("/", this::handleRequest);
        // One virtual thread per request (requires Java 21 or later).
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        logger.info("Ambassador proxy listening on port {}, forwarding to {}:{}",
            listenPort, targetHost, targetPort);
    }

    // handleRequest and forwardResponse are shown in the following sections.
}
```

The constructor accepts configuration that would otherwise clutter your application code: the target host, port, and timeout duration. Virtual threads (available from Java 21) handle concurrent requests without the thread-pool sizing headaches of traditional approaches. Each incoming request gets its own lightweight virtual thread, so the proxy can handle thousands of concurrent connections with little memory overhead.

Transparent Request Forwarding

The request handler reconstructs incoming requests and forwards them to the target service. The key is preserving headers, query parameters, and the request body exactly as received. Any modification to these elements could break downstream services that depend on specific header values or payload formats.

```java
private void handleRequest(HttpExchange exchange) throws IOException {
    String method = exchange.getRequestMethod();
    String path = exchange.getRequestURI().toString();
    String targetUrl = String.format("http://%s:%d%s", targetHost, targetPort, path);

    long startTime = System.currentTimeMillis();
    String requestId = UUID.randomUUID().toString().substring(0, 8);

    logger.info("[{}] {} {} -> {}", requestId, method, path, targetUrl);

    try {
        HttpRequest.Builder requestBuilder = HttpRequest.newBuilder()
            .uri(URI.create(targetUrl))
            .timeout(timeout);

        // Copy headers from the original request. isHopByHopHeader must also
        // reject headers that java.net.http.HttpClient manages itself (such as
        // Host and Content-Length); header() throws IllegalArgumentException for those.
        exchange.getRequestHeaders().forEach((name, values) -> {
            if (!isHopByHopHeader(name)) {
                values.forEach(value -> requestBuilder.header(name, value));
            }
        });

        // Set method and body
        byte[] requestBody = exchange.getRequestBody().readAllBytes();
        requestBuilder.method(method, HttpRequest.BodyPublishers.ofByteArray(requestBody));

        HttpResponse<byte[]> response = httpClient.send(
            requestBuilder.build(),
            HttpResponse.BodyHandlers.ofByteArray()
        );

        forwardResponse(exchange, response, requestId, startTime);

    } catch (HttpTimeoutException e) {
        logger.warn("[{}] Request timed out after {}ms", requestId, timeout.toMillis());
        sendError(exchange, 504, "Gateway Timeout");
    } catch (Exception e) {
        logger.error("[{}] Proxy error: {}", requestId, e.getMessage());
        sendError(exchange, 502, "Bad Gateway");
    }
}
```

Notice how the ambassador translates exceptions into HTTP status codes. Your application receives a clean 504 Gateway Timeout instead of scattering timeout-exception handling across your codebase. Centralizing this keeps behavior consistent across all service calls: a 504 Gateway Timeout means the upstream did not answer in time, and a 502 Bad Gateway covers every other forwarding failure.
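
The exception-to-status mapping can also be pulled into a small pure function, which makes it easy to test without starting a server. This is a minimal sketch; `GatewayErrors` and its method names are hypothetical and not part of the proxy shown in this article.

```java
import java.net.http.HttpTimeoutException;

// Hypothetical helper: maps a forwarding failure to the status the caller sees.
final class GatewayErrors {
    // 504 for timeouts (including connect timeouts, which extend
    // HttpTimeoutException); 502 for every other forwarding failure.
    static int statusFor(Throwable failure) {
        if (failure instanceof HttpTimeoutException) {
            return 504;
        }
        return 502;
    }

    // Reason phrase matching the two statuses above.
    static String reasonFor(int status) {
        return status == 504 ? "Gateway Timeout" : "Bad Gateway";
    }
}
```

The `catch` blocks in the handler could then collapse into one that calls `statusFor(e)` and passes the result to the error writer.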

The request ID generated for each call ties together the ambassador’s own log lines for that request. As written, the ID stays inside the proxy. To trace a request from the application through the ambassador and into the target service, forward the ID in a header (X-Request-ID is a common choice) and reuse any ID the caller already supplied.
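
One way to make the ID usable across services is to reuse an incoming ID when the caller sends one and mint a new one otherwise. The sketch below assumes the `X-Request-ID` header name, which is a widespread convention rather than a standard; `RequestIds` is a hypothetical helper.

```java
import java.util.List;
import java.util.Map;
import java.util.UUID;

// Hypothetical helper for carrying a correlation ID through the proxy.
final class RequestIds {
    // Assumed header name; pick whatever your services already agree on.
    static final String HEADER = "X-Request-ID";

    // Reuses the caller's ID when present, otherwise mints a short random one.
    static String resolve(Map<String, List<String>> incomingHeaders) {
        for (Map.Entry<String, List<String>> entry : incomingHeaders.entrySet()) {
            if (HEADER.equalsIgnoreCase(entry.getKey()) && !entry.getValue().isEmpty()) {
                String value = entry.getValue().get(0);
                if (value != null && !value.isBlank()) {
                    return value;
                }
            }
        }
        return UUID.randomUUID().toString().substring(0, 8);
    }
}
```

In the request handler, the resolved ID would be logged as before and also added to the outbound request with `requestBuilder.header(RequestIds.HEADER, requestId)`.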

💡 Pro Tip: Filter out hop-by-hop headers like `Connection`, `Keep-Alive`, and `Transfer-Encoding` when forwarding. These headers apply only to the immediate connection and cause issues if passed through. HTTP/1.1 defines them as hop-by-hop, meaning a proxy must not forward them, and violating this causes subtle bugs that are difficult to diagnose.
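
The proxy code calls `isHopByHopHeader` without showing it. A possible implementation is sketched below; `ProxyHeaders` is a hypothetical name. It also covers a second concern: recent JDKs make `java.net.http.HttpClient` reject a few headers it manages itself (including `Host` and `Content-Length`), so those need to be skipped on the request side as well.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical helper for deciding which headers the proxy may copy.
final class ProxyHeaders {
    // The HTTP/1.1 hop-by-hop headers: meaningful for one connection only.
    private static final Set<String> HOP_BY_HOP = Set.of(
        "connection", "keep-alive", "proxy-authenticate", "proxy-authorization",
        "te", "trailer", "transfer-encoding", "upgrade");

    // Headers java.net.http.HttpClient sets on its own and refuses to accept.
    private static final Set<String> CLIENT_MANAGED = Set.of(
        "host", "content-length", "expect");

    // Header names are case-insensitive, so compare in lower case.
    static boolean isHopByHopHeader(String name) {
        return name != null && HOP_BY_HOP.contains(name.toLowerCase(Locale.ROOT));
    }

    // True when a request header can be copied onto the outbound HttpRequest.
    static boolean isForwardableRequestHeader(String name) {
        return name != null
            && !isHopByHopHeader(name)
            && !CLIENT_MANAGED.contains(name.toLowerCase(Locale.ROOT));
    }
}
```

On the request path the stricter `isForwardableRequestHeader` is the safer filter; on the response path `isHopByHopHeader` alone is enough.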

Configurable Timeout Handling

Timeouts deserve special attention. The ambassador enforces a consistent timeout policy across all outbound requests—something notoriously difficult to achieve when timeout configuration is spread across multiple service clients. Different teams often set conflicting timeout values, leading to unpredictable behavior during partial outages.

```java
import java.time.Duration;

public record AmbassadorConfig(
    String targetHost,
    int targetPort,
    int listenPort,
    Duration connectionTimeout,
    Duration requestTimeout
) {
    public static AmbassadorConfig fromEnvironment() {
        return new AmbassadorConfig(
            System.getenv().getOrDefault("TARGET_HOST", "api.payment-service.internal"),
            // Note: the proxy shown earlier builds plain http:// URLs, so a
            // target on port 443 would also need an https scheme.
            Integer.parseInt(System.getenv().getOrDefault("TARGET_PORT", "443")),
            Integer.parseInt(System.getenv().getOrDefault("LISTEN_PORT", "8080")),
            Duration.ofSeconds(Long.parseLong(
                System.getenv().getOrDefault("CONNECT_TIMEOUT_SECONDS", "5"))),
            Duration.ofSeconds(Long.parseLong(
                System.getenv().getOrDefault("REQUEST_TIMEOUT_SECONDS", "30")))
        );
    }
}
```

Environment-based configuration lets you tune timeouts per deployment without rebuilding. A payment service ambassador might use a 30-second timeout, while a cache lookup proxy wants something closer to 500 milliseconds (the variables above take whole seconds, so sub-second values would need a millisecond-based variable). This separation of configuration from code follows the twelve-factor app methodology and enables operations teams to adjust behavior without developer involvement.

The distinction between connection timeout and request timeout matters significantly. Connection timeout bounds how long the proxy waits to establish a TCP connection—useful for detecting network partitions quickly. Request timeout covers the entire round-trip including response body transfer, protecting against slow or stalled upstream services.
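
In `java.net.http`, the two timeouts are set in different places: the connect timeout on the client, the request timeout on each request. The sketch below shows the wiring; `TimeoutWiring` and its method names are hypothetical, and the durations would come from a configuration object such as the record above.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

// Hypothetical helper showing where each timeout is applied.
final class TimeoutWiring {
    // Connect timeout: set once, on the client.
    static HttpClient clientWith(Duration connectTimeout) {
        return HttpClient.newBuilder()
                .connectTimeout(connectTimeout)
                .build();
    }

    // Request timeout: set per request, so different routes can differ.
    static HttpRequest requestWith(URI target, Duration requestTimeout) {
        return HttpRequest.newBuilder(target)
                .timeout(requestTimeout)
                .GET()
                .build();
    }
}
```

The proxy shown earlier passes a single `timeout` to both places; splitting them lets a short connect timeout detect unreachable hosts quickly while still allowing slow but healthy responses.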

Request Logging Without Application Changes

Every request passes through the ambassador, creating a natural instrumentation point. The logging we added captures request IDs, timing, and outcomes without a single line of code in your application. This observability comes for free once the proxy is in place.

```java
private void forwardResponse(HttpExchange exchange, HttpResponse<byte[]> response,
                             String requestId, long startTime) throws IOException {
    long duration = System.currentTimeMillis() - startTime;

    logger.info("[{}] Response: {} in {}ms", requestId, response.statusCode(), duration);

    // Copy response headers
    response.headers().map().forEach((name, values) -> {
        if (!isHopByHopHeader(name)) {
            exchange.getResponseHeaders().put(name, values);
        }
    });

    byte[] body = response.body();
    // HttpExchange treats a length of 0 as "chunked, unknown length";
    // -1 is the value that means "no response body".
    exchange.sendResponseHeaders(response.statusCode(), body.length == 0 ? -1 : body.length);
    if (body.length > 0) {
        exchange.getResponseBody().write(body);
    }
    exchange.close();
}
```

The consistent log format enables powerful analysis. You can aggregate response times by endpoint, track error rates over time, and identify slow requests, all from ambassador logs. Teams often pipe these logs to observability platforms like Elasticsearch or Datadog for dashboards and alerting.

This basic ambassador already provides timeout enforcement, structured logging, and clean error translation. But production systems face intermittent failures that require more sophisticated handling—retries with backoff and circuit breakers that prevent cascade failures. Let’s add those resilience features next.

Adding Resilience: Retries and Circuit Breakers

A basic proxy that forwards requests is useful, but production traffic demands more. Network blips, overloaded services, and cascading failures will find every weakness in your system. The ambassador pattern shines when you add resilience mechanisms that shield your application from these realities.

Exponential Backoff with Jitter

Naive retry logic hammers failing services repeatedly, often making bad situations worse. When a service struggles under load, immediate retries pile additional requests onto an already overwhelmed system. Exponential backoff spaces out retries, giving downstream services time to recover. Adding jitter prevents the thundering herd problem where multiple clients retry simultaneously after a shared outage.

```java
import java.util.Random;
import java.util.function.Supplier;

public class RetryPolicy {
    private final int maxRetries;
    private final long baseDelayMs;
    private final long maxDelayMs;
    private final Random random = new Random();

    public RetryPolicy(int maxRetries, long baseDelayMs, long maxDelayMs) {
        this.maxRetries = maxRetries;
        this.baseDelayMs = baseDelayMs;
        this.maxDelayMs = maxDelayMs;
    }

    public long calculateDelay(int attemptNumber) {
        long exponentialDelay = baseDelayMs * (1L << attemptNumber);
        long cappedDelay = Math.min(exponentialDelay, maxDelayMs);
        // Add up to 30% on top of the capped delay.
        long jitter = (long) (random.nextDouble() * cappedDelay * 0.3);
        return cappedDelay + jitter;
    }

    // TransientException is assumed to be an unchecked exception defined elsewhere.
    public <T> T execute(Supplier<T> operation) throws Exception {
        Exception lastException = null;

        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return operation.get();
            } catch (TransientException e) {
                lastException = e;
                if (attempt < maxRetries) {
                    Thread.sleep(calculateDelay(attempt));
                }
            }
        }
        throw lastException;
    }
}
```

The 30% jitter factor spreads retry attempts across a time window rather than stacking them at exact intervals. For a base delay of 100ms, the first retry waits between 100 and 130ms, the second between 200 and 260ms, and so on. This randomization becomes critical at scale: when thousands of clients experience the same failure, jitter transforms a synchronized retry storm into a manageable trickle of requests.
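
To check those ranges, or to see where the cap kicks in, the delay window for any attempt can be computed directly. This is a small sketch that mirrors the `calculateDelay` formula (capped exponential delay plus up to 30% jitter); `BackoffBounds` is a hypothetical name, and the upper bound it returns is exclusive.

```java
// Hypothetical helper: the range calculateDelay can return for a given attempt.
final class BackoffBounds {
    // Returns {minimum delay, exclusive upper bound} in milliseconds.
    static long[] bounds(int attempt, long baseDelayMs, long maxDelayMs) {
        long capped = Math.min(baseDelayMs * (1L << attempt), maxDelayMs);
        long maxJitter = capped * 3 / 10; // 30% of the capped delay
        return new long[] { capped, capped + maxJitter };
    }
}
```

With a 100ms base and a 2-second cap, attempts 0 and 1 give 100 to 130ms and 200 to 260ms; by attempt 5 the exponential term (3200ms) is capped, so the window becomes 2000 to 2600ms.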

Circuit Breaker States

The circuit breaker pattern prevents your ambassador from repeatedly calling a service that’s clearly failing. Named after electrical circuit breakers that prevent fires by cutting power during overloads, this pattern protects both your application and downstream services from wasted effort. Three states govern the behavior:

Closed: Requests flow normally. The circuit breaker tracks failures, either by counting consecutive failures (as the implementation below does) or by computing a failure rate over a sliding window. This is the healthy state where your system operates as expected.

Open: All requests fail immediately without attempting the downstream call. This gives the failing service time to recover without the additional burden of handling doomed requests. Your application receives fast failures rather than waiting for timeouts, preserving thread pools and connection resources.

Half-Open: After a timeout, the circuit allows a limited number of test requests through. These probe requests determine whether the downstream service has recovered. Success transitions back to closed; failure reopens the circuit for another waiting period.
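
The three states and their transitions can be summarized as a small pure function. This is a sketch for reasoning about the state machine, not the implementation used in this article; the `Event` names are hypothetical labels for the conditions described above.

```java
// Hypothetical, side-effect-free model of the circuit breaker state machine.
final class BreakerTransitions {
    enum State { CLOSED, OPEN, HALF_OPEN }

    enum Event {
        FAILURE_THRESHOLD_REACHED, // enough failures while closed
        COOLDOWN_ELAPSED,          // open duration has passed
        PROBES_SUCCEEDED,          // required test requests succeeded
        PROBE_FAILED               // a test request failed
    }

    // Returns the next state; events that do not apply leave the state unchanged.
    static State next(State current, Event event) {
        switch (current) {
            case CLOSED:
                return event == Event.FAILURE_THRESHOLD_REACHED ? State.OPEN : current;
            case OPEN:
                return event == Event.COOLDOWN_ELAPSED ? State.HALF_OPEN : current;
            case HALF_OPEN:
                if (event == Event.PROBES_SUCCEEDED) return State.CLOSED;
                if (event == Event.PROBE_FAILED) return State.OPEN;
                return current;
            default:
                return current;
        }
    }
}
```

Note that there is no direct path from open to closed: recovery always goes through half-open.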

```java
import java.util.function.Supplier;

public class CircuitBreaker {
    // Public so that other components (such as metrics) can refer to the state.
    public enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final long openDurationMs;
    private final int halfOpenMaxAttempts;

    private State state = State.CLOSED;
    private int failureCount = 0;
    private int halfOpenAttempts = 0;
    private long lastFailureTime = 0;

    public CircuitBreaker(int failureThreshold, long openDurationMs, int halfOpenMaxAttempts) {
        this.failureThreshold = failureThreshold;
        this.openDurationMs = openDurationMs;
        this.halfOpenMaxAttempts = halfOpenMaxAttempts;
    }

    // CircuitOpenException is assumed to be defined elsewhere.
    // Caveat: synchronized keeps the state logic simple, but it also holds the
    // lock during the downstream call, so calls through one breaker run one at
    // a time. A production version would guard only the state changes.
    public synchronized <T> T execute(Supplier<T> operation) throws CircuitOpenException {
        if (state == State.OPEN) {
            if (System.currentTimeMillis() - lastFailureTime >= openDurationMs) {
                state = State.HALF_OPEN;
                halfOpenAttempts = 0;
            } else {
                throw new CircuitOpenException("Circuit is open");
            }
        }

        try {
            T result = operation.get();
            onSuccess();
            return result;
        } catch (Exception e) {
            onFailure();
            throw e;
        }
    }

    private void onSuccess() {
        if (state == State.HALF_OPEN) {
            halfOpenAttempts++;
            if (halfOpenAttempts >= halfOpenMaxAttempts) {
                state = State.CLOSED;
                failureCount = 0;
            }
        } else {
            failureCount = 0; // consecutive-failure counter resets on any success
        }
    }

    private void onFailure() {
        lastFailureTime = System.currentTimeMillis();
        failureCount++;
        if (failureCount >= failureThreshold || state == State.HALF_OPEN) {
            state = State.OPEN;
        }
    }
}
```

Distinguishing Failure Types

Not every error deserves a retry. Transient failures—timeouts, connection resets, 503 responses—indicate temporary conditions that may resolve on subsequent attempts. Permanent failures—400 bad requests, 404 not found, 401 unauthorized—won’t resolve by retrying and should propagate immediately to the caller.

The failure classifier makes this distinction explicit, enabling both retry policies and circuit breakers to react appropriately. Retrying a malformed request wastes resources and delays the inevitable error response. Conversely, giving up immediately on a momentary network hiccup sacrifices reliability unnecessarily.

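```java
import java.net.ConnectException;
import java.net.SocketTimeoutException;
import java.net.http.HttpTimeoutException;

// HttpResponseException is assumed to be an exception type that exposes the
// upstream status code through getStatusCode(); it is not defined in this article.
public class FailureClassifier {

    public boolean isTransient(Exception e) {
        if (e instanceof SocketTimeoutException) return true;
        // java.net.http.HttpClient reports timeouts with HttpTimeoutException.
        if (e instanceof HttpTimeoutException) return true;
        if (e instanceof ConnectException) return true;
        if (e instanceof HttpResponseException hre) {
            int status = hre.getStatusCode();
            return status == 503 || status == 502 || status == 504 || status == 429;
        }
        return false;
    }

    public boolean shouldCircuitBreak(Exception e) {
        if (e instanceof SocketTimeoutException) return true;
        if (e instanceof HttpTimeoutException) return true;
        if (e instanceof HttpResponseException hre) {
            return hre.getStatusCode() >= 500;
        }
        return false;
    }
}
```

💡 Pro Tip: Track circuit breaker state transitions as metrics. Frequent open/close cycling indicates a service hovering at its capacity limit, which is a signal to investigate scaling or timeout configuration.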

Configuring Thresholds for Production

Threshold values depend on your traffic patterns and SLAs. Aggressive thresholds open circuits quickly but risk false positives during brief hiccups. Conservative thresholds allow more failures through but better tolerate normal variance. A reasonable starting point:

- Failure threshold: 5 failures within a sliding window

- Open duration: 30 seconds for fast-recovering services, 60+ seconds for databases

- Half-open attempts: 3 successful requests before fully closing

- Max retries: 3 attempts with 100ms base delay, capped at 2 seconds

These values prevent cascading failures while allowing quick recovery when downstream services stabilize. Monitor your actual failure rates and adjust based on observed behavior rather than theoretical assumptions.
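
Before settling on these numbers, it helps to work out the worst-case time a single call can take once retries are included. The sketch below assumes the `RetryPolicy` behavior described earlier (one initial attempt plus `maxRetries` retries, each delay capped and then increased by up to 30%) and a per-attempt request timeout; `RetryBudget` is a hypothetical name.

```java
// Hypothetical helper: upper bounds on the time a retried call can consume.
final class RetryBudget {
    // Total sleep time across all retries, taking the maximum jitter each time.
    static long worstCaseBackoffMs(int maxRetries, long baseDelayMs, long maxDelayMs) {
        long total = 0;
        for (int attempt = 0; attempt < maxRetries; attempt++) {
            long capped = Math.min(baseDelayMs * (1L << attempt), maxDelayMs);
            total += capped + capped * 3 / 10; // capped delay plus 30% jitter
        }
        return total;
    }

    // Every attempt times out, and every backoff takes its maximum.
    static long worstCaseTotalMs(int maxRetries, long baseDelayMs, long maxDelayMs,
                                 long requestTimeoutMs) {
        return (maxRetries + 1L) * requestTimeoutMs
                + worstCaseBackoffMs(maxRetries, baseDelayMs, maxDelayMs);
    }
}
```

With 3 retries, a 100ms base, and a 2-second cap, the backoff alone adds at most 130 + 260 + 520 = 910ms. With a 5-second request timeout, the caller can wait up to 4 × 5000 + 910 = 20,910ms, so the application's own timeout toward the ambassador needs to be at least that long, or the retry settings need to shrink.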

The resilience layer transforms your ambassador from a simple proxy into a protective buffer. Your application code stays clean—it makes requests and handles business logic while the ambassador absorbs the chaos of distributed systems.

With retries and circuit breaking in place, the next step is deploying this ambassador alongside your services. Kubernetes sidecars provide the ideal deployment model, ensuring every pod gets its own resilience layer without modifying container images.

Deploying Ambassadors as Kubernetes Sidecars

The ambassador pattern finds its natural home in Kubernetes through the sidecar deployment model. By running the ambassador as a container alongside your application in the same pod, you get shared networking, coordinated lifecycle management, and zero application code changes.

Pod Configuration with Ambassador Containers

A pod with an ambassador sidecar contains two containers: your application and the proxy. They share the same network namespace, so localhost traffic goes over the loopback interface and never leaves the pod, which keeps the extra hop cheap.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: order-service
  labels:
    app: order-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: order-service
  template:
    metadata:
      labels:
        app: order-service
    spec:
      containers:
        - name: order-service
          image: myregistry.io/order-service:1.4.2
          ports:
            - containerPort: 8080
          env:
            - name: PAYMENT_SERVICE_URL
              value: "http://localhost:9000/api/payments"
          resources:
            requests:
              memory: "256Mi"
              cpu: "250m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10

        - name: ambassador
          image: myregistry.io/ambassador-proxy:2.1.0
          ports:
            - containerPort: 9000
          env:
            - name: UPSTREAM_URL
              value: "http://payment-service.payments.svc.cluster.local:8080"
            - name: RETRY_ATTEMPTS
              value: "3"
            - name: CIRCUIT_BREAKER_THRESHOLD
              value: "5"
          resources:
            requests:
              memory: "64Mi"
              cpu: "50m"
            limits:
              memory: "128Mi"
              cpu: "100m"
          livenessProbe:
            httpGet:
              path: /ambassador/health
              port: 9000
            initialDelaySeconds: 5
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /ambassador/ready
              port: 9000
            initialDelaySeconds: 5
            periodSeconds: 5
```

The application container sends requests to localhost:9000, completely unaware that it’s talking to a proxy. The ambassador forwards these requests to the actual payment service running elsewhere in the cluster.

Resource Allocation Strategy

Ambassador containers should be lightweight. Allocate resources based on expected throughput, but start conservative. A proxy handling request forwarding and circuit breaking logic needs far less memory than your application.

The configuration above allocates the ambassador roughly 20 to 25% of the application’s requested resources (50m of 250m CPU, 64Mi of 256Mi memory). Monitor actual usage after deployment and adjust accordingly, because over-provisioning sidecars across hundreds of pods adds up quickly.

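💡 Pro Tip: If you want the pod to get the “Guaranteed” QoS class, set resource requests equal to limits for every container in the pod, not just the ambassador. Kubernetes assigns Guaranteed only when all containers specify matching CPU and memory requests and limits; otherwise the pod is Burstable and is a more likely eviction candidate under node pressure.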

Health Checks and Failure Isolation

Both containers need independent health checks. The ambassador’s liveness probe ensures Kubernetes restarts it if the proxy crashes. The readiness probe prevents traffic from reaching the pod until the ambassador establishes its connection pools and loads circuit breaker state.
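
On the proxy side, the two probe paths from the pod spec need handlers. A possible shape is sketched below using the JDK's built-in `HttpServer`; `HealthEndpoints` and `markReady` are hypothetical names, and what counts as "ready" (connection pools warmed, configuration loaded) is left to the caller.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical probe handlers for the ambassador.
final class HealthEndpoints {
    private final AtomicBoolean ready = new AtomicBoolean(false);

    // Call once startup work (pools, config) has finished.
    void markReady() {
        ready.set(true);
    }

    // Liveness: the process is up and able to answer at all.
    int livenessStatus() {
        return 200;
    }

    // Readiness: 503 until markReady() has been called.
    int readinessStatus() {
        return ready.get() ? 200 : 503;
    }

    // HttpServer routes to the longest matching context path, so these
    // contexts take precedence over a catch-all "/" proxy context.
    void register(HttpServer server) {
        server.createContext("/ambassador/health", ex -> respond(ex, livenessStatus()));
        server.createContext("/ambassador/ready", ex -> respond(ex, readinessStatus()));
    }

    private static void respond(HttpExchange exchange, int status) throws IOException {
        exchange.sendResponseHeaders(status, -1); // -1 means no response body
        exchange.close();
    }
}
```

Registering these on the same server as the proxy handler keeps the probes honest: if the listener is wedged, the probes fail too.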

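When the ambassador’s liveness probe fails, Kubernetes restarts only the ambassador container, not the whole pod. While it restarts, its readiness probe fails, so the pod is marked not ready and Services stop routing traffic to it; healthy replicas absorb the load. This is the behavior you want, because an application without its proxy is effectively disconnected from its dependencies.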

Scaling Considerations

Each pod replica gets its own ambassador instance. This provides complete isolation—a circuit breaker tripping in one replica doesn’t affect others. However, this also means circuit breaker state isn’t shared across the deployment.

For most services, per-pod circuit breakers work well. If a downstream service is truly failing, all ambassadors will independently trip their breakers. For scenarios requiring coordinated state, you’ll need external storage like Redis to share circuit breaker status.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ambassador-config
data:
  config.yaml: |
    upstreams:
      - name: payment-service
        url: http://payment-service.payments.svc.cluster.local:8080
        retry:
          attempts: 3
          backoff: exponential
          initialDelay: 100ms
        circuitBreaker:
          failureThreshold: 5
          resetTimeout: 30s
        timeout: 5s
```

Mount this ConfigMap into your ambassador container for centralized configuration management across all replicas.
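
The durations in that file (`100ms`, `30s`, `5s`) are plain strings, so the ambassador has to turn them into `Duration` values when it loads the configuration. A minimal parser might look like the sketch below; `ConfigDurations` is a hypothetical name, and only the `ms`, `s`, and `m` suffixes are handled.

```java
import java.time.Duration;
import java.util.Locale;

// Hypothetical parser for duration strings such as "100ms", "30s", or "5m".
final class ConfigDurations {
    static Duration parse(String text) {
        String value = text.trim().toLowerCase(Locale.ROOT);
        // Check "ms" before "s" and "m", since it ends with "s" as well.
        if (value.endsWith("ms")) {
            return Duration.ofMillis(number(value, 2));
        }
        if (value.endsWith("s")) {
            return Duration.ofSeconds(number(value, 1));
        }
        if (value.endsWith("m")) {
            return Duration.ofMinutes(number(value, 1));
        }
        throw new IllegalArgumentException("Unsupported duration: " + text);
    }

    // Parses the numeric part that precedes a suffix of the given length.
    private static long number(String value, int suffixLength) {
        return Long.parseLong(value.substring(0, value.length() - suffixLength).trim());
    }
}
```

Failing fast on an unknown suffix is deliberate: a silently ignored timeout is harder to debug than a proxy that refuses to start.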

With your ambassador deployed and handling the complexity of service communication, you’ll want visibility into what’s actually happening. Retries, circuit breaker state changes, and latency distributions are invisible without proper instrumentation.

Observability: Making the Invisible Visible

Your ambassador proxy now handles retries, circuit breaking, and request routing—but how do you know it’s actually working? Without proper observability, your proxy becomes a black box sitting between your services, making debugging a nightmare when things go wrong. When a request fails, you need to answer fundamental questions: Did the ambassador retry? Was the circuit breaker open? How long did the upstream service take to respond?

The good news: because all traffic flows through your ambassador, you have a single point to capture metrics, traces, and logs for every request. This architectural chokepoint, which might seem like a liability, actually becomes your greatest observability asset. Let’s instrument our proxy to expose this goldmine of operational data.

Exposing Prometheus Metrics

First, add a metrics endpoint that Prometheus can scrape. We’ll track the essential signals: request counts, latencies, and circuit breaker states. These metrics form the foundation of your monitoring dashboards and alerting rules.

```java
// Uses Micrometer with its Prometheus registry.
public class AmbassadorMetrics {
    // Typed as PrometheusMeterRegistry so that registry.scrape() is available;
    // serve its output from a /metrics handler for Prometheus to collect.
    private static final PrometheusMeterRegistry registry =
        new PrometheusMeterRegistry(PrometheusConfig.DEFAULT);

    private final Counter requestsTotal;
    private final Counter requestsRetried;
    private final Timer requestLatency;
    private final AtomicInteger circuitBreakerState;

    public AmbassadorMetrics(String targetService) {
        this.requestsTotal = Counter.builder("ambassador_requests_total")
            .tag("target", targetService)
            .register(registry);

        this.requestsRetried = Counter.builder("ambassador_requests_retried_total")
            .tag("target", targetService)
            .register(registry);

        this.requestLatency = Timer.builder("ambassador_request_duration_seconds")
            .tag("target", targetService)
            .publishPercentiles(0.5, 0.95, 0.99)
            .register(registry);

        this.circuitBreakerState = registry.gauge(
            "ambassador_circuit_breaker_state",
            Tags.of("target", targetService),
            new AtomicInteger(0) // 0=closed, 1=open, 2=half-open
        );
    }

    public void recordRequest(long durationNanos, boolean wasRetried) {
        requestsTotal.increment();
        if (wasRetried) requestsRetried.increment();
        requestLatency.record(durationNanos, TimeUnit.NANOSECONDS);
    }

    public void updateCircuitState(CircuitBreaker.State state) {
        // Relies on the enum order CLOSED, OPEN, HALF_OPEN.
        circuitBreakerState.set(state.ordinal());
    }
}
```

The published percentiles deserve special attention. Publishing p50, p95, and p99 latencies lets you distinguish between “the service is slow for everyone” and “the service is slow for a small subset of requests”, a critical distinction when diagnosing performance issues. Keep in mind that percentiles published this way are computed inside each ambassador instance and cannot be meaningfully averaged across pods; if you need fleet-wide percentiles, publish histogram buckets (Micrometer’s `publishPercentileHistogram()`) and aggregate them in Prometheus.
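
To see why the tail percentiles matter, here is a small worked example using the nearest-rank definition of a percentile. It is a plain-Java sketch for illustration, not how Micrometer computes its values; `LatencyPercentiles` is a hypothetical name.

```java
import java.util.Arrays;

// Hypothetical helper: nearest-rank percentile over a set of latency samples.
final class LatencyPercentiles {
    // Returns the smallest sample such that at least `percent` percent of
    // all samples are less than or equal to it (percent in 1..100).
    static long percentile(long[] samplesMs, int percent) {
        if (samplesMs.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be 1..100");
        }
        long[] sorted = samplesMs.clone();
        Arrays.sort(sorted);
        // Integer ceiling of percent * n / 100 avoids floating-point surprises.
        int rank = (percent * sorted.length + 99) / 100;
        return sorted[rank - 1];
    }
}
```

Take 100 requests where 98 finish in 20ms and 2 take 3000ms. The p50 and p95 are both 20ms, while the p99 is 3000ms: the service is fine for almost everyone and very slow for a few, which an average (about 80ms) would hide.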

Distributed Tracing Through the Proxy

Your ambassador must propagate trace context, or you’ll lose visibility across service boundaries. Without proper context propagation, your distributed traces will show disconnected fragments instead of the complete request journey. Inject the tracing headers before forwarding requests:

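```java
// Uses the OpenTracing API. RequestInterceptor, HttpRequest, and
// HttpHeadersCarrier are the proxy's own types and are not shown here.
public class TracingInterceptor implements RequestInterceptor {
    private final Tracer tracer;

    public TracingInterceptor(Tracer tracer) {
        this.tracer = tracer;
    }

    @Override
    public HttpRequest intercept(HttpRequest request) {
        // The span is started here but not finished; call span.finish() once
        // the upstream response arrives so it records the full round trip.
        Span span = tracer.buildSpan("ambassador-proxy")
            .withTag("target.url", request.getUri().toString())
            .start();

        try (Scope scope = tracer.activateSpan(span)) {
            // Write the trace context into the outbound request headers.
            tracer.inject(span.context(), Format.Builtin.HTTP_HEADERS,
                new HttpHeadersCarrier(request.getHeaders()));
            return request;
        }
    }
}
```

This ensures your traces show the complete request path: client → ambassador → target service → response. When investigating slow requests, you can immediately see whether the latency originated in the ambassador layer (perhaps due to retries) or in the downstream service itself.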
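
If you are not using a tracing library, the same idea can be expressed with the W3C Trace Context `traceparent` header, whose value has the form `version-traceid-spanid-flags`. This is a different mechanism from the OpenTracing code above, shown only to make the propagation step concrete; `TraceParent` is a hypothetical helper. The proxy keeps the caller's trace ID and flags and substitutes its own span ID.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical helper for propagating a W3C traceparent header.
final class TraceParent {
    // Returns the value to send upstream: same trace ID and flags, fresh span ID.
    static String childOf(String incoming) {
        String[] parts = incoming.trim().split("-");
        if (parts.length != 4 || parts[1].length() != 32 || parts[2].length() != 16) {
            throw new IllegalArgumentException("Malformed traceparent: " + incoming);
        }
        return parts[0] + "-" + parts[1] + "-" + newSpanId() + "-" + parts[3];
    }

    // 8 random bytes as 16 lowercase hex characters; all zeros is not allowed.
    static String newSpanId() {
        long id;
        do {
            id = ThreadLocalRandom.current().nextLong();
        } while (id == 0);
        return String.format("%016x", id);
    }
}
```

Because the trace ID is unchanged, the upstream service's spans join the same trace as the application's, with the ambassador's span sitting between them.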

Alerting on Circuit Breaker State Changes

Circuit breakers opening is a critical signal that demands immediate visibility. Unlike gradual performance degradation, a circuit breaker transition represents a discrete event that often indicates a significant system state change. Push state changes to your alerting system immediately:

    circuitBreaker.getEventPublisher()
        .onStateTransition(event -> {
            log.warn("Circuit breaker state change: {} -> {} for service {}",
                event.getStateTransition().getFromState(),
                event.getStateTransition().getToState(),
                targetServiceName);

            metrics.updateCircuitState(event.getStateTransition().getToState());

            if (event.getStateTransition().getToState() == State.OPEN) {
                alertManager.fire(Alert.builder()
                    .name("ambassador_circuit_open")
                    .severity(Severity.WARNING)
                    .label("service", targetServiceName)
                    .annotation("summary", "Circuit breaker opened for " + targetServiceName)
                    .build());
            }
        });

💡 Pro Tip: Alert on circuit breakers staying open for more than 5 minutes, not on every state change. Transient failures that self-heal don’t need human attention. Configure your alerting rules to fire only when the circuit remains open across multiple scrape intervals.
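If your alerting system cannot express "open for at least N minutes" itself, the same idea can be approximated inside the ambassador. The sketch below is one possible shape, using only the JDK: `SustainedOpenAlert`, its `State` enum, and both method names are hypothetical and not part of any circuit breaker library. It treats HALF_OPEN as still unhealthy, on the assumption that a breaker cycling between OPEN and HALF_OPEN has not recovered.

```java
import java.time.Duration;
import java.time.Instant;

public class SustainedOpenAlert {

    // Hypothetical mirror of the breaker states, to keep the sketch standalone.
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final Duration threshold;
    private Instant unhealthySince; // null while the circuit is closed
    private boolean alerted;

    SustainedOpenAlert(Duration threshold) {
        this.threshold = threshold;
    }

    // Call from the state-transition listener with the new state.
    synchronized void onTransition(State to, Instant at) {
        if (to == State.CLOSED) {
            // Recovered: forget the episode so the next one can alert again.
            unhealthySince = null;
            alerted = false;
        } else if (unhealthySince == null) {
            // First OPEN of this episode; later OPEN/HALF_OPEN flips keep the start time.
            unhealthySince = at;
        }
    }

    // Call periodically; returns true at most once per unhealthy episode,
    // and only after the circuit has been non-closed for longer than the threshold.
    synchronized boolean shouldFire(Instant now) {
        if (unhealthySince == null || alerted) {
            return false;
        }
        if (Duration.between(unhealthySince, now).compareTo(threshold) > 0) {
            alerted = true;
            return true;
        }
        return false;
    }
}
```

A scheduled task could call `shouldFire(Instant.now())` every few seconds and raise the alert when it returns true. A breaker that opens and closes again within the threshold never triggers it.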

Structured Logging for Request Flows

When debugging production issues, structured logs let you reconstruct the full request lifecycle. Unlike free-form log messages, structured logs enable powerful queries across your log aggregation system. You can filter by trace ID, target service, status code, or any combination of fields.

    public void logRequest(ProxiedRequest request, ProxiedResponse response) {
        log.info("ambassador_request",
            kv("trace_id", request.getTraceId()),
            kv("method", request.getMethod()),
            kv("target", request.getTargetUri()),
            kv("status", response.getStatusCode()),
            kv("duration_ms", response.getDurationMs()),
            kv("retry_count", request.getRetryCount()),
            kv("circuit_state", circuitBreaker.getState().name())
        );
    }

The retry_count field proves particularly valuable during incident investigations. When you see requests with high retry counts clustered around a specific time window, you’ve identified exactly when the downstream instability began, often before your alerting systems detected the problem.

With these four pillars in place (metrics, traces, events, and logs), your ambassador transforms from an opaque middleware layer into a window showing exactly how your services communicate. You can now answer questions like “why did this request take 3 seconds?” or “when did we start seeing failures to the payment service?” without guessing. The correlation between these signals proves especially powerful: a trace ID in your logs links directly to the distributed trace, and the timestamps on that trace tell you which metric values to inspect for that moment.

Of course, all this instrumentation adds complexity. In the next section, we’ll examine scenarios where the ambassador pattern creates more problems than it solves.

When Not to Use the Ambassador Pattern

The ambassador pattern solves real problems, but it’s not free. Before adding another moving part to your architecture, understand where the costs outweigh the benefits.

The Latency Tax

Every ambassador sits in the request path. Even a well-optimized proxy adds latency on each hop, on the order of 1-5ms. For high-frequency trading systems, real-time gaming backends, or any service where P99 latency is contractually bound, this overhead matters. If your service makes 10 sequential downstream calls per request and each hop costs 5ms, you’ve added up to 50ms to your response time, and the cost compounds as call chains deepen.

Measure before you commit. Profile your existing latency budget and determine whether the operational benefits justify the performance cost.
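A back-of-the-envelope check can be sketched in a few lines. The figures below (160ms baseline, 200ms budget, 5ms per hop) are made-up inputs rather than measurements, and the class and method names are hypothetical. The sketch also assumes that fully parallel calls overlap, so their proxy overhead is roughly one hop rather than the sum.

```java
public class LatencyTax {

    // Sequential calls: each hop's proxy overhead adds to the total.
    static long sequentialOverheadMs(int calls, long perHopMs) {
        return calls * perHopMs;
    }

    // Fully parallel calls: hops overlap, so the overhead is about one hop.
    static long parallelOverheadMs(int calls, long perHopMs) {
        return calls > 0 ? perHopMs : 0;
    }

    // True when the baseline latency plus proxy overhead stays within budget.
    static boolean fitsBudget(long baselineMs, long overheadMs, long budgetMs) {
        return baselineMs + overheadMs <= budgetMs;
    }

    public static void main(String[] args) {
        int calls = 10;
        long perHopMs = 5;      // assumed worst-case proxy overhead per hop
        long baselineMs = 160;  // assumed latency without the ambassador
        long budgetMs = 200;    // assumed latency budget

        long sequential = sequentialOverheadMs(calls, perHopMs);
        long parallel = parallelOverheadMs(calls, perHopMs);

        // prints "sequential: +50ms, fits budget: false"
        System.out.printf("sequential: +%dms, fits budget: %b%n",
            sequential, fitsBudget(baselineMs, sequential, budgetMs));
        // prints "parallel: +5ms, fits budget: true"
        System.out.printf("parallel: +%dms, fits budget: %b%n",
            parallel, fitsBudget(baselineMs, parallel, budgetMs));
    }
}
```

Replace the assumed inputs with numbers from your own profiling; the shape of your call graph matters as much as the per-hop cost.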

Simple Systems Don’t Need Complex Solutions

A monolith talking to a single database doesn’t need an ambassador. Neither does a small microservices deployment with predictable traffic patterns and services that already handle their own retries gracefully.

The ambassador pattern shines when you have:

- Multiple services with inconsistent resilience implementations

- Legacy applications you can’t modify

- Polyglot environments where implementing the same retry logic in five languages creates maintenance nightmares

If you’re running three services written by the same team in the same language, embedding resilience libraries directly into your applications is simpler, faster, and easier to debug.

Service Meshes and API Gateways Already Exist

Service meshes like Istio and Linkerd, and the Envoy proxy that several meshes build on, provide ambassador-like functionality out of the box. They handle retries, circuit breaking, mTLS, and observability without custom code. API gateways like Kong or AWS API Gateway offer similar capabilities at the edge.

Before building custom ambassadors, ask: does your organization already run a service mesh? If yes, leverage it. The ambassador pattern emerged before service meshes became production-ready. Today, rolling your own proxy often means reinventing wheels that Envoy perfected years ago.

The Maintenance Burden

Custom ambassadors require ongoing investment. Security patches, performance tuning, feature additions, and the institutional knowledge to operate them—all land on your team. Off-the-shelf solutions distribute that burden across thousands of organizations.

💡 Pro Tip: If you need capabilities beyond what service meshes provide, consider extending Envoy with custom filters rather than building from scratch. You get the battle-tested foundation with the flexibility to add domain-specific logic.

The ambassador pattern remains valuable for specific use cases: air-gapped environments, extreme customization requirements, or organizations with the engineering bandwidth to maintain infrastructure components. For everyone else, evaluate managed alternatives first.

Understanding these tradeoffs positions you to choose the right tool—whether that’s a custom ambassador, a service mesh, or resilience libraries embedded directly in your services.

Key Takeaways

- Start with a minimal ambassador that handles only timeouts and logging, then add resilience features as you encounter specific failure modes in production

- Deploy ambassadors as sidecar containers in Kubernetes to get process isolation and separate resource limits while keeping traffic on localhost, with no network hop between pods

- Instrument your ambassador with metrics for request latency, error rates, and circuit breaker state so you can detect problems before your users do

- Evaluate service mesh solutions like Istio or Linkerd before building custom ambassadors—they provide ambassador functionality with less maintenance overhead